Camera Calibration Using a Chessboard

Preparation

Traditionally, a camera is calibrated using a chessboard. Existing documentation already covers camera calibration in detail; see, for example, the OpenCV-Python Tutorials.

Coding

Our code can be found at OpenCV Examples.

First of all

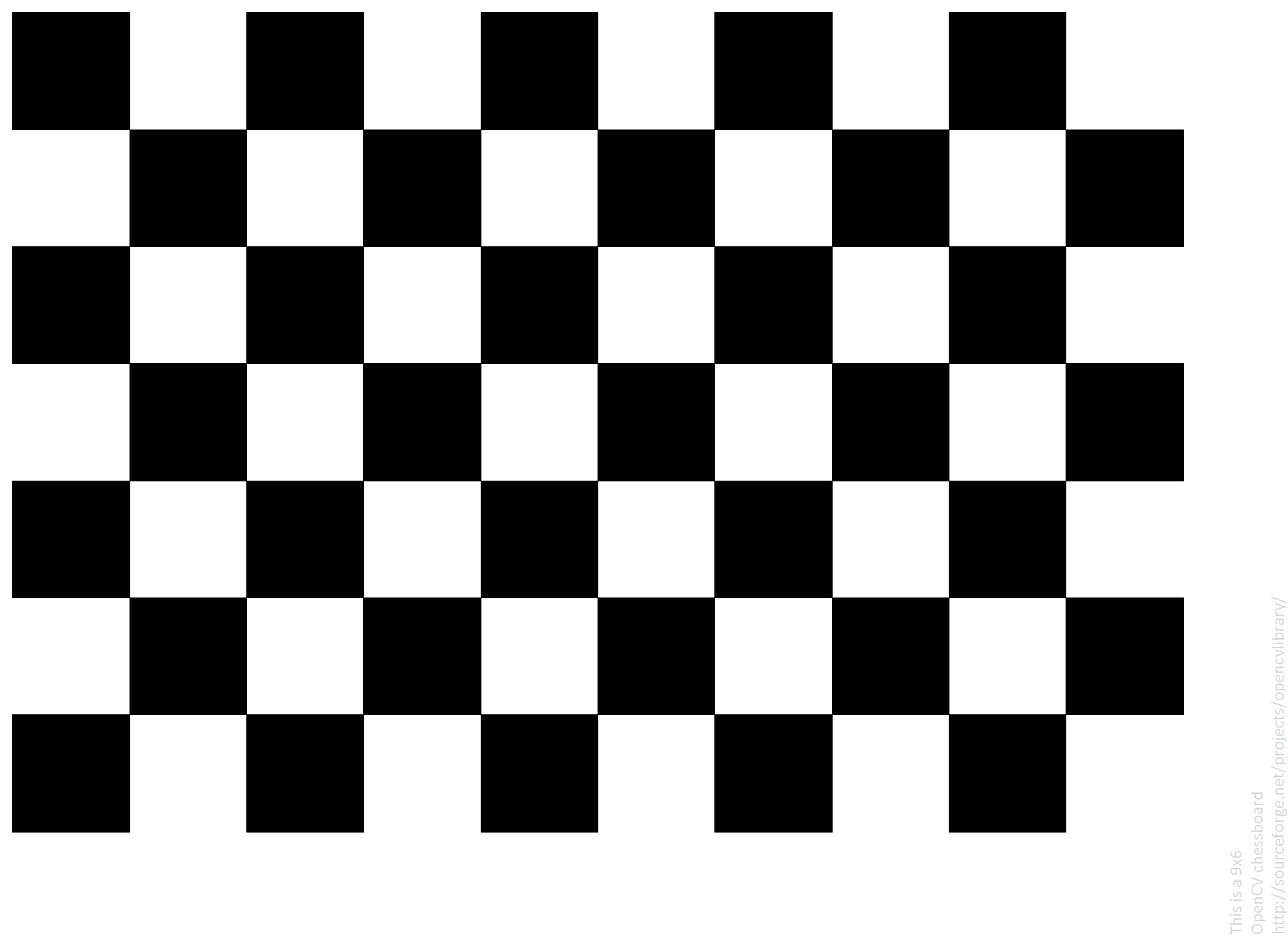

Unlike camera pose estimation, which deals with the extrinsic parameters, camera calibration calculates the intrinsic parameters. For that purpose, there is NO need to measure the cell size of the chessboard: the cell size only scales the estimated translations, not the camera matrix or the distortion coefficients. The chessboard we use is just an ordinary chessboard, as shown above.
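To see why the cell size drops out, here is a short worked equation under the usual pinhole model. All symbols are introduced here for illustration and do not appear in the original code: $K$ is the intrinsic matrix, $(R, t)$ the pose of the board, $X$ a corner in board coordinates, $\tilde{x}$ its homogeneous pixel coordinates, $s$ the projective scale, and $\lambda$ the (unknown) cell size.

```latex
s\,\tilde{x} = K\,(R\,X + t)
\quad\Longrightarrow\quad
K\,\bigl(R\,(\lambda X) + \lambda t\bigr)
  = \lambda\,K\,(R\,X + t)
  = (\lambda s)\,\tilde{x}
```

Scaling the board by $\lambda$ is absorbed by scaling $t$ and $s$ by the same factor, so the pixel $\tilde{x}$ and therefore $K$ stay the same. Lens distortion is applied to the normalized coordinates $(R\,X + t)/z$, which are unchanged by $\lambda$ as well.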

Secondly

Import the required packages.

    import numpy as np

Thirdly

Some initialization needs to be done: 1) define the termination criteria used later when refining the corners to sub-pixel accuracy; 2) initialize the object point coordinates.

    # termination criteria

Fourthly

After the grid of 2D chessboard corners has been located in 10 frames (10 can be changed to any positive integer, although more views from varied angles generally give a more stable result), the camera matrix and distortion coefficients can be calculated by calling the function calibrateCamera. Here, we test on a real-time camera stream.

    cap = cv2.VideoCapture(0)

Finally

Write the calculated calibration parameters to a YAML file. This is a bit tricky: you MUST call tolist() to convert each numpy array to a plain Python list before dumping it, because a numpy array is not serialized as a plain YAML list of numbers.

    # It's very important to transform the matrix to list.
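As a sketch of this step, assuming PyYAML is the YAML library in use: the function name `save_calibration` and the keys `camera_matrix` and `dist_coeff` are hypothetical names chosen for this example.

```python
import numpy as np
import yaml


def save_calibration(mtx, dist, path="calibration.yaml"):
    """Write the camera matrix and distortion coefficients as plain lists."""
    data = {
        # tolist() turns each numpy array into nested Python lists,
        # which YAML can represent as ordinary sequences of numbers.
        "camera_matrix": np.asarray(mtx).tolist(),
        "dist_coeff": np.asarray(dist).tolist(),
    }
    with open(path, "w") as f:
        yaml.safe_dump(data, f)
```

Using `safe_dump` here is a design choice: it refuses anything that is not a plain Python type, so a forgotten `tolist()` fails loudly instead of silently writing Python-specific tags into the file.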

Additionally

You may use the following code to load the calibration parameters from the file “calibration.yaml”.

    with open('calibration.yaml') as f:
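A possible way to complete the loading step, again assuming PyYAML and the hypothetical keys `camera_matrix` and `dist_coeff` (use whatever keys your file was written with):

```python
import numpy as np
import yaml


def load_calibration(path="calibration.yaml"):
    """Read the calibration file and return numpy arrays again."""
    with open(path) as f:
        data = yaml.safe_load(f)
    # The file stores plain lists, so convert them back to arrays
    # before passing them to OpenCV functions.
    mtx = np.array(data["camera_matrix"], dtype=np.float64)
    dist = np.array(data["dist_coeff"], dtype=np.float64)
    return mtx, dist
```

The returned `mtx` and `dist` can then be passed to OpenCV functions that expect a camera matrix and distortion coefficients, such as `cv2.undistort`.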