Preparation

Before you start coding, make sure your camera has already been calibrated (camera calibration is covered in our blog as well). The code below assumes that the camera calibration parameters can be loaded successfully.

Coding

The code can be found at OpenCV Examples.

First of all

We need to make sure that cv2.so, the compiled OpenCV module for Python, is on Python's module search path (sys.path).

```python
import sys
```
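If cv2 cannot be imported, one common fix is to put the directory that contains cv2.so at the front of sys.path before importing it. The minimal sketch below does that idempotently and then checks whether a cv2 module can be found. The directory is a hypothetical placeholder, not a path from the original setup.

```python
import importlib.util
import sys

# Hypothetical directory containing cv2.so; replace it with the one on your machine.
OPENCV_PY_DIR = "/usr/local/lib/python3/site-packages"


def add_to_sys_path(directory):
    """Prepend `directory` to sys.path once; repeated calls do nothing."""
    if directory not in sys.path:
        sys.path.insert(0, directory)
    return sys.path


def cv2_available():
    """Return True if a module named cv2 can be found on the current sys.path."""
    return importlib.util.find_spec("cv2") is not None


add_to_sys_path(OPENCV_PY_DIR)
print("cv2 importable:", cv2_available())
```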

Then, we import some packages to be used.

```python
import os
```

Secondly

Next, we load the camera calibration parameters, including cameraMatrix, distCoeffs, etc. For example, your code might look like the following:

```python
calibrationFile = "calibrationFileName.xml"
calibrationParams = cv2.FileStorage(calibrationFile, cv2.FILE_STORAGE_READ)
```
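In practice, cv2.FileStorage and getNode(...).mat() read this file for you. To show what an OpenCV XML calibration file actually stores for each matrix (rows, cols, a type code and a row-major data list), here is a stdlib-only sketch that parses one matrix node. The numbers in the sample are made up for illustration, and the sketch only handles the plain `opencv-matrix` layout.

```python
import xml.etree.ElementTree as ET

# Made-up sample in the layout OpenCV writes for matrices (values are illustrative only).
SAMPLE_XML = """<?xml version="1.0"?>
<opencv_storage>
<cameraMatrix type_id="opencv-matrix">
  <rows>3</rows>
  <cols>3</cols>
  <dt>d</dt>
  <data>
    800. 0. 960. 0. 800. 540. 0. 0. 1.</data></cameraMatrix>
</opencv_storage>
"""


def read_opencv_matrix(xml_text, node_name):
    """Return the matrix stored under `node_name` as a list of row lists."""
    root = ET.fromstring(xml_text)
    node = root.find(node_name)
    if node is None:
        raise KeyError(f"node {node_name!r} not found")
    rows = int(node.findtext("rows"))
    cols = int(node.findtext("cols"))
    values = [float(v) for v in node.findtext("data").split()]
    if len(values) != rows * cols:
        raise ValueError("data length does not match rows * cols")
    # The data list is stored row by row.
    return [values[r * cols:(r + 1) * cols] for r in range(rows)]


K = read_opencv_matrix(SAMPLE_XML, "cameraMatrix")
print(K)  # [[800.0, 0.0, 960.0], [0.0, 800.0, 540.0], [0.0, 0.0, 1.0]]
```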

Because we are testing a calibrated fisheye camera, two extra parameters must also be loaded from the calibration file.

```python
r = calibrationParams.getNode("R").mat()
```
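Fisheye lenses need their own functions because OpenCV's fisheye module uses a different distortion model from the standard one: distortion is a polynomial in the angle θ between the incoming ray and the optical axis, with four coefficients k1..k4. The pure-Python sketch below projects one camera-frame point with that model (skew ignored). The intrinsic values in the usage line are hypothetical.

```python
import math


def fisheye_project(point, fx, fy, cx, cy, k=(0.0, 0.0, 0.0, 0.0)):
    """Project a 3D camera-frame point with the OpenCV fisheye model (no skew)."""
    X, Y, Z = point
    if Z <= 0:
        raise ValueError("point must be in front of the camera (Z > 0)")
    a, b = X / Z, Y / Z
    r = math.hypot(a, b)
    theta = math.atan(r)  # angle between the ray and the optical axis
    k1, k2, k3, k4 = k
    t2 = theta * theta
    theta_d = theta * (1 + k1 * t2 + k2 * t2**2 + k3 * t2**3 + k4 * t2**4)
    # theta_d / r tends to 1 as r -> 0, so on-axis points land on the principal point.
    scale = theta_d / r if r > 0 else 1.0
    x_d, y_d = scale * a, scale * b
    return fx * x_d + cx, fy * y_d + cy


u, v = fisheye_project((1.0, 0.0, 1.0), fx=400.0, fy=400.0, cx=960.0, cy=540.0)
print(u, v)  # theta = pi/4, so u = 400 * pi/4 + 960, about 1274.16
```

With all k set to zero this is the pure equidistant projection (image radius proportional to θ), which compresses wide angles far less aggressively than a pinhole would stretch them.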

Afterwards, the two mapping matrices are pre-computed once by calling cv2.fisheye.initUndistortRectifyMap(), assuming the images to be processed are 1080p (1920 × 1080 pixels):

```python
image_size = (1920, 1080)  # (width, height), the order OpenCV expects for sizes
```
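The two maps record, for every pixel of the undistorted output image, which location to sample in the distorted input; cv2.remap() then applies them to each frame. Because the calibration does not change, the maps only need to be computed once. The NumPy sketch below mimics that lookup with nearest-neighbour sampling and float maps of source x and y coordinates. It is a simplified stand-in for cv2.remap (which interpolates and also supports fixed-point map formats), not its implementation.

```python
import numpy as np


def remap_nearest(src, map_x, map_y, fill=0):
    """Build dst[i, j] = src[round(map_y[i, j]), round(map_x[i, j])]; out-of-range gets `fill`."""
    xs = np.rint(map_x).astype(int)
    ys = np.rint(map_y).astype(int)
    h, w = src.shape[:2]
    valid = (xs >= 0) & (xs < w) & (ys >= 0) & (ys < h)
    dst = np.full(map_x.shape + src.shape[2:], fill, dtype=src.dtype)
    dst[valid] = src[ys[valid], xs[valid]]
    return dst


# Toy example: a map that samples one pixel to the right, shifting the image left.
src = np.arange(12, dtype=np.uint8).reshape(3, 4)
jj, ii = np.meshgrid(np.arange(4), np.arange(3))
out = remap_nearest(src, (jj + 1).astype(np.float32), ii.astype(np.float32))
print(out)
```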

Thirdly

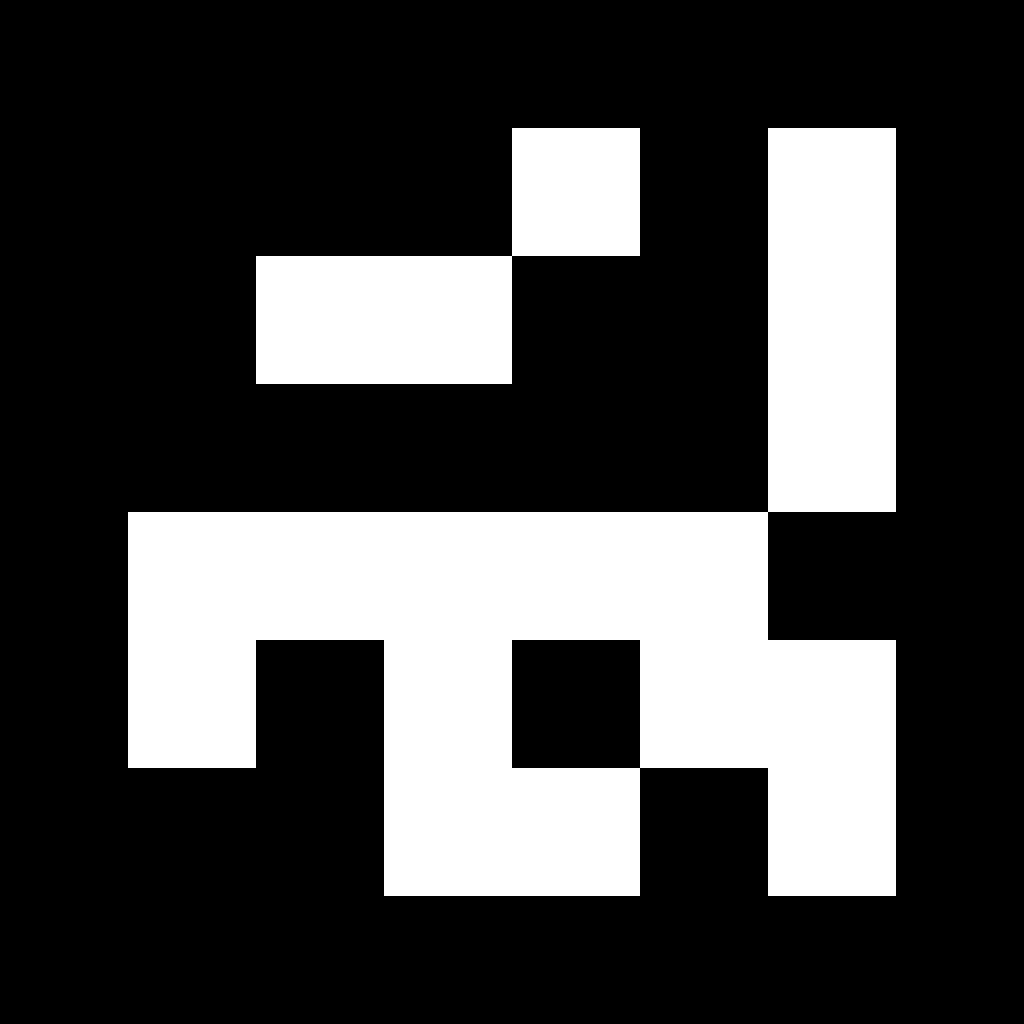

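Next, a marker dictionary is loaded. OpenCV provides predefined ArUco dictionaries in four bit-grid sizes, 4X4, 5X5, 6X6 and 7X7, each available with 50, 100, 250 or 1000 markers, besides a few others such as DICT_ARUCO_ORIGINAL. Here, aruco.DICT_6X6_1000 is chosen arbitrarily as our example; a marker of this kind is shown in the image above.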

```python
# In OpenCV 4.7 and later, use aruco.getPredefinedDictionary() instead.
aruco_dict = aruco.Dictionary_get(aruco.DICT_6X6_1000)
```
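Each marker in DICT_6X6_1000 encodes a 6 × 6 grid of bits surrounded by a black border (one cell wide by default), so the printed square is 8 × 8 cells. The NumPy sketch below renders that layout from a bit matrix. The bit pattern is hypothetical and is not an entry of the real dictionary; to print an actual marker, use OpenCV's own marker-drawing function.

```python
import numpy as np


def render_marker(bits, cell_px=10, border_bits=1):
    """Render a square bit matrix (1 = white) inside a black border as a uint8 image."""
    bits = np.asarray(bits, dtype=np.uint8)
    n = bits.shape[0]
    cells = np.zeros((n + 2 * border_bits, n + 2 * border_bits), dtype=np.uint8)
    cells[border_bits:border_bits + n, border_bits:border_bits + n] = bits * 255
    # Scale each cell up to a cell_px x cell_px block of pixels.
    return np.kron(cells, np.ones((cell_px, cell_px), dtype=np.uint8))


# Hypothetical 6x6 bit pattern (NOT a real DICT_6X6_1000 marker).
bits = np.eye(6, dtype=np.uint8)
img = render_marker(bits, cell_px=10)
print(img.shape)  # (80, 80)
```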

After printing this square ArUco marker, measure its edge length and store it in the variable markerLength. The estimated translations will be expressed in the same unit.

```python
markerLength = 20  # Here, our measurement unit is the centimetre.
```
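markerLength matters because the pose is recovered by matching the four detected image corners to the marker's known 3D corners, and those depend only on this length. The sketch below builds the 3D corners, assuming the convention of recent OpenCV versions: origin at the marker centre, marker on the z = 0 plane, corners ordered top-left, top-right, bottom-right, bottom-left (assumed to match the order of the detected corners). Since the length is in centimetres, any translation solved from these points is in centimetres too.

```python
import numpy as np


def marker_object_points(marker_length):
    """3D corners of a square marker centred at the origin on the z = 0 plane.

    Order: top-left, top-right, bottom-right, bottom-left (assumed convention).
    """
    h = marker_length / 2.0
    return np.array([[-h,  h, 0.0],
                     [ h,  h, 0.0],
                     [ h, -h, 0.0],
                     [-h, -h, 0.0]], dtype=np.float32)


obj = marker_object_points(20)  # markerLength = 20 cm
print(obj)
```

Together with the detected corners, cameraMatrix and distortion coefficients, points like these are the input to a PnP solver such as cv2.solvePnP.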

Meanwhile, create the ArUco detector parameters with their default values.

```python
# In OpenCV 4.7 and later, use aruco.DetectorParameters() instead.
arucoParams = aruco.DetectorParameters_create()
```
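One step inside the detector is identification: the bits read from each candidate square are compared with the dictionary in all four 90° rotations, since a marker can appear in any orientation, and the matching rotation also lets the corners be put in a consistent order. The sketch below shows the idea with exact matching against a hypothetical two-entry dictionary. OpenCV's real detector additionally tolerates a limited number of bit errors, so treat this as an illustration rather than its algorithm.

```python
import numpy as np

# Hypothetical 4x4 dictionary entries (not real ArUco codes).
TOY_DICT = {
    0: np.array([[1, 0, 0, 0],
                 [1, 1, 0, 0],
                 [0, 1, 1, 0],
                 [0, 0, 1, 1]], dtype=np.uint8),
    1: np.array([[1, 1, 1, 0],
                 [0, 0, 1, 0],
                 [1, 0, 0, 1],
                 [0, 1, 0, 1]], dtype=np.uint8),
}


def identify(bits, dictionary):
    """Return (marker_id, k) such that rot90(bits, k) equals an entry, else None."""
    bits = np.asarray(bits, dtype=np.uint8)
    for k in range(4):
        rotated = np.rot90(bits, k)  # k quarter-turns counter-clockwise
        for marker_id, code in dictionary.items():
            if np.array_equal(rotated, code):
                return marker_id, k
    return None


observed = np.rot90(TOY_DICT[1], -1)  # marker 1 seen rotated 90 degrees clockwise
print(identify(observed, TOY_DICT))   # (1, 1)
```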

Finally

Now we estimate the camera poses. Here we process a sequence of images rather than a video stream, so we first list all image file names in order.

```python
imgDir = "imgSequence"  # Specify the image directory
```
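os.listdir() returns names in arbitrary order, and a plain sorted() puts img10.png before img2.png. If the frames are numbered without zero padding, a natural-sort key keeps them in capture order. A small sketch; the file names in the usage line are hypothetical.

```python
import os
import re


def natural_key(name):
    """Split 'img10.png' into ['img', 10, '.png'] so numbers compare numerically."""
    return [int(part) if part.isdigit() else part.lower()
            for part in re.split(r"(\d+)", name)]


def list_images(img_dir, exts=(".png", ".jpg", ".jpeg")):
    """Return image file paths in img_dir, sorted in natural order."""
    names = [n for n in os.listdir(img_dir) if n.lower().endswith(exts)]
    return [os.path.join(img_dir, n) for n in sorted(names, key=natural_key)]


names = ["img10.png", "img2.png", "img1.png"]
print(sorted(names, key=natural_key))  # ['img1.png', 'img2.png', 'img10.png']
```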

Then, we calculate the camera posture frame by frame:

```python
for i in range(0, nbOfImgs):
```
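For each frame, a marker pose estimate (for example from aruco.estimatePoseSingleMarkers or cv2.solvePnP) gives a rotation vector rvec (axis-angle) and a translation tvec that map marker coordinates into the camera frame. To get the camera's own pose in the marker (world) frame, convert rvec to a rotation matrix R and invert the transform: the camera centre is −Rᵀ·tvec. In OpenCV, cv2.Rodrigues() performs the conversion; the NumPy version below only spells out the same Rodrigues formula for illustration, and the example pose is hypothetical.

```python
import numpy as np


def rodrigues(rvec):
    """Axis-angle vector -> 3x3 rotation matrix via the Rodrigues formula."""
    rvec = np.asarray(rvec, dtype=float).reshape(3)
    theta = np.linalg.norm(rvec)
    if theta < 1e-12:
        return np.eye(3)
    kx, ky, kz = rvec / theta
    K = np.array([[0.0, -kz, ky],
                  [kz, 0.0, -kx],
                  [-ky, kx, 0.0]])  # cross-product matrix of the unit axis
    return np.eye(3) + np.sin(theta) * K + (1.0 - np.cos(theta)) * (K @ K)


def camera_pose_in_marker_frame(rvec, tvec):
    """Invert the marker-to-camera transform; return (R_cam_in_marker, cam_position)."""
    R = rodrigues(rvec)
    t = np.asarray(tvec, dtype=float).reshape(3)
    R_inv = R.T            # the inverse of a rotation is its transpose
    position = -R_inv @ t  # camera centre expressed in marker coordinates
    return R_inv, position


# Hypothetical pose: marker 100 cm straight ahead, no rotation.
R_wc, cam_pos = camera_pose_in_marker_frame([0.0, 0.0, 0.0], [0.0, 0.0, 100.0])
print(cam_pos)  # approximately (0, 0, -100)
```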

The drawn axes show the world coordinate frame, i.e. the marker's origin and orientation, as estimated from the images taken by the camera under test.
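Drawing the axes amounts to transforming the marker-frame points (0,0,0), (L,0,0), (0,L,0) and (0,0,L) into the camera frame with R and tvec, then projecting them with the camera matrix; OpenCV's axis-drawing helpers also apply the lens distortion model. The sketch below is pinhole-only (no distortion, so it suits frames already undistorted with the remapping maps), and the intrinsics and pose in the usage lines are hypothetical.

```python
import numpy as np


def project_axes(R, tvec, camera_matrix, length):
    """Project the marker origin and axis tips with a distortion-free pinhole model.

    Returns a 4x2 array of pixels: origin, x-tip, y-tip, z-tip.
    """
    pts = np.array([[0.0, 0.0, 0.0],
                    [length, 0.0, 0.0],
                    [0.0, length, 0.0],
                    [0.0, 0.0, length]])
    # Row-vector form of p_cam = R @ p_marker + t.
    cam = pts @ np.asarray(R, dtype=float).T + np.asarray(tvec, dtype=float).reshape(3)
    uvw = cam @ np.asarray(camera_matrix, dtype=float).T  # homogeneous pixel coordinates
    return uvw[:, :2] / uvw[:, 2:3]


K = np.array([[800.0, 0.0, 960.0],
              [0.0, 800.0, 540.0],
              [0.0, 0.0, 1.0]])  # hypothetical intrinsics
R = np.eye(3)                    # hypothetical identity rotation
tvec = [0.0, 0.0, 100.0]         # marker 100 cm in front of the camera
print(project_axes(R, tvec, K, length=10.0))
```

With an identity rotation the z axis points straight along the optical axis, so its tip projects onto the origin; in real frames the marker is usually tilted and all three axes become visible.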