To start: JetPack 4.6 is out.

1. Resources for Jetson Embedded Systems

2. Flash the L4T Release

2.1 Untar Some Required Tarballs

```
➜ AGX tar xvf Jetson_Linux_R32.6.1_aarch64.tbz2
Linux_for_Tegra/
Linux_for_Tegra/jetson-agx-xavier-industrial.conf
Linux_for_Tegra/jetson-xavier-nx-devkit-qspi.conf
Linux_for_Tegra/p2972.conf
Linux_for_Tegra/source/
Linux_for_Tegra/source/nv_src_build.sh
Linux_for_Tegra/p2822-0000+p2888-0008-maxn.conf
Linux_for_Tegra/jetson-agx-xavier-devkit.conf
Linux_for_Tegra/p3509-0000+p3636-0001.conf
......
➜ cd Linux_for_Tegra
➜ Linux_for_Tegra ls
apply_binaries.sh                      jetson-xavier-as-8gb.conf            nv_tools                                  p2972-as-galen-8gb.conf
bootloader                             jetson-xavier-as-xavier-nx.conf      p2597-0000+p3310-1000-as-p3489-0888.conf  p2972.conf
build_l4t_bup.sh                       jetson-xavier.conf                   p2597-0000+p3310-1000.conf                p3449-0000+p3668-0000-qspi-sd.conf
clara-agx-xavier-devkit.conf           jetson-xavier-maxn.conf              p2597-0000+p3489-0000-ucm1.conf           p3449-0000+p3668-0001-qspi-emmc.conf
e3900-0000+p2888-0004-b00.conf         jetson-xavier-nx-devkit.conf         p2597-0000+p3489-0000-ucm2.conf           p3509-0000+p3636-0001.conf
flash.sh                               jetson-xavier-nx-devkit-emmc.conf    p2597-0000+p3489-0888.conf                p3509-0000+p3668-0000-qspi.conf
jetson-agx-xavier-devkit.conf          jetson-xavier-nx-devkit-qspi.conf    p2771-0000.conf.common                    p3509-0000+p3668-0000-qspi-sd.conf
jetson-agx-xavier-ind-noecc.conf       jetson-xavier-nx-devkit-tx2-nx.conf  p2771-0000-dsi-hdmi-dp.conf               p3509-0000+p3668-0001-qspi-emmc.conf
jetson-agx-xavier-industrial.conf      jetson-xavier-slvs-ec.conf           p2822-0000+p2888-0001.conf                p3636.conf.common
jetson-agx-xavier-industrial-mxn.conf  kernel                               p2822-0000+p2888-0004.conf                p3668.conf.common
jetson-tx2-4GB.conf                    l4t_generate_soc_bup.sh              p2822-0000+p2888-0008.conf                README_Autoflash.txt
jetson-tx2-as-4GB.conf                 l4t_sign_image.sh                    p2822-0000+p2888-0008-maxn.conf           README_Massflash.txt
jetson-tx2.conf                        LICENSE.sce_t194                     p2822-0000+p2888-0008-noecc.conf          rootfs
jetson-tx2-devkit-4gb.conf             nvautoflash.sh                       p2822+p2888-0001-as-p3668-0001.conf       source
jetson-tx2-devkit.conf                 nvmassflashgen.sh                    p2972-0000.conf.common                    source_sync.sh
jetson-tx2-devkit-tx2i.conf            nvsdkmanager_flash.sh                p2972-0000-devkit-maxn.conf               tools
jetson-tx2i.conf                       nv_tegra                             p2972-0000-devkit-slvs-ec.conf
```
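Before moving on, it can be worth confirming that the extraction produced a usable tree. The following is a minimal sketch and not part of L4T: `check_l4t_tree` is a hypothetical helper, and the entries it checks are simply a few names picked from the `ls` listing above (including the AGX Xavier config used later in this post).

```shell
# Hypothetical helper: sanity-check an extracted Linux_for_Tegra directory.
# The entry names are taken from the `ls` listing of R32.6.1; adjust as needed.
check_l4t_tree() {
    local dir="${1:-Linux_for_Tegra}" missing=0 entry script
    for entry in flash.sh apply_binaries.sh bootloader kernel rootfs \
                 jetson-agx-xavier-devkit.conf; do
        if [ ! -e "$dir/$entry" ]; then
            echo "missing: $dir/$entry" >&2
            missing=1
        fi
    done
    # The two scripts are run directly later on, so they must be executable.
    for script in flash.sh apply_binaries.sh; do
        if [ -e "$dir/$script" ] && [ ! -x "$dir/$script" ]; then
            echo "not executable: $dir/$script" >&2
            missing=1
        fi
    done
    return "$missing"
}
```

For example, from the AGX directory: `check_l4t_tree Linux_for_Tegra && echo "tree looks complete"`.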

```
➜ Linux_for_Tegra cd rootfs
➜ rootfs sudo tar xvf ../../Tegra_Linux_Sample-Root-Filesystem_R32.6.1_aarch64.tbz2
bin/
bin/ping6
bin/bunzip2
bin/gunzip
bin/search-path
bin/ping4
bin/systemd-sysusers
bin/zegrep
bin/zdiff
bin/blockdev-wipe
bin/ntfsfix
bin/ntfscmp
bin/ntfscat
bin/dir
bin/lsblk
......
➜ rootfs ll
total 96K
drwxr-xr-x   2 root    root    4.0K Jul 21 23:55 bin
drwxr-xr-x   3 root    root     12K Aug 11 10:11 boot
drwxr-xr-x   2 root    root    4.0K Aug 11 10:10 dev
drwxr-xr-x 145 root    root     12K Aug 11 10:11 etc
drwxr-xr-x   2 root    root    4.0K Apr 24  2018 home
drwxr-xr-x  21 root    root    4.0K Aug 11 10:09 lib
drwxr-xr-x   2 root    root    4.0K Aug  5  2018 media
drwxr-xr-x   2 root    root    4.0K Apr 26  2018 mnt
drwxr-xr-x   4 root    root    4.0K Aug 11 10:09 opt
drwxr-xr-x   2 root    root    4.0K Apr 24  2018 proc
-rw-rw-r--   1 lvision lvision   62 Jul 26 12:29 README.txt
drwx------   2 root    root    4.0K Apr 26  2018 root
drwxr-xr-x  17 root    root    4.0K Nov 30  2020 run
drwxr-xr-x   2 root    root    4.0K Jul  6 21:01 sbin
drwxr-xr-x   2 root    root    4.0K May 11  2018 snap
drwxr-xr-x   2 root    root    4.0K Apr 26  2018 srv
drwxr-xr-x   2 root    root    4.0K Apr 24  2018 sys
drwxrwxrwt   2 root    root    4.0K Aug 11 10:10 tmp
drwxr-xr-x  11 root    root    4.0K May 21  2018 usr
drwxr-xr-x  15 root    root    4.0K Aug 11 10:09 var
```
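The rootfs is extracted with sudo so that the files keep their recorded root ownership (visible as `root root` in the `ll` listing above): when GNU tar runs as root it restores the archive's owners and permissions by default. The sketch below wraps that step and then checks for a handful of top-level directories from the listing. Both function names are hypothetical, and the directory list is an assumption drawn from the listing, not an official requirement.

```shell
# Hypothetical helpers; run extract_rootfs as root (e.g. via sudo) as above.
extract_rootfs() {
    local tarball="$1" dest="$2"
    mkdir -p "$dest" || return 1
    # -x extract, -p keep permissions, -f archive file.
    # GNU tar detects the bzip2 compression of the .tbz2 on its own.
    tar -xpf "$tarball" -C "$dest"
}

rootfs_looks_complete() {
    local dest="$1" d
    # A few top-level directories seen in the `ll` output above.
    for d in bin boot dev etc lib usr var; do
        [ -d "$dest/$d" ] || { echo "missing: $dest/$d" >&2; return 1; }
    done
}
```

Sourced into a root shell, usage would look like `extract_rootfs ../Tegra_Linux_Sample-Root-Filesystem_R32.6.1_aarch64.tbz2 rootfs && rootfs_looks_complete rootfs`, run from inside Linux_for_Tegra.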

2.1.3 Apply Binaries

```
➜ rootfs cd ..
➜ Linux_for_Tegra sudo ./apply_binaries.sh
Using rootfs directory of: /media/lvision/Sabrent/Downloads/nvidia/jetson/jetpack/Linux_for_Tegra/rootfs
Installing extlinux.conf into /boot/extlinux in target rootfs
/media/lvision/Sabrent/Downloads/nvidia/jetson/jetpack/Linux_for_Tegra/nv_tegra/nv-apply-debs.sh
Root file system directory is /media/lvision/Sabrent/Downloads/nvidia/jetson/jetpack/Linux_for_Tegra/rootfs
Copying public debian packages to rootfs
Start L4T BSP package installation
QEMU binary is not available, looking for QEMU from host system
Found /usr/bin/qemu-aarch64-static
Installing QEMU binary in rootfs
/media/lvision/Sabrent/Downloads/nvidia/jetson/jetpack/Linux_for_Tegra/rootfs /media/lvision/Sabrent/Downloads/nvidia/jetson/jetpack/Linux_for_Tegra
Installing BSP Debian packages in /media/lvision/Sabrent/Downloads/nvidia/jetson/jetpack/Linux_for_Tegra/rootfs
Selecting previously unselected package nvidia-l4t-core.
(Reading database ... 142475 files and directories currently installed.)
Preparing to unpack .../nvidia-l4t-core_32.6.1-20210726122859_arm64.deb ...
Pre-installing... skip compatibility checking.
Unpacking nvidia-l4t-core (32.6.1-20210726122859) ...
Setting up nvidia-l4t-core (32.6.1-20210726122859) ...
Selecting previously unselected package jetson-gpio-common.
(Reading database ... 142523 files and directories currently installed.)
Preparing to unpack .../jetson-gpio-common_2.0.17_arm64.deb ...
Unpacking jetson-gpio-common (2.0.17) ...
Selecting previously unselected package python3-jetson-gpio.
Preparing to unpack .../python3-jetson-gpio_2.0.17_arm64.deb ...
Unpacking python3-jetson-gpio (2.0.17) ...
Selecting previously unselected package python-jetson-gpio.
Preparing to unpack .../python-jetson-gpio_2.0.17_arm64.deb ...
Unpacking python-jetson-gpio (2.0.17) ...
Selecting previously unselected package nvidia-l4t-3d-core.
Preparing to unpack .../nvidia-l4t-3d-core_32.6.1-20210726122859_arm64.deb ...
Unpacking nvidia-l4t-3d-core (32.6.1-20210726122859) ...
Selecting previously unselected package nvidia-l4t-apt-source.
Preparing to unpack .../nvidia-l4t-apt-source_32.6.1-20210726122859_arm64.deb ...
Unpacking nvidia-l4t-apt-source (32.6.1-20210726122859) ...
Selecting previously unselected package nvidia-l4t-camera.
Preparing to unpack .../nvidia-l4t-camera_32.6.1-20210726122859_arm64.deb ...
Unpacking nvidia-l4t-camera (32.6.1-20210726122859) ...
Selecting previously unselected package nvidia-l4t-configs.
Preparing to unpack .../nvidia-l4t-configs_32.6.1-20210726122859_arm64.deb ...
Unpacking nvidia-l4t-configs (32.6.1-20210726122859) ...
Selecting previously unselected package nvidia-l4t-cuda.
Preparing to unpack .../nvidia-l4t-cuda_32.6.1-20210726122859_arm64.deb ...
Unpacking nvidia-l4t-cuda (32.6.1-20210726122859) ...
Selecting previously unselected package nvidia-l4t-firmware.
Preparing to unpack .../nvidia-l4t-firmware_32.6.1-20210726122859_arm64.deb ...
Unpacking nvidia-l4t-firmware (32.6.1-20210726122859) ...
Selecting previously unselected package nvidia-l4t-gputools.
Preparing to unpack .../nvidia-l4t-gputools_32.6.1-20210726122859_arm64.deb ...
Unpacking nvidia-l4t-gputools (32.6.1-20210726122859) ...
Selecting previously unselected package nvidia-l4t-graphics-demos.
Preparing to unpack .../nvidia-l4t-graphics-demos_32.6.1-20210726122859_arm64.deb ...
Unpacking nvidia-l4t-graphics-demos (32.6.1-20210726122859) ...
Selecting previously unselected package nvidia-l4t-gstreamer.
Preparing to unpack .../nvidia-l4t-gstreamer_32.6.1-20210726122859_arm64.deb ...
Unpacking nvidia-l4t-gstreamer (32.6.1-20210726122859) ...
Selecting previously unselected package nvidia-l4t-init.
Preparing to unpack .../nvidia-l4t-init_32.6.1-20210726122859_arm64.deb ...
Unpacking nvidia-l4t-init (32.6.1-20210726122859) ...
Selecting previously unselected package nvidia-l4t-initrd.
Preparing to unpack .../nvidia-l4t-initrd_32.6.1-20210726122859_arm64.deb ...
Unpacking nvidia-l4t-initrd (32.6.1-20210726122859) ...
Selecting previously unselected package nvidia-l4t-jetson-io.
Preparing to unpack .../nvidia-l4t-jetson-io_32.6.1-20210726122859_arm64.deb ...
Unpacking nvidia-l4t-jetson-io (32.6.1-20210726122859) ...
Selecting previously unselected package nvidia-l4t-libvulkan.
Preparing to unpack .../nvidia-l4t-libvulkan_32.6.1-20210726122859_arm64.deb ...
Unpacking nvidia-l4t-libvulkan (32.6.1-20210726122859) ...
Selecting previously unselected package nvidia-l4t-multimedia.
Preparing to unpack .../nvidia-l4t-multimedia_32.6.1-20210726122859_arm64.deb ...
Unpacking nvidia-l4t-multimedia (32.6.1-20210726122859) ...
Selecting previously unselected package nvidia-l4t-multimedia-utils.
Preparing to unpack .../nvidia-l4t-multimedia-utils_32.6.1-20210726122859_arm64.deb ...
Unpacking nvidia-l4t-multimedia-utils (32.6.1-20210726122859) ...
Selecting previously unselected package nvidia-l4t-oem-config.
Preparing to unpack .../nvidia-l4t-oem-config_32.6.1-20210726122859_arm64.deb ...
Unpacking nvidia-l4t-oem-config (32.6.1-20210726122859) ...
Selecting previously unselected package nvidia-l4t-tools.
Preparing to unpack .../nvidia-l4t-tools_32.6.1-20210726122859_arm64.deb ...
Unpacking nvidia-l4t-tools (32.6.1-20210726122859) ...
Selecting previously unselected package nvidia-l4t-wayland.
Preparing to unpack .../nvidia-l4t-wayland_32.6.1-20210726122859_arm64.deb ...
Unpacking nvidia-l4t-wayland (32.6.1-20210726122859) ...
Selecting previously unselected package nvidia-l4t-weston.
Preparing to unpack .../nvidia-l4t-weston_32.6.1-20210726122859_arm64.deb ...
Unpacking nvidia-l4t-weston (32.6.1-20210726122859) ...
Selecting previously unselected package nvidia-l4t-x11.
Preparing to unpack .../nvidia-l4t-x11_32.6.1-20210726122859_arm64.deb ...
Unpacking nvidia-l4t-x11 (32.6.1-20210726122859) ...
Selecting previously unselected package nvidia-l4t-xusb-firmware.
Preparing to unpack .../nvidia-l4t-xusb-firmware_32.6.1-20210726122859_arm64.deb ...
Unpacking nvidia-l4t-xusb-firmware (32.6.1-20210726122859) ...
Selecting previously unselected package nvidia-l4t-kernel.
Preparing to unpack .../nvidia-l4t-kernel_4.9.253-tegra-32.6.1-20210726122859_arm64.deb ...
Unpacking nvidia-l4t-kernel (4.9.253-tegra-32.6.1-20210726122859) ...
Selecting previously unselected package nvidia-l4t-kernel-dtbs.
Preparing to unpack .../nvidia-l4t-kernel-dtbs_4.9.253-tegra-32.6.1-20210726122859_arm64.deb ...
Unpacking nvidia-l4t-kernel-dtbs (4.9.253-tegra-32.6.1-20210726122859) ...
Selecting previously unselected package nvidia-l4t-kernel-headers.
Preparing to unpack .../nvidia-l4t-kernel-headers_4.9.253-tegra-32.6.1-20210726122859_arm64.deb ...
Unpacking nvidia-l4t-kernel-headers (4.9.253-tegra-32.6.1-20210726122859) ...
Selecting previously unselected package nvidia-l4t-bootloader.
Preparing to unpack .../nvidia-l4t-bootloader_32.6.1-20210726122859_arm64.deb ...
Unpacking nvidia-l4t-bootloader (32.6.1-20210726122859) ...
Setting up jetson-gpio-common (2.0.17) ...
Setting up python3-jetson-gpio (2.0.17) ...
Setting up python-jetson-gpio (2.0.17) ...
Setting up nvidia-l4t-apt-source (32.6.1-20210726122859) ...
Pre-installing... skip changing source list.
Setting up nvidia-l4t-configs (32.6.1-20210726122859) ...
Setting up nvidia-l4t-firmware (32.6.1-20210726122859) ...
Setting up nvidia-l4t-gputools (32.6.1-20210726122859) ...
Setting up nvidia-l4t-init (32.6.1-20210726122859) ...
Setting up nvidia-l4t-libvulkan (32.6.1-20210726122859) ...
Setting up nvidia-l4t-multimedia-utils (32.6.1-20210726122859) ...
Setting up nvidia-l4t-oem-config (32.6.1-20210726122859) ...
Setting up nvidia-l4t-tools (32.6.1-20210726122859) ...
Setting up nvidia-l4t-wayland (32.6.1-20210726122859) ...
Setting up nvidia-l4t-weston (32.6.1-20210726122859) ...
Setting up nvidia-l4t-x11 (32.6.1-20210726122859) ...
Setting up nvidia-l4t-xusb-firmware (32.6.1-20210726122859) ...
Pre-installing xusb firmware package, skip flashing
Setting up nvidia-l4t-kernel (4.9.253-tegra-32.6.1-20210726122859) ...
Using the existing boot entry 'primary'
Pre-installing kernel package, skip flashing
Setting up nvidia-l4t-kernel-dtbs (4.9.253-tegra-32.6.1-20210726122859) ...
Pre-installing kernel-dtbs package, skip flashing
Setting up nvidia-l4t-kernel-headers (4.9.253-tegra-32.6.1-20210726122859) ...
Setting up nvidia-l4t-bootloader (32.6.1-20210726122859) ...
Pre-installing bootloader package, skip flashing
Setting up nvidia-l4t-3d-core (32.6.1-20210726122859) ...
Setting up nvidia-l4t-cuda (32.6.1-20210726122859) ...
Setting up nvidia-l4t-graphics-demos (32.6.1-20210726122859) ...
Setting up nvidia-l4t-initrd (32.6.1-20210726122859) ...
Pre-installing initrd package, skip flashing
Setting up nvidia-l4t-jetson-io (32.6.1-20210726122859) ...
Setting up nvidia-l4t-multimedia (32.6.1-20210726122859) ...
Setting up nvidia-l4t-camera (32.6.1-20210726122859) ...
Setting up nvidia-l4t-gstreamer (32.6.1-20210726122859) ...
Processing triggers for nvidia-l4t-kernel (4.9.253-tegra-32.6.1-20210726122859) ...
Processing triggers for libc-bin (2.27-3ubuntu1.4) ...
/media/lvision/Sabrent/Downloads/nvidia/jetson/jetpack/Linux_for_Tegra
Removing QEMU binary from rootfs
Removing stashed Debian packages from rootfs
L4T BSP package installation completed!
Rename ubuntu.desktop --> ux-ubuntu.desktop
Disabling NetworkManager-wait-online.service
Disable the ondemand service by changing the runlevels to 'K'
Success!
```
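The log ends with "L4T BSP package installation completed!" and "Success!", and near the top it reports installing extlinux.conf into /boot/extlinux in the target rootfs. If the output is saved to a file, those three facts can be checked mechanically. A minimal sketch, assuming the output was captured with something like `sudo ./apply_binaries.sh 2>&1 | tee apply_binaries.log`; the function name and log file name are hypothetical.

```shell
# Hypothetical check of a saved apply_binaries.sh log plus the target rootfs.
apply_binaries_succeeded() {
    local log="$1" rootfs="$2"
    # Marker strings copied verbatim from the R32.6.1 log above (fixed-string match).
    grep -qF 'L4T BSP package installation completed!' "$log" || return 1
    grep -qxF 'Success!' "$log" || return 1
    # The script reports installing extlinux.conf into /boot/extlinux.
    [ -f "$rootfs/boot/extlinux/extlinux.conf" ]
}
```

Usage from Linux_for_Tegra: `apply_binaries_succeeded apply_binaries.log rootfs && echo "rootfs ready for flashing"`.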

2.2 Flashing Process

2.2.1 Force Recovery mode

Let's first follow the L4T Documentation Quick Start Guide and put the Jetson AGX Xavier into Force Recovery mode. To double-check, we run the following command on the host computer:

```
➜ ~ lsusb | grep NVIDIA
Bus 011 Device 019: ID 0955:7019 NVIDIA Corp. USB2.0 Hub
```
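In the `ID 0955:7019` field, 0955 is NVIDIA's USB vendor ID and 7019 is the product ID. A small filter to pull the product ID out of `lsusb` output could look like this; it is a sketch, and `nvidia_usb_pid` is a hypothetical name.

```shell
# Hypothetical filter: read lsusb lines on stdin and print the product ID
# (four hex digits) of every device with NVIDIA's vendor ID 0955.
nvidia_usb_pid() {
    sed -n 's/.*ID 0955:\([0-9A-Fa-f]\{4\}\).*/\1/p'
}
```

On the host, `lsusb | nvidia_usb_pid` prints 7019 for the device above; an empty result means no NVIDIA USB device was detected at all.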

From the product ID 7019, it's clear that I've got a Jetson AGX Xavier, either a P2888-0001 or a P2888-0004.
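That identification step can be written as a simple lookup. This is a sketch: only 7019 is mapped because it is the only product ID discussed in this post; other modules' IDs are listed in the L4T Quick Start Guide and are deliberately not guessed here. The function name is hypothetical.

```shell
# Hypothetical lookup from recovery-mode USB product ID to module.
# Only the ID covered in this post is mapped; anything else is reported as unknown.
jetson_module_for_pid() {
    case "$1" in
        7019) echo "Jetson AGX Xavier (P2888-0001 or P2888-0004)" ;;
        *)    echo "unknown product ID: $1" >&2; return 1 ;;
    esac
}
```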

2.2.2 Flash onto eMMC

As mentioned, I've got either a P2888-0001 or a P2888-0004, so we should use the configuration jetson-agx-xavier-devkit.

```
➜ Linux_for_Tegra ll flash.sh
-rwxrwxr-x 1 lvision lvision 106K Jul 26 12:29 flash.sh
```
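Choosing the configuration and confirming that the files are in place can be combined into a small pre-flight check. A minimal sketch under two assumptions: only the two part numbers from this post are mapped, and flash.sh looks for a `<name>.conf` file in Linux_for_Tegra for the configuration name it is given (jetson-agx-xavier-devkit.conf does appear in the `ls` listing earlier). Both function names are hypothetical.

```shell
# Hypothetical mapping from module part number to flash.sh configuration name.
flash_config_for() {
    case "$1" in
        P2888-0001|P2888-0004) echo "jetson-agx-xavier-devkit" ;;
        *) echo "no config known for $1" >&2; return 1 ;;
    esac
}

# Hypothetical pre-flight check: prints the config name if everything is present.
ready_to_flash() {
    local l4t_dir="$1" part="$2" cfg
    cfg=$(flash_config_for "$part") || return 1
    # Assumption: flash.sh reads <config name>.conf from the Linux_for_Tegra directory.
    [ -f "$l4t_dir/$cfg.conf" ] || { echo "missing $cfg.conf" >&2; return 1; }
    [ -x "$l4t_dir/flash.sh" ] || { echo "flash.sh is not executable" >&2; return 1; }
    echo "$cfg"
}
```

For this board, `ready_to_flash . P2888-0004` run inside Linux_for_Tegra should print `jetson-agx-xavier-devkit`.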

2.2.2.1 Flashing

Now, let's start flashing. Please bear in mind that the device name passed to flash.sh refers to storage on the Jetson AGX Xavier, not on the host: mmcblk0p1 is the eMMC on the Jetson AGX Xavier, and mmcblk1p1 is NOT the SD card on your host computer but the SD card inserted into the Jetson AGX Xavier. In other words, running ls /dev/mmc* on the host computer may not display anything at all.
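Because the last argument of flash.sh names a partition on the Jetson rather than a host device, it can help to keep that mapping explicit. The sketch below only prints the command, so it is safe to run anywhere. The BOARDID, FAB, BOARDSKU and BOARDREV values are copied from the invocation used in this post and describe this particular board, so treat them as placeholders for yours. Both function names are hypothetical.

```shell
# Hypothetical mapping from storage choice to the target name flash.sh expects.
jetson_rootdev() {
    case "$1" in
        emmc) echo "mmcblk0p1" ;;   # eMMC on the Jetson AGX Xavier
        sd)   echo "mmcblk1p1" ;;   # SD card inserted into the Jetson, not the host
        *)    echo "unknown storage: $1" >&2; return 1 ;;
    esac
}

# Prints (does not run) the flash command; board values are this post's, not defaults.
print_flash_cmd() {
    local dev
    dev=$(jetson_rootdev "$1") || return 1
    echo "sudo BOARDID=2888 FAB=400 BOARDSKU=0004 BOARDREV=K.0 ./flash.sh jetson-agx-xavier-devkit $dev"
}
```

`print_flash_cmd emmc` reproduces the eMMC command used here; copy and run the printed line yourself once you have checked it.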

➜ Linux_for_Tegra sudo BOARDID=2888 FAB=400 BOARDSKU=0004 BOARDREV=K.0 ./flash.sh jetson-agx-xavier-devkit mmcblk0p1
#
# L4T BSP Information:
# R32 , REVISION: 6.1
#
# Target Board Information:
# Name: jetson-agx-xavier-devkit, Board Family: t186ref, SoC: Tegra 194,
# OpMode: production, Boot Authentication: NS,
# Disk encryption: disabled ,
#
copying soft_fuses(....../Linux_for_Tegra/bootloader/t186ref/BCT/tegra194-mb1-soft-fuses-l4t.cfg)... done.
./tegraflash.py --chip 0x19 --applet "....../Linux_for_Tegra/bootloader/mb1_t194_prod.bin" --skipuid --soft_fuses tegra194-mb1-soft-fuses-l4t.cfg --bins "mb2_applet nvtboot_applet_t194.bin" --cmd "dump eeprom boardinfo cvm.bin;reboot recovery"
Welcome to Tegra Flash
version 1.0.0
Type ? or help for help and q or quit to exit
Use ! to execute system commands
[ 0.0070 ] Generating RCM messages
[ 0.0078 ] tegrahost_v2 --chip 0x19 0 --magicid MB1B --appendsigheader ....../Linux_for_Tegra/bootloader/mb1_t194_prod.bin zerosbk
[ 0.0087 ] Header already present for ....../Linux_for_Tegra/bootloader/mb1_t194_prod.bin
[ 0.0118 ]
[ 0.0130 ] tegrasign_v2 --key None --getmode mode.txt
[ 0.0140 ] Assuming zero filled SBK key
[ 0.0142 ]
[ 0.0160 ] tegrasign_v2 --key None --file ....../Linux_for_Tegra/bootloader/mb1_t194_prod_sigheader.bin --offset 2960 --length 1136 --pubkeyhash pub_key.key
[ 0.0171 ] Assuming zero filled SBK key
[ 0.0177 ]
[ 0.0189 ] tegrahost_v2 --chip 0x19 0 --updatesigheader ....../Linux_for_Tegra/bootloader/mb1_t194_prod_sigheader.bin ....../Linux_for_Tegra/bootloader/mb1_t194_prod_sigheader.hash zerosbk
[ 0.0231 ]
[ 0.0244 ] tegrabct_v2 --chip 0x19 0 --sfuse tegra194-mb1-soft-fuses-l4t.cfg.pdf sfuse.bin
[ 0.0255 ]
[ 0.0265 ] tegrarcm_v2 --listrcm rcm_list.xml --chip 0x19 0 --sfuses sfuse.bin --download rcm ....../Linux_for_Tegra/bootloader/mb1_t194_prod_sigheader.bin 0 0
[ 0.0275 ] RCM 0 is saved as rcm_0.rcm
[ 0.0307 ] RCM 1 is saved as rcm_1.rcm
[ 0.0308 ] RCM 2 is saved as rcm_2.rcm
[ 0.0308 ] List of rcm files are saved in rcm_list.xml
[ 0.0308 ]
[ 0.0308 ] Signing RCM messages
[ 0.0320 ] tegrasign_v2 --key None --list rcm_list.xml --pubkeyhash pub_key.key --getmontgomeryvalues montgomery.bin
[ 0.0330 ] Assuming zero filled SBK key
[ 0.0338 ]
[ 0.0338 ] Copying signature to RCM mesages
[ 0.0348 ] tegrarcm_v2 --chip 0x19 0 --updatesig rcm_list_signed.xml
[ 0.0367 ]
[ 0.0367 ] Boot Rom communication
[ 0.0377 ] tegrarcm_v2 --chip 0x19 0 --rcm rcm_list_signed.xml --skipuid
[ 0.0385 ] RCM version 0X190001
[ 0.0397 ] Boot Rom communication completed
[ 1.0554 ]
[ 2.0583 ] tegrarcm_v2 --isapplet
[ 2.0594 ] Applet version 01.00.0000
[ 2.0614 ]
[ 2.0627 ] tegrarcm_v2 --ismb2
[ 2.1006 ]
[ 2.1020 ] tegrahost_v2 --chip 0x19 --align nvtboot_applet_t194.bin
[ 2.1031 ]
[ 2.1043 ] tegrahost_v2 --chip 0x19 0 --magicid PLDT --appendsigheader nvtboot_applet_t194.bin zerosbk
[ 2.1054 ] adding BCH for nvtboot_applet_t194.bin
[ 2.1077 ]
[ 2.1096 ] tegrasign_v2 --key None --list nvtboot_applet_t194_sigheader.bin_list.xml --pubkeyhash pub_key.key
[ 2.1106 ] Assuming zero filled SBK key
[ 2.1113 ]
[ 2.1129 ] tegrahost_v2 --chip 0x19 0 --updatesigheader nvtboot_applet_t194_sigheader.bin.encrypt nvtboot_applet_t194_sigheader.bin.hash zerosbk
[ 2.1154 ]
[ 2.1165 ] tegrarcm_v2 --download mb2 nvtboot_applet_t194_sigheader.bin.encrypt
[ 2.1175 ] Applet version 01.00.0000
[ 2.1366 ] Sending mb2
[ 2.1366 ] [................................................] 100%
[ 2.1497 ]
[ 2.1510 ] tegrarcm_v2 --boot recovery
[ 2.1521 ] Applet version 01.00.0000
[ 2.1576 ]
[ 3.1601 ] tegrarcm_v2 --isapplet
[ 3.1643 ]
[ 3.1656 ] tegrarcm_v2 --ismb2
[ 3.1666 ] MB2 Applet version 01.00.0000
[ 3.2008 ]
[ 3.2021 ] tegrarcm_v2 --ismb2
[ 3.2031 ] MB2 Applet version 01.00.0000
[ 3.2368 ]
[ 3.2380 ] Retrieving board information
[ 3.2390 ] tegrarcm_v2 --oem platformdetails chip chip_info.bin
[ 3.2401 ] MB2 Applet version 01.00.0000
[ 3.2585 ] Saved platform info in chip_info.bin
[ 3.2625 ] Chip minor revision: 2
[ 3.2627 ] Bootrom revision: 0xf
[ 3.2628 ] Ram code: 0x2
[ 3.2629 ] Chip sku: 0xd0
[ 3.2630 ] Chip Sample: non es
[ 3.2631 ]
[ 3.2634 ] Retrieving EEPROM data
[ 3.2635 ] tegrarcm_v2 --oem platformdetails eeprom cvm ....../Linux_for_Tegra/bootloader/cvm.bin
[ 3.2647 ] MB2 Applet version 01.00.0000
[ 3.2986 ] Saved platform info in ....../Linux_for_Tegra/bootloader/cvm.bin
[ 3.3326 ]
[ 3.3327 ] Rebooting to recovery mode
[ 3.3339 ] tegrarcm_v2 --ismb2
[ 3.3348 ] MB2 Applet version 01.00.0000
[ 3.3369 ]
[ 3.3369 ] Rebooting to recovery mode
[ 3.3382 ] tegrarcm_v2 --reboot recovery
[ 3.3394 ] MB2 Applet version 01.00.0000
[ 3.3792 ]
Board ID(2888) version(400) sku(0004) revision(K.0)
Copy ....../Linux_for_Tegra/kernel/dtb/tegra194-p2888-0001-p2822-0000.dtb to ....../Linux_for_Tegra/kernel/dtb/tegra194-p2888-0001-p2822-0000.dtb.rec
copying bctfile(....../Linux_for_Tegra/bootloader/t186ref/BCT/tegra194-mb1-bct-memcfg-p2888.cfg)... done.
copying bctfile1(....../Linux_for_Tegra/bootloader/t186ref/BCT/tegra194-memcfg-sw-override.cfg)... done.
copying uphy_config(....../Linux_for_Tegra/bootloader/t186ref/BCT/tegra194-mb1-uphy-lane-p2888-0000-p2822-0000.cfg)... done.
copying minratchet_config(....../Linux_for_Tegra/bootloader/t186ref/BCT/tegra194-mb1-bct-ratchet-p2888-0000-p2822-0000.cfg)... done.
copying device_config(....../Linux_for_Tegra/bootloader/t186ref/BCT/tegra19x-mb1-bct-device-sdmmc.cfg)... done.
copying misc_cold_boot_config(....../Linux_for_Tegra/bootloader/t186ref/BCT/tegra194-mb1-bct-misc-l4t.cfg)... done.
copying misc_config(....../Linux_for_Tegra/bootloader/t186ref/BCT/tegra194-mb1-bct-misc-flash.cfg)... done.
copying pinmux_config(....../Linux_for_Tegra/bootloader/t186ref/BCT/tegra19x-mb1-pinmux-p2888-0000-a04-p2822-0000-b01.cfg)... done.
copying gpioint_config(....../Linux_for_Tegra/bootloader/t186ref/BCT/tegra194-mb1-bct-gpioint-p2888-0000-p2822-0000.cfg)... done.
copying pmic_config(....../Linux_for_Tegra/bootloader/t186ref/BCT/tegra194-mb1-bct-pmic-p2888-0001-a04-E-0-p2822-0000.cfg)... done.
copying pmc_config(....../Linux_for_Tegra/bootloader/t186ref/BCT/tegra19x-mb1-padvoltage-p2888-0000-a00-p2822-0000-a00.cfg)... done.
copying prod_config(....../Linux_for_Tegra/bootloader/t186ref/BCT/tegra19x-mb1-prod-p2888-0000-p2822-0000.cfg)... done.
copying scr_config(....../Linux_for_Tegra/bootloader/t186ref/BCT/tegra194-mb1-bct-scr-cbb-mini.cfg)... done.
copying scr_cold_boot_config(....../Linux_for_Tegra/bootloader/t186ref/BCT/tegra194-mb1-bct-scr-cbb-mini.cfg)... done.
copying bootrom_config(....../Linux_for_Tegra/bootloader/t186ref/BCT/tegra194-mb1-bct-reset-p2888-0000-p2822-0000.cfg)... done.
copying dev_params(....../Linux_for_Tegra/bootloader/t186ref/BCT/tegra194-br-bct-sdmmc.cfg)... done.
Existing bootloader(....../Linux_for_Tegra/bootloader/nvtboot_cpu_t194.bin) reused.
copying initrd(....../Linux_for_Tegra/bootloader/l4t_initrd.img)... done.
populating kernel to rootfs... done.
....../Linux_for_Tegra/bootloader/tegraflash.py --chip 0x19 --key --cmd sign ....../Linux_for_Tegra/rootfs/boot/Image
Welcome to Tegra Flash
version 1.0.0
Type ? or help for help and q or quit to exit
Use ! to execute system commands
[ 0.0076 ] Generating signature
[ 0.0086 ] tegrasign_v2 --key --getmode mode.txt
[ 0.0093 ] Assuming zero filled SBK key
[ 0.0096 ]
[ 0.0103 ] header_magic: 5614
[ 0.0117 ] tegrahost_v2 --chip 0x19 --align 1_Image
[ 0.0128 ]
[ 0.0138 ] tegrahost_v2 --chip 0x19 0 --magicid DATA --appendsigheader 1_Image zerosbk
[ 0.0147 ] adding BCH for 1_Image
[ 0.4107 ]
[ 0.4128 ] tegrasign_v2 --key --list 1_Image_sigheader_list.xml --pubkeyhash pub_key.key
[ 0.4137 ] Assuming zero filled SBK key
[ 0.4470 ]
[ 0.4485 ] tegrahost_v2 --chip 0x19 0 --updatesigheader 1_Image_sigheader.encrypt 1_Image_sigheader.hash zerosbk
[ 0.6813 ]
[ 0.7005 ] Signed file: ....../Linux_for_Tegra/bootloader/Image_sigheader.encrypt
l4t_sign_image.sh: Generate header for Image_sigheader.encrypt
l4t_sign_image.sh: chip 0x19: add 0x20bf808 to offset 0x8 in sig file
l4t_sign_image.sh: Generate 16-byte-size-aligned base file for Image_sigheader.encrypt
l4t_sign_image.sh: the sign header is saved at ....../Linux_for_Tegra/rootfs/boot/Image.sig
done.
populating initrd to rootfs... done.
....../Linux_for_Tegra/bootloader/tegraflash.py --chip 0x19 --key --cmd sign ....../Linux_for_Tegra/rootfs/boot/initrd
Welcome to Tegra Flash
version 1.0.0
Type ? or help for help and q or quit to exit
Use ! to execute system commands
[ 0.0071 ] Generating signature
[ 0.0079 ] tegrasign_v2 --key --getmode mode.txt
[ 0.0087 ] Assuming zero filled SBK key
[ 0.0089 ]
[ 0.0094 ] header_magic: 1f8b0800
[ 0.0105 ] tegrahost_v2 --chip 0x19 --align 1_initrd
[ 0.0113 ]
[ 0.0122 ] tegrahost_v2 --chip 0x19 0 --magicid DATA --appendsigheader 1_initrd zerosbk
[ 0.0129 ] adding BCH for 1_initrd
[ 0.0981 ]
[ 0.1002 ] tegrasign_v2 --key --list 1_initrd_sigheader_list.xml --pubkeyhash pub_key.key
[ 0.1011 ] Assuming zero filled SBK key
[ 0.1101 ]
[ 0.1112 ] tegrahost_v2 --chip 0x19 0 --updatesigheader 1_initrd_sigheader.encrypt 1_initrd_sigheader.hash zerosbk
[ 0.1549 ]
[ 0.1600 ] Signed file: ....../Linux_for_Tegra/bootloader/initrd_sigheader.encrypt
l4t_sign_image.sh: Generate header for initrd_sigheader.encrypt
l4t_sign_image.sh: chip 0x19: add 0x6e6ce8 to offset 0x8 in sig file
l4t_sign_image.sh: Generate 16-byte-size-aligned base file for initrd_sigheader.encrypt
l4t_sign_image.sh: the sign header is saved at ....../Linux_for_Tegra/rootfs/boot/initrd.sig
done.
populating ....../Linux_for_Tegra/kernel/dtb/tegra194-p2888-0001-p2822-0000.dtb to rootfs... done.
Making Boot image... done.
....../Linux_for_Tegra/bootloader/tegraflash.py --chip 0x19 --key --cmd sign boot.img
Welcome to Tegra Flash
version 1.0.0
Type ? or help for help and q or quit to exit
Use ! to execute system commands
[ 0.0004 ] Generating signature
[ 0.0011 ] tegrasign_v2 --key --getmode mode.txt
[ 0.0017 ] Assuming zero filled SBK key
[ 0.0020 ]
[ 0.0025 ] header_magic: 414e4452
[ 0.0038 ] tegrahost_v2 --chip 0x19 --align 1_boot.img
[ 0.0050 ]
[ 0.0059 ] tegrahost_v2 --chip 0x19 0 --magicid DATA --appendsigheader 1_boot.img zerosbk
[ 0.0068 ] adding BCH for 1_boot.img
[ 0.5130 ]
[ 0.5151 ] tegrasign_v2 --key --list 1_boot_sigheader.img_list.xml --pubkeyhash pub_key.key
[ 0.5160 ] Assuming zero filled SBK key
[ 0.5541 ]
[ 0.5557 ] tegrahost_v2 --chip 0x19 0 --updatesigheader 1_boot_sigheader.img.encrypt 1_boot_sigheader.img.hash zerosbk
[ 0.8122 ]
[ 0.8317 ] Signed file: ....../Linux_for_Tegra/bootloader/temp_user_dir/boot_sigheader.img.encrypt
l4t_sign_image.sh: Generate header for boot_sigheader.img.encrypt
l4t_sign_image.sh: chip 0x19: add 0x27a7800 to offset 0x8 in sig file
l4t_sign_image.sh: Generate 16-byte-size-aligned base file for boot_sigheader.img.encrypt
l4t_sign_image.sh: the signed file is ....../Linux_for_Tegra/bootloader/temp_user_dir/boot_sigheader.img.encrypt
done.
Making recovery ramdisk for recovery image...
Re-generating recovery ramdisk for recovery image...
....../Linux_for_Tegra/bootloader/ramdisk_tmp ....../Linux_for_Tegra/bootloader ....../Linux_for_Tegra
30402 blocks
_BASE_KERNEL_VERSION=4.9.201-tegra
....../Linux_for_Tegra/bootloader/ramdisk_tmp/lib ....../Linux_for_Tegra/bootloader/ramdisk_tmp ....../Linux_for_Tegra/bootloader ....../Linux_for_Tegra
65765 blocks
Making Recovery image...
copying recdtbfile(....../Linux_for_Tegra/kernel/dtb/tegra194-p2888-0001-p2822-0000.dtb.rec)... done.
20+0 records in
20+0 records out
20 bytes copied, 0.00012767 s, 157 kB/s
Existing sosfile(....../Linux_for_Tegra/bootloader/mb1_t194_prod.bin) reused.
Existing tegraboot(....../Linux_for_Tegra/bootloader/nvtboot_t194.bin) reused.
Existing cpu_bootloader(....../Linux_for_Tegra/bootloader/nvtboot_cpu_t194.bin) reused.
Existing mb2blfile(....../Linux_for_Tegra/bootloader/nvtboot_recovery_t194.bin) reused.
Existing mtspreboot(....../Linux_for_Tegra/bootloader/preboot_c10_prod_cr.bin) reused.
Existing mcepreboot(....../Linux_for_Tegra/bootloader/mce_c10_prod_cr.bin) reused.
Existing mtsproper(....../Linux_for_Tegra/bootloader/mts_c10_prod_cr.bin) reused.
Existing mb1file(....../Linux_for_Tegra/bootloader/mb1_t194_prod.bin) reused.
Existing bpffile(....../Linux_for_Tegra/bootloader/bpmp_t194.bin) reused.
copying bpfdtbfile(....../Linux_for_Tegra/bootloader/t186ref/tegra194-a02-bpmp-p2888-a04.dtb)... done.
Existing scefile(....../Linux_for_Tegra/bootloader/camera-rtcpu-sce.img) reused.
Existing camerafw(....../Linux_for_Tegra/bootloader/camera-rtcpu-rce.img) reused.
Existing spefile(....../Linux_for_Tegra/bootloader/spe_t194.bin) reused.
Existing drameccfile(....../Linux_for_Tegra/bootloader/dram-ecc.bin) reused.
Existing badpagefile(....../Linux_for_Tegra/bootloader/badpage.bin) reused.
Existing wb0boot(....../Linux_for_Tegra/bootloader/warmboot_t194_prod.bin) reused.
Existing tosfile(....../Linux_for_Tegra/bootloader/tos-trusty_t194.img) reused.
Existing eksfile(....../Linux_for_Tegra/bootloader/eks.img) reused.
copying soft_fuses(....../Linux_for_Tegra/bootloader/t186ref/BCT/tegra194-mb1-soft-fuses-l4t.cfg)... done.
copying dtbfile(....../Linux_for_Tegra/kernel/dtb/tegra194-p2888-0001-p2822-0000.dtb)... done.
Copying nv_boot_control.conf to rootfs
....../Linux_for_Tegra/bootloader/tegraflash.py --chip 0x19 --key --cmd sign kernel_tegra194-p2888-0001-p2822-0000.dtb
Welcome to Tegra Flash
version 1.0.0
Type ? or help for help and q or quit to exit
Use ! to execute system commands
[ 0.0005 ] Generating signature
[ 0.0013 ] tegrasign_v2 --key --getmode mode.txt
[ 0.0020 ] Assuming zero filled SBK key
[ 0.0023 ]
[ 0.0028 ] header_magic: d00dfeed
[ 0.0041 ] tegrahost_v2 --chip 0x19 --align 1_kernel_tegra194-p2888-0001-p2822-0000.dtb
[ 0.0054 ]
[ 0.0064 ] tegrahost_v2 --chip 0x19 0 --magicid DATA --appendsigheader 1_kernel_tegra194-p2888-0001-p2822-0000.dtb zerosbk
[ 0.0073 ] adding BCH for 1_kernel_tegra194-p2888-0001-p2822-0000.dtb
[ 0.0138 ]
[ 0.0157 ] tegrasign_v2 --key --list 1_kernel_tegra194-p2888-0001-p2822-0000_sigheader.dtb_list.xml --pubkeyhash pub_key.key
[ 0.0166 ] Assuming zero filled SBK key
[ 0.0173 ]
[ 0.0187 ] tegrahost_v2 --chip 0x19 0 --updatesigheader 1_kernel_tegra194-p2888-0001-p2822-0000_sigheader.dtb.encrypt 1_kernel_tegra194-p2888-0001-p2822-0000_sigheader.dtb.hash zerosbk
[ 0.0231 ]
[ 0.0237 ] Signed file: ....../Linux_for_Tegra/bootloader/temp_user_dir/kernel_tegra194-p2888-0001-p2822-0000_sigheader.dtb.encrypt
l4t_sign_image.sh: Generate header for kernel_tegra194-p2888-0001-p2822-0000_sigheader.dtb.encrypt
l4t_sign_image.sh: chip 0x19: add 0x45209 to offset 0x8 in sig file
l4t_sign_image.sh: Generate 16-byte-size-aligned base file for kernel_tegra194-p2888-0001-p2822-0000_sigheader.dtb.encrypt
l4t_sign_image.sh: the sign header is saved at kernel_tegra194-p2888-0001-p2822-0000.dtb.sig
done.
....../Linux_for_Tegra/bootloader/tegraflash.py --chip 0x19 --key --cmd sign kernel_tegra194-p2888-0001-p2822-0000.dtb
Welcome to Tegra Flash
version 1.0.0
Type ? or help for help and q or quit to exit
Use ! to execute system commands
[ 0.0005 ] Generating signature
[ 0.0012 ] tegrasign_v2 --key --getmode mode.txt
[ 0.0020 ] Assuming zero filled SBK key
[ 0.0022 ]
[ 0.0028 ] header_magic: d00dfeed
[ 0.0041 ] tegrahost_v2 --chip 0x19 --align 1_kernel_tegra194-p2888-0001-p2822-0000.dtb
[ 0.0053 ]
[ 0.0063 ] tegrahost_v2 --chip 0x19 0 --magicid DATA --appendsigheader 1_kernel_tegra194-p2888-0001-p2822-0000.dtb zerosbk
[ 0.0072 ] adding BCH for 1_kernel_tegra194-p2888-0001-p2822-0000.dtb
[ 0.0127 ]
[ 0.0148 ] tegrasign_v2 --key --list 1_kernel_tegra194-p2888-0001-p2822-0000_sigheader.dtb_list.xml --pubkeyhash pub_key.key
[ 0.0158 ] Assuming zero filled SBK key
[ 0.0164 ]
[ 0.0178 ] tegrahost_v2 --chip 0x19 0 --updatesigheader 1_kernel_tegra194-p2888-0001-p2822-0000_sigheader.dtb.encrypt 1_kernel_tegra194-p2888-0001-p2822-0000_sigheader.dtb.hash zerosbk
[ 0.0221 ]
[ 0.0227 ] Signed file: ....../Linux_for_Tegra/bootloader/temp_user_dir/kernel_tegra194-p2888-0001-p2822-0000_sigheader.dtb.encrypt
l4t_sign_image.sh: Generate header for kernel_tegra194-p2888-0001-p2822-0000_sigheader.dtb.encrypt
l4t_sign_image.sh: chip 0x19: add 0x45209 to offset 0x8 in sig file
l4t_sign_image.sh: Generate 16-byte-size-aligned base file for kernel_tegra194-p2888-0001-p2822-0000_sigheader.dtb.encrypt
l4t_sign_image.sh: the signed file is ....../Linux_for_Tegra/bootloader/temp_user_dir/kernel_tegra194-p2888-0001-p2822-0000_sigheader.dtb.encrypt
done.
Making system.img...
populating rootfs from ....../Linux_for_Tegra/rootfs ... done.
populating /boot/extlinux/extlinux.conf ... done.
Sync'ing system.img ... done.
Converting RAW image to Sparse image... done.
system.img built successfully.
Existing tbcfile(....../Linux_for_Tegra/bootloader/cboot_t194.bin) reused.
copying tbcdtbfile(....../Linux_for_Tegra/kernel/dtb/tegra194-p2888-0001-p2822-0000.dtb)... done.
copying cfgfile(....../Linux_for_Tegra/bootloader/t186ref/cfg/flash_t194_sdmmc.xml) to flash.xml... done.
Existing flasher(....../Linux_for_Tegra/bootloader/nvtboot_recovery_cpu_t194.bin) reused.
Existing flashapp(....../Linux_for_Tegra/bootloader/tegraflash.py) reused.
./tegraflash.py --bl nvtboot_recovery_cpu_t194.bin --sdram_config tegra194-mb1-bct-memcfg-p2888.cfg,tegra194-memcfg-sw-override.cfg --odmdata 0x9190000 --applet mb1_t194_prod.bin --cmd "flash; reboot" --soft_fuses tegra194-mb1-soft-fuses-l4t.cfg --cfg flash.xml --chip 0x19 --uphy_config tegra194-mb1-uphy-lane-p2888-0000-p2822-0000.cfg --minratchet_config tegra194-mb1-bct-ratchet-p2888-0000-p2822-0000.cfg --device_config tegra19x-mb1-bct-device-sdmmc.cfg --misc_cold_boot_config tegra194-mb1-bct-misc-l4t.cfg --misc_config tegra194-mb1-bct-misc-flash.cfg --pinmux_config tegra19x-mb1-pinmux-p2888-0000-a04-p2822-0000-b01.cfg --gpioint_config tegra194-mb1-bct-gpioint-p2888-0000-p2822-0000.cfg --pmic_config tegra194-mb1-bct-pmic-p2888-0001-a04-E-0-p2822-0000.cfg --pmc_config tegra19x-mb1-padvoltage-p2888-0000-a00-p2822-0000-a00.cfg --prod_config tegra19x-mb1-prod-p2888-0000-p2822-0000.cfg --scr_config tegra194-mb1-bct-scr-cbb-mini.cfg --scr_cold_boot_config tegra194-mb1-bct-scr-cbb-mini.cfg --br_cmd_config tegra194-mb1-bct-reset-p2888-0000-p2822-0000.cfg --dev_params tegra194-br-bct-sdmmc.cfg --bin "mb2_bootloader nvtboot_recovery_t194.bin; mts_preboot preboot_c10_prod_cr.bin; mts_mce mce_c10_prod_cr.bin; mts_proper mts_c10_prod_cr.bin; bpmp_fw bpmp_t194.bin; bpmp_fw_dtb tegra194-a02-bpmp-p2888-a04.dtb; spe_fw spe_t194.bin; tlk tos-trusty_t194.img; eks eks.img; bootloader_dtb tegra194-p2888-0001-p2822-0000.dtb"
saving flash command in ....../Linux_for_Tegra/bootloader/flashcmd.txt
saving Windows flash command to ....../Linux_for_Tegra/bootloader/flash_win.bat
*** Flashing target device started. ***
Welcome to Tegra Flash
version 1.0.0
Type ? or help for help and q or quit to exit
Use !
to execute system commands [ 0.0008 ] tegrasign_v2 --key None --getmode mode.txt [ 0.0015 ] Assuming zero filled SBK key [ 0.0017 ] [ 0.0019 ] Generating RCM messages [ 0.0030 ] tegrahost_v2 --chip 0x19 0 --magicid MB1B --appendsigheader mb1_t194_prod.bin zerosbk [ 0.0039 ] Header already present for mb1_t194_prod.bin [ 0.0069 ] [ 0.0082 ] tegrasign_v2 --key None --getmode mode.txt [ 0.0091 ] Assuming zero filled SBK key [ 0.0093 ] [ 0.0104 ] tegrasign_v2 --key None --file mb1_t194_prod_sigheader.bin --offset 2960 --length 1136 --pubkeyhash pub_key.key [ 0.0112 ] Assuming zero filled SBK key [ 0.0118 ] [ 0.0127 ] tegrahost_v2 --chip 0x19 0 --updatesigheader mb1_t194_prod_sigheader.bin mb1_t194_prod_sigheader.hash zerosbk [ 0.0165 ] [ 0.0173 ] tegrabct_v2 --chip 0x19 0 --sfuse tegra194-mb1-soft-fuses-l4t.cfg sfuse.bin [ 0.0182 ] [ 0.0190 ] tegrabct_v2 --chip 0x19 0 --ratchet_blob ratchet_blob.bin --minratchet tegra194-mb1-bct-ratchet-p2888-0000-p2822-0000.cfg [ 0.0196 ] FwIndex: 1, MinRatchetLevel: 0 [ 0.0199 ] FwIndex: 2, MinRatchetLevel: 0 [ 0.0199 ] FwIndex: 3, MinRatchetLevel: 0 [ 0.0199 ] FwIndex: 4, MinRatchetLevel: 0 [ 0.0199 ] FwIndex: 5, MinRatchetLevel: 0 [ 0.0199 ] FwIndex: 6, MinRatchetLevel: 0 [ 0.0199 ] FwIndex: 7, MinRatchetLevel: 0 [ 0.0199 ] FwIndex: 8, MinRatchetLevel: 0 [ 0.0200 ] FwIndex: 11, MinRatchetLevel: 0 [ 0.0200 ] FwIndex: 12, MinRatchetLevel: 0 [ 0.0200 ] FwIndex: 13, MinRatchetLevel: 0 [ 0.0200 ] FwIndex: 14, MinRatchetLevel: 0 [ 0.0200 ] FwIndex: 15, MinRatchetLevel: 0 [ 0.0200 ] FwIndex: 16, MinRatchetLevel: 0 [ 0.0200 ] FwIndex: 17, MinRatchetLevel: 0 [ 0.0200 ] FwIndex: 18, MinRatchetLevel: 0 [ 0.0200 ] FwIndex: 19, MinRatchetLevel: 0 [ 0.0200 ] FwIndex: 30, MinRatchetLevel: 0 [ 0.0200 ] FwIndex: 31, MinRatchetLevel: 0 [ 0.0200 ] [ 0.0210 ] tegrarcm_v2 --listrcm rcm_list.xml --chip 0x19 0 --sfuses sfuse.bin --download rcm mb1_t194_prod_sigheader.bin 0 0 [ 0.0218 ] RCM 0 is saved as rcm_0.rcm [ 0.0244 ] RCM 1 is saved as rcm_1.rcm [ 
0.0244 ] RCM 2 is saved as rcm_2.rcm [ 0.0244 ] List of rcm files are saved in rcm_list.xml [ 0.0244 ] [ 0.0245 ] Signing RCM messages [ 0.0257 ] tegrasign_v2 --key None --list rcm_list.xml --pubkeyhash pub_key.key --getmontgomeryvalues montgomery.bin [ 0.0266 ] Assuming zero filled SBK key [ 0.0273 ] [ 0.0273 ] Copying signature to RCM mesages [ 0.0285 ] tegrarcm_v2 --chip 0x19 0 --updatesig rcm_list_signed.xml [ 0.0301 ] [ 0.0301 ] Parsing partition layout [ 0.0310 ] tegraparser_v2 --pt flash.xml.tmp [ 0.0325 ] [ 0.0325 ] Creating list of images to be signed [ 0.0334 ] tegrahost_v2 --chip 0x19 0 --partitionlayout flash.xml.bin --ratchet_blob ratchet_blob.bin --list images_list.xml zerosbk [ 0.0343 ] MB1: Nvheader already present is mb1_t194_prod.bin [ 0.0349 ] Header already present for mb1_t194_prod_sigheader.bin [ 0.0352 ] MB1: Nvheader already present is mb1_t194_prod.bin [ 0.0374 ] Header already present for mb1_t194_prod_sigheader.bin [ 0.0378 ] adding BCH for spe_t194.bin [ 0.0399 ] adding BCH for spe_t194.bin [ 0.0414 ] adding BCH for nvtboot_t194.bin [ 0.0430 ] adding BCH for nvtboot_t194.bin [ 0.0453 ] Header already present for preboot_c10_prod_cr.bin [ 0.0475 ] Header already present for preboot_c10_prod_cr.bin [ 0.0477 ] Header already present for mce_c10_prod_cr.bin [ 0.0480 ] Header already present for mce_c10_prod_cr.bin [ 0.0486 ] adding BCH for mts_c10_prod_cr.bin [ 0.0504 ] adding BCH for mts_c10_prod_cr.bin [ 0.0886 ] adding BCH for cboot_t194.bin [ 0.1285 ] adding BCH for cboot_t194.bin [ 0.1335 ] adding BCH for tegra194-p2888-0001-p2822-0000.dtb [ 0.1385 ] adding BCH for tegra194-p2888-0001-p2822-0000.dtb [ 0.1417 ] adding BCH for tos-trusty_t194.img [ 0.1448 ] adding BCH for tos-trusty_t194.img [ 0.1492 ] adding BCH for eks.img [ 0.1534 ] adding BCH for eks.img [ 0.1536 ] adding BCH for bpmp_t194.bin [ 0.1538 ] adding BCH for bpmp_t194.bin [ 0.1634 ] adding BCH for tegra194-a02-bpmp-p2888-a04.dtb [ 0.1731 ] adding BCH for 
tegra194-a02-bpmp-p2888-a04.dtb [ 0.1814 ] adding BCH for camera-rtcpu-rce.img [ 0.1895 ] adding BCH for camera-rtcpu-rce.img [ 0.1924 ] adding BCH for adsp-fw.bin [ 0.1953 ] adding BCH for adsp-fw.bin [ 0.1962 ] Header already present for warmboot_t194_prod.bin [ 0.1972 ] Header already present for warmboot_t194_prod.bin [ 0.1975 ] adding BCH for recovery.img [ 0.2152 ] adding BCH for tegra194-p2888-0001-p2822-0000.dtb.rec [ 0.7566 ] adding BCH for boot.img [ 0.7743 ] adding BCH for boot.img [ 1.2452 ] adding BCH for kernel_tegra194-p2888-0001-p2822-0000.dtb [ 1.7163 ] adding BCH for kernel_tegra194-p2888-0001-p2822-0000.dtb [ 1.7243 ] [ 1.7244 ] Filling MB1 storage info [ 1.7244 ] Generating br-bct [ 1.7256 ] Performing cfg overlay [ 1.7256 ] ['tegra194-mb1-bct-memcfg-p2888.cfg', 'tegra194-memcfg-sw-override.cfg'] [ 1.7259 ] sw_memcfg_overlay.pl -c tegra194-mb1-bct-memcfg-p2888.cfg -s tegra194-memcfg-sw-override.cfg -o ....../Linux_for_Tegra/bootloader/407391/tmp0p6j3fq_1.cfg [ 1.7698 ] [ 1.7699 ] Updating dev and MSS params in BR BCT [ 1.7699 ] tegrabct_v2 --dev_param tegra194-br-bct-sdmmc.cfg --sdram ....../Linux_for_Tegra/bootloader/407391/tmp0p6j3fq_1.cfg --brbct br_bct.cfg --sfuse tegra194-mb1-soft-fuses-l4t.cfg --chip 0x19 0 [ 1.8085 ] [ 1.8086 ] Updating bl info [ 1.8093 ] tegrabct_v2 --brbct br_bct_BR.bct --chip 0x19 0 --updateblinfo flash.xml.bin [ 1.8105 ] [ 1.8105 ] Generating signatures [ 1.8112 ] tegrasign_v2 --key None --list images_list.xml --pubkeyhash pub_key.key [ 1.8119 ] Assuming zero filled SBK key [ 1.9290 ] [ 1.9290 ] Generating br-bct [ 1.9300 ] Performing cfg overlay [ 1.9301 ] ['....../Linux_for_Tegra/bootloader/407391/tmp0p6j3fq_1.cfg'] [ 1.9301 ] Updating dev and MSS params in BR BCT [ 1.9301 ] tegrabct_v2 --dev_param tegra194-br-bct-sdmmc.cfg --sdram ....../Linux_for_Tegra/bootloader/407391/tmp0p6j3fq_1.cfg --brbct br_bct.cfg --sfuse tegra194-mb1-soft-fuses-l4t.cfg --chip 0x19 0 [ 1.9757 ] [ 1.9757 ] Updating bl info [ 1.9768 ] 
tegrabct_v2 --brbct br_bct_BR.bct --chip 0x19 0 --updateblinfo flash.xml.bin --updatesig images_list_signed.xml [ 1.9793 ] [ 1.9794 ] Updating smd info [ 1.9803 ] tegrabct_v2 --brbct br_bct_BR.bct --chip 0x19 --updatesmdinfo flash.xml.bin [ 1.9815 ] [ 1.9815 ] Updating Odmdata [ 1.9825 ] tegrabct_v2 --brbct br_bct_BR.bct --chip 0x19 0 --updatefields Odmdata =0x9190000 [ 1.9835 ] [ 1.9836 ] Get Signed section of bct [ 1.9844 ] tegrabct_v2 --brbct br_bct_BR.bct --chip 0x19 0 --listbct bct_list.xml [ 1.9851 ] [ 1.9857 ] tegrasign_v2 --key None --list bct_list.xml --pubkeyhash pub_key.key --getmontgomeryvalues montgomery.bin [ 1.9862 ] Assuming zero filled SBK key [ 1.9864 ] [ 1.9864 ] Updating BCT with signature [ 1.9875 ] tegrabct_v2 --brbct br_bct_BR.bct --chip 0x19 0 --updatesig bct_list_signed.xml [ 1.9886 ] [ 1.9887 ] Generating coldboot mb1-bct [ 1.9899 ] tegrabct_v2 --chip 0x19 0 --mb1bct mb1_cold_boot_bct.cfg --sdram ....../Linux_for_Tegra/bootloader/407391/tmp0p6j3fq_1.cfg --misc tegra194-mb1-bct-misc-l4t.cfg --scr tegra194-mb1-bct-scr-cbb-mini.cfg --pinmux tegra19x-mb1-pinmux-p2888-0000-a04-p2822-0000-b01.cfg --pmc tegra19x-mb1-padvoltage-p2888-0000-a00-p2822-0000-a00.cfg --pmic tegra194-mb1-bct-pmic-p2888-0001-a04-E-0-p2822-0000.cfg --brcommand tegra194-mb1-bct-reset-p2888-0000-p2822-0000.cfg --prod tegra19x-mb1-prod-p2888-0000-p2822-0000.cfg --gpioint tegra194-mb1-bct-gpioint-p2888-0000-p2822-0000.cfg --uphy tegra194-mb1-uphy-lane-p2888-0000-p2822-0000.cfg --device tegra19x-mb1-bct-device-sdmmc.cfg [ 1.9907 ] MB1-BCT version: 0x1 [ 1.9910 ] Parsing config file :tegra19x-mb1-pinmux-p2888-0000-a04-p2822-0000-b01.cfg [ 1.9915 ] Added Platform Config 0 data with size :- 3008 [ 1.9928 ] Parsing config file :tegra194-mb1-bct-scr-cbb-mini.cfg [ 1.9931 ] Added Platform Config 1 data with size :- 19640 [ 2.0032 ] Parsing config file :tegra19x-mb1-padvoltage-p2888-0000-a00-p2822-0000-a00.cfg [ 2.0037 ] Added Platform Config 2 data with size :- 24 [ 2.0039 ] Parsing 
config file :tegra194-mb1-bct-pmic-p2888-0001-a04-E-0-p2822-0000.cfg [ 2.0043 ] Added Platform Config 4 data with size :- 348 [ 2.0043 ] [ 2.0044 ] Parsing config file :tegra194-mb1-bct-reset-p2888-0000-p2822-0000.cfg [ 2.0044 ] Added Platform Config 3 data with size :- 92 [ 2.0044 ] [ 2.0044 ] Parsing config file :tegra19x-mb1-prod-p2888-0000-p2822-0000.cfg [ 2.0044 ] Added Platform Config 5 data with size :- 56 [ 2.0044 ] [ 2.0044 ] Parsing config file :tegra194-mb1-bct-gpioint-p2888-0000-p2822-0000.cfg [ 2.0044 ] Added Platform Config 7 data with size :- 392 [ 2.0044 ] [ 2.0044 ] Parsing config file :tegra194-mb1-uphy-lane-p2888-0000-p2822-0000.cfg [ 2.0044 ] Added Platform Config 8 data with size :- 12 [ 2.0044 ] [ 2.0044 ] Parsing config file :tegra19x-mb1-bct-device-sdmmc.cfg [ 2.0044 ] Added Platform Config 9 data with size :- 32 [ 2.0044 ] [ 2.0045 ] Updating mb1-bct with firmware information [ 2.0056 ] tegrabct_v2 --chip 0x19 --mb1bct mb1_cold_boot_bct_MB1.bct --updatefwinfo flash.xml.bin [ 2.0065 ] MB1-BCT version: 0x1 [ 2.0069 ] [ 2.0070 ] Updating mb1-bct with storage information [ 2.0082 ] tegrabct_v2 --chip 0x19 --mb1bct mb1_cold_boot_bct_MB1.bct --updatestorageinfo flash.xml.bin [ 2.0091 ] MB1-BCT version: 0x1 [ 2.0095 ] [ 2.0096 ] Updating mb1-bct with ratchet information [ 2.0106 ] tegrabct_v2 --chip 0x19 --mb1bct mb1_cold_boot_bct_MB1.bct --minratchet tegra194-mb1-bct-ratchet-p2888-0000-p2822-0000.cfg [ 2.0113 ] MB1-BCT version: 0x1 [ 2.0117 ] FwIndex: 1, MinRatchetLevel: 0 [ 2.0117 ] FwIndex: 2, MinRatchetLevel: 0 [ 2.0117 ] FwIndex: 3, MinRatchetLevel: 0 [ 2.0117 ] FwIndex: 4, MinRatchetLevel: 0 [ 2.0117 ] FwIndex: 5, MinRatchetLevel: 0 [ 2.0117 ] FwIndex: 6, MinRatchetLevel: 0 [ 2.0117 ] FwIndex: 7, MinRatchetLevel: 0 [ 2.0117 ] FwIndex: 8, MinRatchetLevel: 0 [ 2.0117 ] FwIndex: 11, MinRatchetLevel: 0 [ 2.0117 ] FwIndex: 12, MinRatchetLevel: 0 [ 2.0118 ] FwIndex: 13, MinRatchetLevel: 0 [ 2.0118 ] FwIndex: 14, MinRatchetLevel: 0 [ 2.0118 ] 
FwIndex: 15, MinRatchetLevel: 0 [ 2.0118 ] FwIndex: 16, MinRatchetLevel: 0 [ 2.0118 ] FwIndex: 17, MinRatchetLevel: 0 [ 2.0118 ] FwIndex: 18, MinRatchetLevel: 0 [ 2.0118 ] FwIndex: 19, MinRatchetLevel: 0 [ 2.0118 ] FwIndex: 30, MinRatchetLevel: 0 [ 2.0118 ] FwIndex: 31, MinRatchetLevel: 0 [ 2.0118 ] [ 2.0130 ] tegrahost_v2 --chip 0x19 --align mb1_cold_boot_bct_MB1.bct [ 2.0139 ] [ 2.0150 ] tegrahost_v2 --chip 0x19 0 --magicid MBCT --ratchet_blob ratchet_blob.bin --appendsigheader mb1_cold_boot_bct_MB1.bct zerosbk [ 2.0160 ] adding BCH for mb1_cold_boot_bct_MB1.bct [ 2.0168 ] [ 2.0180 ] tegrasign_v2 --key None --list mb1_cold_boot_bct_MB1_sigheader.bct_list.xml --pubkeyhash pub_key.key [ 2.0188 ] Assuming zero filled SBK key [ 2.0192 ] [ 2.0206 ] tegrahost_v2 --chip 0x19 0 --updatesigheader mb1_cold_boot_bct_MB1_sigheader.bct.encrypt mb1_cold_boot_bct_MB1_sigheader.bct.hash zerosbk [ 2.0221 ] [ 2.0222 ] Generating recovery mb1-bct [ 2.0233 ] tegrabct_v2 --chip 0x19 0 --mb1bct mb1_bct.cfg --sdram ....../Linux_for_Tegra/bootloader/407391/tmp0p6j3fq_1.cfg --misc tegra194-mb1-bct-misc-flash.cfg --scr tegra194-mb1-bct-scr-cbb-mini.cfg --pinmux tegra19x-mb1-pinmux-p2888-0000-a04-p2822-0000-b01.cfg --pmc tegra19x-mb1-padvoltage-p2888-0000-a00-p2822-0000-a00.cfg --pmic tegra194-mb1-bct-pmic-p2888-0001-a04-E-0-p2822-0000.cfg --brcommand tegra194-mb1-bct-reset-p2888-0000-p2822-0000.cfg --prod tegra19x-mb1-prod-p2888-0000-p2822-0000.cfg --gpioint tegra194-mb1-bct-gpioint-p2888-0000-p2822-0000.cfg --uphy tegra194-mb1-uphy-lane-p2888-0000-p2822-0000.cfg --device tegra19x-mb1-bct-device-sdmmc.cfg [ 2.0244 ] MB1-BCT version: 0x1 [ 2.0247 ] Parsing config file :tegra19x-mb1-pinmux-p2888-0000-a04-p2822-0000-b01.cfg [ 2.0252 ] Added Platform Config 0 data with size :- 3008 [ 2.0264 ] Parsing config file :tegra194-mb1-bct-scr-cbb-mini.cfg [ 2.0267 ] Added Platform Config 1 data with size :- 19640 [ 2.0360 ] Parsing config file :tegra19x-mb1-padvoltage-p2888-0000-a00-p2822-0000-a00.cfg 
[ 2.0364 ] Added Platform Config 2 data with size :- 24 [ 2.0366 ] [ 2.0366 ] Parsing config file :tegra194-mb1-bct-pmic-p2888-0001-a04-E-0-p2822-0000.cfg [ 2.0366 ] Added Platform Config 4 data with size :- 348 [ 2.0366 ] [ 2.0366 ] Parsing config file :tegra194-mb1-bct-reset-p2888-0000-p2822-0000.cfg [ 2.0366 ] Added Platform Config 3 data with size :- 92 [ 2.0367 ] [ 2.0367 ] Parsing config file :tegra19x-mb1-prod-p2888-0000-p2822-0000.cfg [ 2.0367 ] Added Platform Config 5 data with size :- 56 [ 2.0367 ] [ 2.0367 ] Parsing config file :tegra194-mb1-bct-gpioint-p2888-0000-p2822-0000.cfg [ 2.0367 ] Added Platform Config 7 data with size :- 392 [ 2.0367 ] [ 2.0367 ] Parsing config file :tegra194-mb1-uphy-lane-p2888-0000-p2822-0000.cfg [ 2.0367 ] Added Platform Config 8 data with size :- 12 [ 2.0367 ] [ 2.0367 ] Parsing config file :tegra19x-mb1-bct-device-sdmmc.cfg [ 2.0367 ] Added Platform Config 9 data with size :- 32 [ 2.0367 ] [ 2.0368 ] Updating mb1-bct with firmware information [ 2.0377 ] tegrabct_v2 --chip 0x19 --mb1bct mb1_bct_MB1.bct --updatefwinfo flash.xml.bin [ 2.0386 ] MB1-BCT version: 0x1 [ 2.0389 ] [ 2.0389 ] Updating mb1-bct with storage information [ 2.0399 ] tegrabct_v2 --chip 0x19 --mb1bct mb1_bct_MB1.bct --updatestorageinfo flash.xml.bin [ 2.0408 ] MB1-BCT version: 0x1 [ 2.0411 ] [ 2.0411 ] Updating mb1-bct with ratchet information [ 2.0421 ] tegrabct_v2 --chip 0x19 --mb1bct mb1_bct_MB1.bct --minratchet tegra194-mb1-bct-ratchet-p2888-0000-p2822-0000.cfg [ 2.0431 ] MB1-BCT version: 0x1 [ 2.0433 ] FwIndex: 1, MinRatchetLevel: 0 [ 2.0434 ] FwIndex: 2, MinRatchetLevel: 0 [ 2.0434 ] FwIndex: 3, MinRatchetLevel: 0 [ 2.0434 ] FwIndex: 4, MinRatchetLevel: 0 [ 2.0434 ] FwIndex: 5, MinRatchetLevel: 0 [ 2.0434 ] FwIndex: 6, MinRatchetLevel: 0 [ 2.0434 ] FwIndex: 7, MinRatchetLevel: 0 [ 2.0435 ] FwIndex: 8, MinRatchetLevel: 0 [ 2.0435 ] FwIndex: 11, MinRatchetLevel: 0 [ 2.0435 ] FwIndex: 12, MinRatchetLevel: 0 [ 2.0435 ] FwIndex: 13, MinRatchetLevel: 0 [ 
2.0435 ] FwIndex: 14, MinRatchetLevel: 0 [ 2.0435 ] FwIndex: 15, MinRatchetLevel: 0 [ 2.0435 ] FwIndex: 16, MinRatchetLevel: 0 [ 2.0435 ] FwIndex: 17, MinRatchetLevel: 0 [ 2.0435 ] FwIndex: 18, MinRatchetLevel: 0 [ 2.0435 ] FwIndex: 19, MinRatchetLevel: 0 [ 2.0435 ] FwIndex: 30, MinRatchetLevel: 0 [ 2.0435 ] FwIndex: 31, MinRatchetLevel: 0 [ 2.0435 ] [ 2.0446 ] tegrahost_v2 --chip 0x19 --align mb1_bct_MB1.bct [ 2.0456 ] [ 2.0466 ] tegrahost_v2 --chip 0x19 0 --magicid MBCT --ratchet_blob ratchet_blob.bin --appendsigheader mb1_bct_MB1.bct zerosbk [ 2.0475 ] adding BCH for mb1_bct_MB1.bct [ 2.0483 ] [ 2.0495 ] tegrasign_v2 --key None --list mb1_bct_MB1_sigheader.bct_list.xml --pubkeyhash pub_key.key [ 2.0505 ] Assuming zero filled SBK key [ 2.0508 ] [ 2.0520 ] tegrahost_v2 --chip 0x19 0 --updatesigheader mb1_bct_MB1_sigheader.bct.encrypt mb1_bct_MB1_sigheader.bct.hash zerosbk [ 2.0533 ] [ 2.0533 ] Generating coldboot mem-bct [ 2.0540 ] tegrabct_v2 --chip 0x19 0 --sdram ....../Linux_for_Tegra/bootloader/407391/tmp0p6j3fq_1.cfg --membct ....../Linux_for_Tegra/bootloader/407391/tmp0p6j3fq_1_1.bct ....../Linux_for_Tegra/bootloader/407391/tmp0p6j3fq_1_2.bct ....../Linux_for_Tegra/bootloader/407391/tmp0p6j3fq_1_3.bct ....../Linux_for_Tegra/bootloader/407391/tmp0p6j3fq_1_4.bct [ 2.0547 ] Packing sdram param for instance[0] [ 2.0937 ] Packing sdram param for instance[1] [ 2.0938 ] Packing sdram param for instance[2] [ 2.0938 ] Packing sdram param for instance[3] [ 2.0938 ] Packing sdram param for instance[4] [ 2.0938 ] Packing sdram param for instance[5] [ 2.0939 ] Packing sdram param for instance[6] [ 2.0939 ] Packing sdram param for instance[7] [ 2.0939 ] Packing sdram param for instance[8] [ 2.0939 ] Packing sdram param for instance[9] [ 2.0939 ] Packing sdram param for instance[10] [ 2.0939 ] Packing sdram param for instance[11] [ 2.0939 ] Packing sdram param for instance[12] [ 2.0939 ] Packing sdram param for instance[13] [ 2.0939 ] Packing sdram param for instance[14] [ 
2.0939 ] Packing sdram param for instance[15] [ 2.0939 ] [ 2.0939 ] Getting sector size from pt [ 2.0950 ] tegraparser_v2 --getsectorsize flash.xml.bin sector_info.bin [ 2.0960 ] [ 2.0961 ] BlockSize read from layout is 200 [ 2.0971 ] tegrahost_v2 --chip 0x19 0 --blocksize 512 --magicid MEMB --addsigheader_multi ....../Linux_for_Tegra/bootloader/407391/tmp0p6j3fq_1_1.bct ....../Linux_for_Tegra/bootloader/407391/tmp0p6j3fq_1_2.bct ....../Linux_for_Tegra/bootloader/407391/tmp0p6j3fq_1_3.bct ....../Linux_for_Tegra/bootloader/407391/tmp0p6j3fq_1_4.bct [ 2.0981 ] adding BCH for ....../Linux_for_Tegra/bootloader/407391/tmp0p6j3fq_1_1.bct [ 2.1005 ] [ 2.1016 ] tegrahost_v2 --chip 0x19 --align mem_coldboot.bct [ 2.1026 ] [ 2.1035 ] tegrahost_v2 --chip 0x19 0 --magicid MEMB --ratchet_blob ratchet_blob.bin --appendsigheader mem_coldboot.bct zerosbk [ 2.1045 ] Header already present for mem_coldboot.bct [ 2.1049 ] [ 2.1062 ] tegrasign_v2 --key None --list mem_coldboot_sigheader.bct_list.xml --pubkeyhash pub_key.key [ 2.1070 ] Assuming zero filled SBK key [ 2.1076 ] [ 2.1088 ] tegrahost_v2 --chip 0x19 0 --updatesigheader mem_coldboot_sigheader.bct.encrypt mem_coldboot_sigheader.bct.hash zerosbk [ 2.1101 ] [ 2.1102 ] Generating recovery mem-bct [ 2.1112 ] tegrabct_v2 --chip 0x19 0 --sdram ....../Linux_for_Tegra/bootloader/407391/tmp0p6j3fq_1.cfg --membct ....../Linux_for_Tegra/bootloader/407391/tmp0p6j3fq_1_1.bct ....../Linux_for_Tegra/bootloader/407391/tmp0p6j3fq_1_2.bct ....../Linux_for_Tegra/bootloader/407391/tmp0p6j3fq_1_3.bct ....../Linux_for_Tegra/bootloader/407391/tmp0p6j3fq_1_4.bct [ 2.1121 ] Packing sdram param for instance[0] [ 2.1538 ] Packing sdram param for instance[1] [ 2.1539 ] Packing sdram param for instance[2] [ 2.1540 ] Packing sdram param for instance[3] [ 2.1540 ] Packing sdram param for instance[4] [ 2.1540 ] Packing sdram param for instance[5] [ 2.1540 ] Packing sdram param for instance[6] [ 2.1540 ] Packing sdram param for instance[7] [ 2.1540 ] Packing 
sdram param for instance[8] [ 2.1540 ] Packing sdram param for instance[9] [ 2.1540 ] Packing sdram param for instance[10] [ 2.1540 ] Packing sdram param for instance[11] [ 2.1540 ] Packing sdram param for instance[12] [ 2.1540 ] Packing sdram param for instance[13] [ 2.1540 ] Packing sdram param for instance[14] [ 2.1540 ] Packing sdram param for instance[15] [ 2.1540 ] [ 2.1541 ] Reading ramcode from backup chip_info.bin file [ 2.1542 ] RAMCODE Read from Device: 2 [ 2.1543 ] Disabled BPMP dtb trim, using default dtb [ 2.1543 ] [ 2.1554 ] tegrahost_v2 --chip 0x19 --align mem_rcm.bct [ 2.1564 ] [ 2.1574 ] tegrahost_v2 --chip 0x19 0 --magicid MEMB --ratchet_blob ratchet_blob.bin --appendsigheader mem_rcm.bct zerosbk [ 2.1583 ] adding BCH for mem_rcm.bct [ 2.1595 ] [ 2.1608 ] tegrasign_v2 --key None --list mem_rcm_sigheader.bct_list.xml --pubkeyhash pub_key.key [ 2.1618 ] Assuming zero filled SBK key [ 2.1622 ] [ 2.1633 ] tegrahost_v2 --chip 0x19 0 --updatesigheader mem_rcm_sigheader.bct.encrypt mem_rcm_sigheader.bct.hash zerosbk [ 2.1647 ] [ 2.1648 ] Copying signatures [ 2.1657 ] tegrahost_v2 --chip 0x19 0 --partitionlayout flash.xml.bin --updatesig images_list_signed.xml [ 3.1271 ] [ 3.1271 ] Boot Rom communication [ 3.1282 ] tegrarcm_v2 --chip 0x19 0 --rcm rcm_list_signed.xml [ 3.1291 ] BR_CID: 0x88021911646406490000000012000140 [ 3.1298 ] RCM version 0X190001 [ 3.1484 ] Boot Rom communication completed [ 4.1641 ] [ 5.1674 ] tegrarcm_v2 --isapplet [ 5.1683 ] Applet version 01.00.0000 [ 5.1703 ] [ 5.1703 ] Sending BCTs [ 5.1715 ] tegrarcm_v2 --download bct_bootrom br_bct_BR.bct --download bct_mb1 mb1_bct_MB1_sigheader.bct.encrypt --download bct_mem mem_rcm_sigheader.bct.encrypt [ 5.1725 ] Applet version 01.00.0000 [ 5.1937 ] Sending bct_bootrom [ 5.1939 ] [................................................] 100% [ 5.1950 ] Sending bct_mb1 [ 5.1995 ] [................................................] 
100% [ 5.2030 ] Sending bct_mem [ 5.2484 ] [................................................] 100% [ 5.3300 ] [ 5.3300 ] Generating blob [ 5.3313 ] tegrahost_v2 --chip 0x19 --align blob_nvtboot_recovery_cpu_t194.bin [ 5.3325 ] [ 5.3335 ] tegrahost_v2 --chip 0x19 0 --magicid CPBL --ratchet_blob ratchet_blob.bin --appendsigheader blob_nvtboot_recovery_cpu_t194.bin zerosbk [ 5.3344 ] adding BCH for blob_nvtboot_recovery_cpu_t194.bin [ 5.3402 ] [ 5.3416 ] tegrasign_v2 --key None --list blob_nvtboot_recovery_cpu_t194_sigheader.bin_list.xml --pubkeyhash pub_key.key [ 5.3425 ] Assuming zero filled SBK key [ 5.3431 ] [ 5.3443 ] tegrahost_v2 --chip 0x19 0 --updatesigheader blob_nvtboot_recovery_cpu_t194_sigheader.bin.encrypt blob_nvtboot_recovery_cpu_t194_sigheader.bin.hash zerosbk [ 5.3483 ] [ 5.3495 ] tegrahost_v2 --chip 0x19 --align blob_nvtboot_recovery_t194.bin [ 5.3505 ] [ 5.3514 ] tegrahost_v2 --chip 0x19 0 --magicid MB2B --ratchet_blob ratchet_blob.bin --appendsigheader blob_nvtboot_recovery_t194.bin zerosbk [ 5.3520 ] adding BCH for blob_nvtboot_recovery_t194.bin [ 5.3535 ] [ 5.3550 ] tegrasign_v2 --key None --list blob_nvtboot_recovery_t194_sigheader.bin_list.xml --pubkeyhash pub_key.key [ 5.3559 ] Assuming zero filled SBK key [ 5.3564 ] [ 5.3579 ] tegrahost_v2 --chip 0x19 0 --updatesigheader blob_nvtboot_recovery_t194_sigheader.bin.encrypt blob_nvtboot_recovery_t194_sigheader.bin.hash zerosbk [ 5.3605 ] [ 5.3615 ] tegrahost_v2 --chip 0x19 --align blob_preboot_c10_prod_cr.bin [ 5.3623 ] [ 5.3631 ] tegrahost_v2 --chip 0x19 0 --magicid MTSP --ratchet_blob ratchet_blob.bin --appendsigheader blob_preboot_c10_prod_cr.bin zerosbk [ 5.3638 ] Header already present for blob_preboot_c10_prod_cr.bin [ 5.3642 ] [ 5.3658 ] tegrasign_v2 --key None --list blob_preboot_c10_prod_cr_sigheader.bin_list.xml --pubkeyhash pub_key.key [ 5.3668 ] Assuming zero filled SBK key [ 5.3671 ] [ 5.3686 ] tegrahost_v2 --chip 0x19 0 --updatesigheader blob_preboot_c10_prod_cr_sigheader.bin.encrypt 
blob_preboot_c10_prod_cr_sigheader.bin.hash zerosbk [ 5.3700 ] [ 5.3711 ] tegrahost_v2 --chip 0x19 --align blob_mce_c10_prod_cr.bin [ 5.3721 ] [ 5.3732 ] tegrahost_v2 --chip 0x19 0 --magicid MTSM --ratchet_blob ratchet_blob.bin --appendsigheader blob_mce_c10_prod_cr.bin zerosbk [ 5.3741 ] Header already present for blob_mce_c10_prod_cr.bin [ 5.3760 ] [ 5.3775 ] tegrasign_v2 --key None --list blob_mce_c10_prod_cr_sigheader.bin_list.xml --pubkeyhash pub_key.key [ 5.3784 ] Assuming zero filled SBK key [ 5.3789 ] [ 5.3803 ] tegrahost_v2 --chip 0x19 0 --updatesigheader blob_mce_c10_prod_cr_sigheader.bin.encrypt blob_mce_c10_prod_cr_sigheader.bin.hash zerosbk [ 5.3831 ] [ 5.3844 ] tegrahost_v2 --chip 0x19 --align blob_mts_c10_prod_cr.bin [ 5.3856 ] [ 5.3866 ] tegrahost_v2 --chip 0x19 0 --magicid MTSB --ratchet_blob ratchet_blob.bin --appendsigheader blob_mts_c10_prod_cr.bin zerosbk [ 5.3875 ] adding BCH for blob_mts_c10_prod_cr.bin [ 5.4303 ] [ 5.4315 ] tegrasign_v2 --key None --list blob_mts_c10_prod_cr_sigheader.bin_list.xml --pubkeyhash pub_key.key [ 5.4326 ] Assuming zero filled SBK key [ 5.4367 ] [ 5.4379 ] tegrahost_v2 --chip 0x19 0 --updatesigheader blob_mts_c10_prod_cr_sigheader.bin.encrypt blob_mts_c10_prod_cr_sigheader.bin.hash zerosbk [ 5.4631 ] [ 5.4641 ] tegrahost_v2 --chip 0x19 --align blob_bpmp_t194.bin [ 5.4649 ] [ 5.4656 ] tegrahost_v2 --chip 0x19 0 --magicid BPMF --ratchet_blob ratchet_blob.bin --appendsigheader blob_bpmp_t194.bin zerosbk [ 5.4663 ] adding BCH for blob_bpmp_t194.bin [ 5.4760 ] [ 5.4772 ] tegrasign_v2 --key None --list blob_bpmp_t194_sigheader.bin_list.xml --pubkeyhash pub_key.key [ 5.4781 ] Assuming zero filled SBK key [ 5.4795 ] [ 5.4807 ] tegrahost_v2 --chip 0x19 0 --updatesigheader blob_bpmp_t194_sigheader.bin.encrypt blob_bpmp_t194_sigheader.bin.hash zerosbk [ 5.4901 ] [ 5.4909 ] tegrahost_v2 --chip 0x19 --align blob_tegra194-a02-bpmp-p2888-a04.dtb [ 5.4916 ] [ 5.4924 ] tegrahost_v2 --chip 0x19 0 --magicid BPMD --ratchet_blob 
ratchet_blob.bin --appendsigheader blob_tegra194-a02-bpmp-p2888-a04.dtb zerosbk [ 5.4930 ] adding BCH for blob_tegra194-a02-bpmp-p2888-a04.dtb [ 5.5016 ] [ 5.5028 ] tegrasign_v2 --key None --list blob_tegra194-a02-bpmp-p2888-a04_sigheader.dtb_list.xml --pubkeyhash pub_key.key [ 5.5036 ] Assuming zero filled SBK key [ 5.5049 ] [ 5.5062 ] tegrahost_v2 --chip 0x19 0 --updatesigheader blob_tegra194-a02-bpmp-p2888-a04_sigheader.dtb.encrypt blob_tegra194-a02-bpmp-p2888-a04_sigheader.dtb.hash zerosbk [ 5.5157 ] [ 5.5166 ] tegrahost_v2 --chip 0x19 --align blob_spe_t194.bin [ 5.5174 ] [ 5.5181 ] tegrahost_v2 --chip 0x19 0 --magicid SPEF --ratchet_blob ratchet_blob.bin --appendsigheader blob_spe_t194.bin zerosbk [ 5.5189 ] adding BCH for blob_spe_t194.bin [ 5.5200 ] [ 5.5212 ] tegrasign_v2 --key None --list blob_spe_t194_sigheader.bin_list.xml --pubkeyhash pub_key.key [ 5.5221 ] Assuming zero filled SBK key [ 5.5225 ] [ 5.5238 ] tegrahost_v2 --chip 0x19 0 --updatesigheader blob_spe_t194_sigheader.bin.encrypt blob_spe_t194_sigheader.bin.hash zerosbk [ 5.5259 ] [ 5.5271 ] tegrahost_v2 --chip 0x19 --align blob_tos-trusty_t194.img [ 5.5281 ] [ 5.5291 ] tegrahost_v2 --chip 0x19 0 --magicid TOSB --ratchet_blob ratchet_blob.bin --appendsigheader blob_tos-trusty_t194.img zerosbk [ 5.5298 ] adding BCH for blob_tos-trusty_t194.img [ 5.5344 ] [ 5.5357 ] tegrasign_v2 --key None --list blob_tos-trusty_t194_sigheader.img_list.xml --pubkeyhash pub_key.key [ 5.5366 ] Assuming zero filled SBK key [ 5.5375 ] [ 5.5387 ] tegrahost_v2 --chip 0x19 0 --updatesigheader blob_tos-trusty_t194_sigheader.img.encrypt blob_tos-trusty_t194_sigheader.img.hash zerosbk [ 5.5447 ] [ 5.5455 ] tegrahost_v2 --chip 0x19 --align blob_eks.img [ 5.5460 ] [ 5.5466 ] tegrahost_v2 --chip 0x19 0 --magicid EKSB --ratchet_blob ratchet_blob.bin --appendsigheader blob_eks.img zerosbk [ 5.5471 ] adding BCH for blob_eks.img [ 5.5473 ] [ 5.5484 ] tegrasign_v2 --key None --list blob_eks_sigheader.img_list.xml --pubkeyhash 
pub_key.key [ 5.5493 ] Assuming zero filled SBK key [ 5.5495 ] [ 5.5508 ] tegrahost_v2 --chip 0x19 0 --updatesigheader blob_eks_sigheader.img.encrypt blob_eks_sigheader.img.hash zerosbk [ 5.5518 ] [ 5.5529 ] tegrahost_v2 --chip 0x19 --align blob_tegra194-p2888-0001-p2822-0000.dtb [ 5.5539 ] [ 5.5549 ] tegrahost_v2 --chip 0x19 0 --magicid CDTB --ratchet_blob ratchet_blob.bin --appendsigheader blob_tegra194-p2888-0001-p2822-0000.dtb zerosbk [ 5.5557 ] adding BCH for blob_tegra194-p2888-0001-p2822-0000.dtb [ 5.5622 ] [ 5.5634 ] tegrasign_v2 --key None --list blob_tegra194-p2888-0001-p2822-0000_sigheader.dtb_list.xml --pubkeyhash pub_key.key [ 5.5642 ] Assuming zero filled SBK key [ 5.5649 ] [ 5.5659 ] tegrahost_v2 --chip 0x19 0 --updatesigheader blob_tegra194-p2888-0001-p2822-0000_sigheader.dtb.encrypt blob_tegra194-p2888-0001-p2822-0000_sigheader.dtb.hash zerosbk [ 5.5702 ] [ 5.5713 ] tegrahost_v2 --chip 0x19 --generateblob blob.xml blob.bin [ 5.5720 ] number of images in blob are 11 [ 5.5723 ] blobsize is 6381592 [ 5.5724 ] Added binary blob_nvtboot_recovery_cpu_t194_sigheader.bin.encrypt of size 260032 [ 5.5743 ] Added binary blob_nvtboot_recovery_t194_sigheader.bin.encrypt of size 130928 [ 5.5747 ] Added binary blob_preboot_c10_prod_cr_sigheader.bin.encrypt of size 24016 [ 5.5750 ] Added binary blob_mce_c10_prod_cr_sigheader.bin.encrypt of size 143200 [ 5.5753 ] Added binary blob_mts_c10_prod_cr_sigheader.bin.encrypt of size 3430416 [ 5.5758 ] Added binary blob_bpmp_t194_sigheader.bin.encrypt of size 856352 [ 5.5766 ] Added binary blob_tegra194-a02-bpmp-p2888-a04_sigheader.dtb.encrypt of size 746752 [ 5.5769 ] Added binary blob_spe_t194_sigheader.bin.encrypt of size 94960 [ 5.5773 ] Added binary blob_tos-trusty_t194_sigheader.img.encrypt of size 402368 [ 5.5776 ] Added binary blob_eks_sigheader.img.encrypt of size 5136 [ 5.5778 ] Added binary blob_tegra194-p2888-0001-p2822-0000_sigheader.dtb.encrypt of size 287248 [ 5.5792 ] [ 5.5793 ] Sending bootloader and 
pre-requisite binaries [ 5.5803 ] tegrarcm_v2 --download blob blob.bin [ 5.5812 ] Applet version 01.00.0000 [ 5.5835 ] Sending blob [ 5.5836 ] [................................................] 100% [ 6.4621 ] [ 6.4632 ] tegrarcm_v2 --boot recovery [ 6.4641 ] Applet version 01.00.0000 [ 6.4898 ] [ 7.4921 ] tegrarcm_v2 --isapplet [ 8.0873 ] [ 8.0884 ] tegrarcm_v2 --ismb2 [ 8.1054 ] [ 8.1066 ] tegradevflash_v2 --iscpubl [ 8.1075 ] Bootloader version 01.00.0000 [ 8.1217 ] Bootloader version 01.00.0000 [ 8.1228 ] [ 8.1228 ] Retrieving storage infomation [ 8.1241 ] tegrarcm_v2 --oem platformdetails storage storage_info.bin [ 8.1250 ] Applet is not running on device. Continue with Bootloader [ 8.1469 ] [ 8.1481 ] tegradevflash_v2 --oem platformdetails storage storage_info.bin [ 8.1491 ] Bootloader version 01.00.0000 [ 8.1699 ] Saved platform info in storage_info.bin [ 8.1714 ] [ 8.1715 ] Flashing the device [ 8.1726 ] tegraparser_v2 --storageinfo storage_info.bin --generategpt --pt flash.xml.bin [ 8.1741 ] [ 8.1751 ] tegradevflash_v2 --pt flash.xml.bin --create [ 8.1760 ] Bootloader version 01.00.0000 [ 8.1978 ] Erasing sdmmc_boot: 3 ......... [Done] [ 9.3064 ] Writing partition secondary_gpt with gpt_secondary_0_3.bin [ 9.3067 ] [................................................] 100% [ 9.3393 ] Erasing sdmmc_user: 3 ......... [Done] [ 10.1030 ] Writing partition master_boot_record with mbr_1_3.bin [ 10.1033 ] [................................................] 100% [ 10.1044 ] Writing partition primary_gpt with gpt_primary_1_3.bin [ 10.1109 ] [................................................] 100% [ 10.1125 ] Writing partition secondary_gpt with gpt_secondary_1_3.bin [ 10.1319 ] [................................................] 100% [ 10.1524 ] Writing partition mb1 with mb1_t194_prod_sigheader.bin.encrypt [ 10.1527 ] [................................................] 
100% [ 10.1610 ] Writing partition mb1_b with mb1_t194_prod_sigheader.bin.encrypt [ 10.2669 ] [................................................] 100% [ 10.2753 ] Writing partition spe-fw with spe_t194_sigheader.bin.encrypt [ 10.3004 ] [................................................] 100% [ 10.3040 ] Writing partition spe-fw_b with spe_t194_sigheader.bin.encrypt [ 10.3239 ] [................................................] 100% [ 10.3276 ] Writing partition mb2 with nvtboot_t194_sigheader.bin.encrypt [ 10.3475 ] [................................................] 100% [ 10.3533 ] Writing partition mb2_b with nvtboot_t194_sigheader.bin.encrypt [ 10.3757 ] [................................................] 100% [ 10.3810 ] Writing partition mts-preboot with preboot_c10_prod_cr_sigheader.bin.encrypt [ 10.4035 ] [................................................] 100% [ 10.4051 ] Writing partition mts-preboot_b with preboot_c10_prod_cr_sigheader.bin.encrypt [ 10.4238 ] [................................................] 100% [ 10.4254 ] Writing partition SMD with slot_metadata.bin [ 10.4440 ] [................................................] 100% [ 10.4451 ] Writing partition SMD_b with slot_metadata.bin [ 10.4577 ] [................................................] 100% [ 10.4588 ] Writing partition VER_b with emmc_bootblob_ver.txt [ 10.4714 ] [................................................] 100% [ 10.4725 ] Writing partition VER with emmc_bootblob_ver.txt [ 10.4850 ] [................................................] 100% [ 10.4861 ] Writing partition master_boot_record with mbr_1_3.bin [ 10.4987 ] [................................................] 100% [ 10.4998 ] Writing partition APP with system.img [ 10.5069 ] [................................................] 100% [ 202.3141 ] Writing partition mts-mce with mce_c10_prod_cr_sigheader.bin.encrypt [ 202.3403 ] [................................................] 
100% [ 202.3448 ] Writing partition mts-mce_b with mce_c10_prod_cr_sigheader.bin.encrypt [ 202.3635 ] [................................................] 100% [ 202.3680 ] Writing partition mts-proper with mts_c10_prod_cr_sigheader.bin.encrypt [ 202.3867 ] [................................................] 100% [ 202.5104 ] Writing partition mts-proper_b with mts_c10_prod_cr_sigheader.bin.encrypt [ 202.5298 ] [................................................] 100% [ 202.6534 ] Writing partition cpu-bootloader with cboot_t194_sigheader.bin.encrypt [ 202.6729 ] [................................................] 100% [ 202.6850 ] Writing partition cpu-bootloader_b with cboot_t194_sigheader.bin.encrypt [ 202.7051 ] [................................................] 100% [ 202.7171 ] Writing partition bootloader-dtb with tegra194-p2888-0001-p2822-0000_sigheader.dtb.encrypt [ 202.7372 ] [................................................] 100% [ 202.7451 ] Writing partition bootloader-dtb_b with tegra194-p2888-0001-p2822-0000_sigheader.dtb.encrypt [ 202.7647 ] [................................................] 100% [ 202.7726 ] Writing partition secure-os with tos-trusty_t194_sigheader.img.encrypt [ 202.7921 ] [................................................] 100% [ 202.8028 ] Writing partition secure-os_b with tos-trusty_t194_sigheader.img.encrypt [ 202.8229 ] [................................................] 100% [ 202.8338 ] Writing partition eks with eks_sigheader.img.encrypt [ 202.8536 ] [................................................] 100% [ 202.8548 ] Writing partition eks_b with eks_sigheader.img.encrypt [ 202.8734 ] [................................................] 100% [ 202.8746 ] Writing partition bpmp-fw with bpmp_t194_sigheader.bin.encrypt [ 202.8932 ] [................................................] 100% [ 202.9154 ] Writing partition bpmp-fw_b with bpmp_t194_sigheader.bin.encrypt [ 202.9391 ] [................................................] 
100% [ 202.9615 ] Writing partition bpmp-fw-dtb with tegra194-a02-bpmp-p2888-a04_sigheader.dtb.encrypt [ 202.9968 ] [................................................] 100% [ 203.0161 ] Writing partition bpmp-fw-dtb_b with tegra194-a02-bpmp-p2888-a04_sigheader.dtb.encrypt [ 203.0388 ] [................................................] 100% [ 203.0581 ] Writing partition xusb-fw with xusb_sil_rel_fw [ 203.0807 ] [................................................] 100% [ 203.0848 ] Writing partition xusb-fw_b with xusb_sil_rel_fw [ 203.0913 ] [................................................] 100% [ 203.0954 ] Writing partition rce-fw with camera-rtcpu-rce_sigheader.img.encrypt [ 203.1021 ] [................................................] 100% [ 203.1097 ] Writing partition rce-fw_b with camera-rtcpu-rce_sigheader.img.encrypt [ 203.1291 ] [................................................] 100% [ 203.1368 ] Writing partition adsp-fw with adsp-fw_sigheader.bin.encrypt [ 203.1555 ] [................................................] 100% [ 203.1585 ] Writing partition adsp-fw_b with adsp-fw_sigheader.bin.encrypt [ 203.1771 ] [................................................] 100% [ 203.1801 ] Writing partition sc7 with warmboot_t194_prod_sigheader.bin.encrypt [ 203.1988 ] [................................................] 100% [ 203.2014 ] Writing partition sc7_b with warmboot_t194_prod_sigheader.bin.encrypt [ 203.2201 ] [................................................] 100% [ 203.2226 ] Writing partition BMP with bmp.blob [ 203.2412 ] [................................................] 100% [ 203.2462 ] Writing partition BMP_b with bmp.blob [ 203.2647 ] [................................................] 100% [ 203.2698 ] Writing partition recovery with recovery_sigheader.img.encrypt [ 203.2884 ] [................................................] 
100% [ 205.1264 ] Writing partition recovery-dtb with tegra194-p2888-0001-p2822-0000.dtb_sigheader.rec.encrypt [ 205.1402 ] [................................................] 100% [ 205.1481 ] Writing partition kernel-bootctrl with kernel_bootctrl.bin [ 205.1675 ] [................................................] 100% [ 205.1686 ] Writing partition kernel-bootctrl_b with kernel_bootctrl.bin [ 205.1812 ] [................................................] 100% [ 205.1823 ] Writing partition kernel with boot_sigheader.img.encrypt [ 205.1949 ] [................................................] 100% [ 206.7481 ] Writing partition kernel_b with boot_sigheader.img.encrypt [ 206.7590 ] [................................................] 100% [ 208.3667 ] Writing partition kernel-dtb with kernel_tegra194-p2888-0001-p2822-0000_sigheader.dtb.encrypt [ 208.3791 ] [................................................] 100% [ 208.3871 ] Writing partition kernel-dtb_b with kernel_tegra194-p2888-0001-p2822-0000_sigheader.dtb.encrypt [ 208.4077 ] [................................................] 100% [ 208.4349 ] [ 208.4362 ] tegradevflash_v2 --write BCT br_bct_BR.bct [ 208.4371 ] Bootloader version 01.00.0000 [ 208.4390 ] Writing partition BCT with br_bct_BR.bct [ 208.4392 ] [................................................] 100% [ 208.4960 ] [ 208.4991 ] tegradevflash_v2 --write MB1_BCT mb1_cold_boot_bct_MB1_sigheader.bct.encrypt [ 208.4999 ] Bootloader version 01.00.0000 [ 208.5017 ] Writing partition MB1_BCT with mb1_cold_boot_bct_MB1_sigheader.bct.encrypt [ 208.5021 ] [................................................] 100% [ 208.5221 ] [ 208.5232 ] tegradevflash_v2 --write MB1_BCT_b mb1_cold_boot_bct_MB1_sigheader.bct.encrypt [ 208.5239 ] Bootloader version 01.00.0000 [ 208.5257 ] Writing partition MB1_BCT_b with mb1_cold_boot_bct_MB1_sigheader.bct.encrypt [ 208.5261 ] [................................................] 
100% [ 208.5461 ] [ 208.5487 ] tegradevflash_v2 --write MEM_BCT mem_coldboot_sigheader.bct.encrypt [ 208.5497 ] Bootloader version 01.00.0000 [ 208.5516 ] Writing partition MEM_BCT with mem_coldboot_sigheader.bct.encrypt [ 208.5520 ] [................................................] 100% [ 208.5687 ] [ 208.5698 ] tegradevflash_v2 --write MEM_BCT_b mem_coldboot_sigheader.bct.encrypt [ 208.5707 ] Bootloader version 01.00.0000 [ 208.5726 ] Writing partition MEM_BCT_b with mem_coldboot_sigheader.bct.encrypt [ 208.5730 ] [................................................] 100% [ 208.5898 ] [ 208.5899 ] Flashing completed [ 208.5899 ] Coldbooting the device [ 208.5909 ] tegrarcm_v2 --ismb2 [ 208.5935 ] [ 208.5943 ] tegradevflash_v2 --reboot coldboot [ 208.5951 ] Bootloader version 01.00.0000 [ 208.6103 ] *** The target t186ref has been flashed successfully. *** Reset the board to boot from internal eMMC.
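A flash.sh log as long as the one above is easier to check with a script. The sketch below is minimal and rests on two assumptions. First, the output has been saved to a text file; the name flash.log used below is hypothetical. Second, the script relies only on the "Writing partition ... with ..." lines and the final "*** The target ... has been flashed successfully. ***" message shown above. It pulls out each partition/image pair and the flashed target name.

```python
import re
from typing import List, Optional, Tuple

# "Writing partition <name> with <image>" lines emitted while flashing
PARTITION_RE = re.compile(r"Writing partition (\S+) with (\S+)")
# Final status message printed by flash.sh when it succeeds
SUCCESS_RE = re.compile(
    r"\*\*\* The target (\S+) has been flashed successfully\. \*\*\*"
)


def parse_flash_log(text: str) -> Tuple[List[Tuple[str, str]], Optional[str]]:
    """Return ((partition, image) pairs in log order, target name or None).

    The target name is None when no success message is present.
    """
    pairs = PARTITION_RE.findall(text)
    match = SUCCESS_RE.search(text)
    return pairs, (match.group(1) if match else None)
```

Usage, assuming the hypothetical flash.log was produced by piping the flash.sh output through tee: open the file, pass its contents to parse_flash_log, and check that the returned target is not None before resetting the board.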

2.2.2.2 Host Computer After Flashing

After flashing, if the USB cable is still connected between your host computer and Jetson AGX Xavier, you'll see the following change in the output of the same command: lsusb | grep NVIDIA.

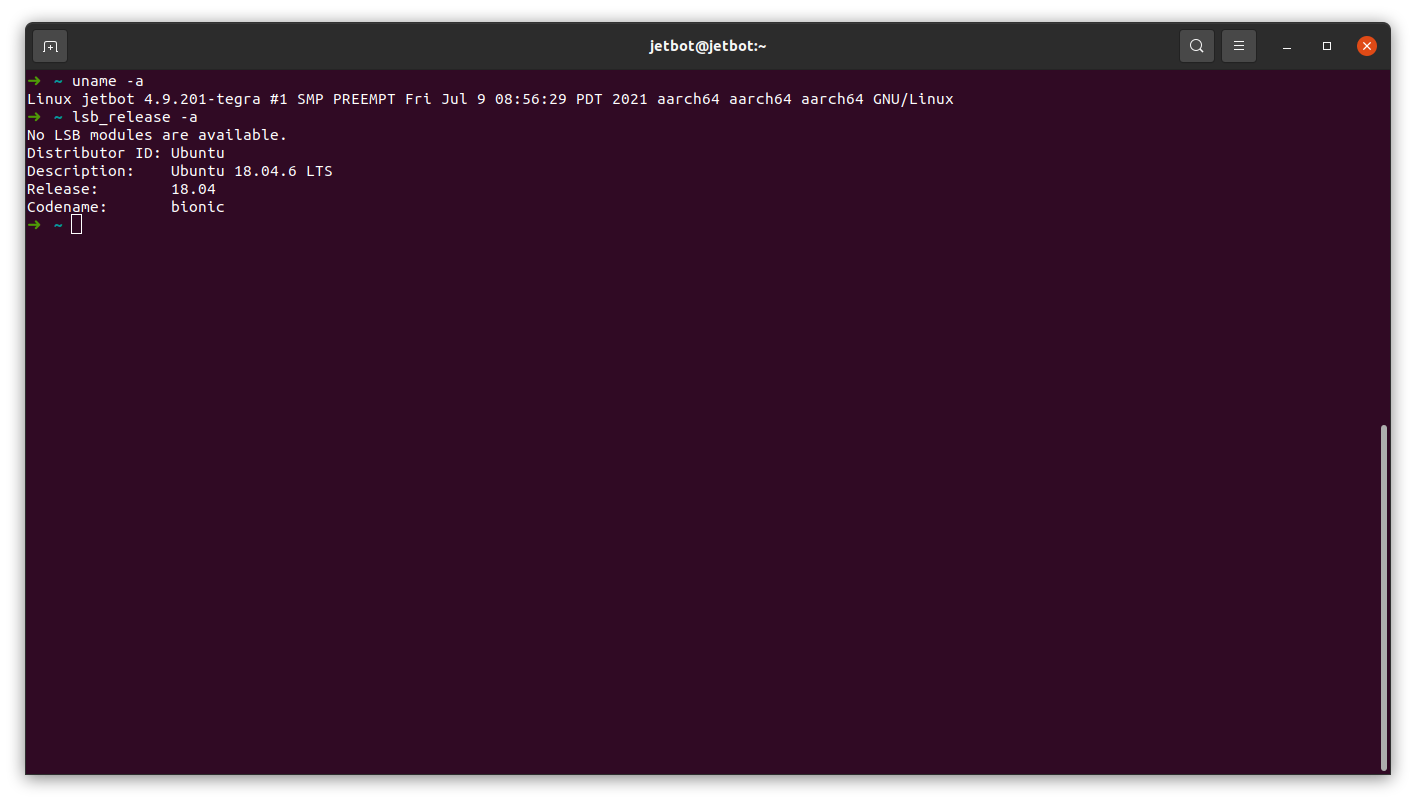

```
➜ ~ lsusb | grep NVIDIA
Bus 011 Device 056: ID 0955:7020 NVIDIA Corp. L4T (Linux for Tegra) running on Tegra
```
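The same check can be done programmatically, for example in a host-side script that waits for the board to show up. The sketch below is minimal and assumes the standard "Bus NNN Device NNN: ID vvvv:pppp description" layout of lsusb output. 0955 is the NVIDIA vendor ID and 7020 is the product ID seen above after flashing. The helper names are hypothetical.

```python
import re
from typing import NamedTuple, Optional


class UsbDevice(NamedTuple):
    bus: int
    device: int
    vendor_id: str
    product_id: str
    description: str


# Standard lsusb line layout: "Bus 011 Device 056: ID 0955:7020 NVIDIA Corp. ..."
LSUSB_RE = re.compile(
    r"Bus (\d+) Device (\d+): ID ([0-9a-fA-F]{4}):([0-9a-fA-F]{4})\s*(.*)"
)

NVIDIA_VENDOR_ID = "0955"


def parse_lsusb_line(line: str) -> Optional[UsbDevice]:
    """Parse one lsusb output line; return None if it does not match."""
    m = LSUSB_RE.search(line)
    if m is None:
        return None
    bus, dev, vid, pid, desc = m.groups()
    return UsbDevice(int(bus), int(dev), vid.lower(), pid.lower(), desc.strip())


def is_nvidia(dev: UsbDevice) -> bool:
    """True if the device reports NVIDIA's USB vendor ID."""
    return dev.vendor_id == NVIDIA_VENDOR_ID
```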

2.2.2.3 Test JetPack on Jetson AGX Xavier's eMMC After Flashing

```
➜ ~ cat /etc/nv_tegra_release
# R32 (release), REVISION: 6.1, GCID: 27863751, BOARD: t186ref, EABI: aarch64, DATE: Mon Jul 26 19:36:31 UTC 2021
```
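The first line of /etc/nv_tegra_release encodes the L4T version. Major release R32 combined with REVISION 6.1 gives L4T 32.6.1, which matches the Jetson_Linux_R32.6.1 tarball used for flashing. The sketch below extracts it and assumes the comma-separated "KEY: value" layout shown above. The function names are hypothetical.

```python
import re
from typing import Dict


def parse_nv_tegra_release(first_line: str) -> Dict[str, str]:
    """Parse '# R32 (release), REVISION: 6.1, GCID: ..., ...' into a dict."""
    line = first_line.lstrip("#").strip()
    fields: Dict[str, str] = {}
    for part in line.split(","):
        part = part.strip()
        m = re.fullmatch(r"R(\d+) \(release\)", part)
        if m:
            fields["RELEASE"] = m.group(1)
        elif ":" in part:
            # Split on the first colon only: DATE values contain colons too
            key, value = part.split(":", 1)
            fields[key.strip()] = value.strip()
    return fields


def l4t_version(fields: Dict[str, str]) -> str:
    """Combine major release and revision, e.g. '32' + '6.1' -> '32.6.1'."""
    return f"{fields['RELEASE']}.{fields['REVISION']}"
```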

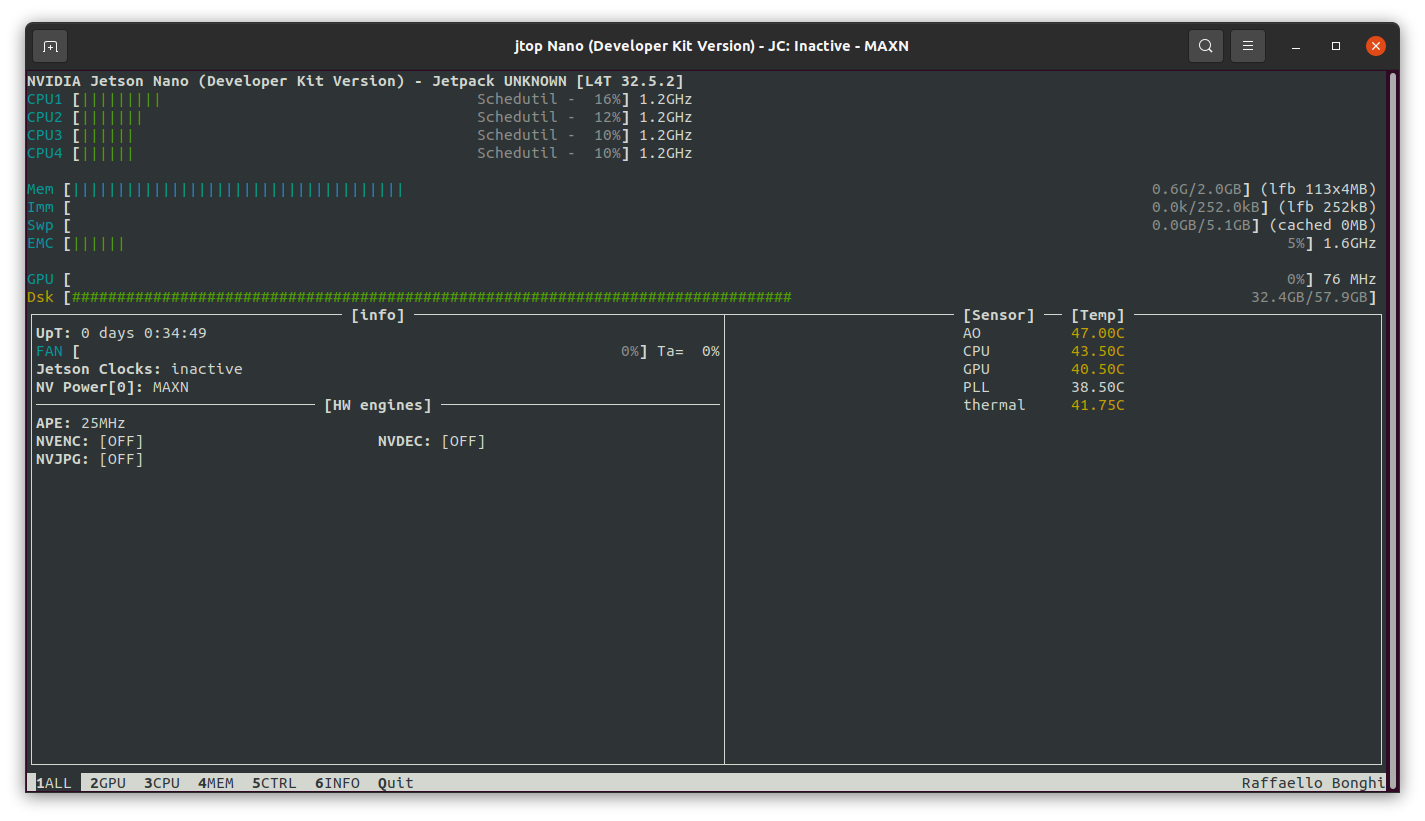

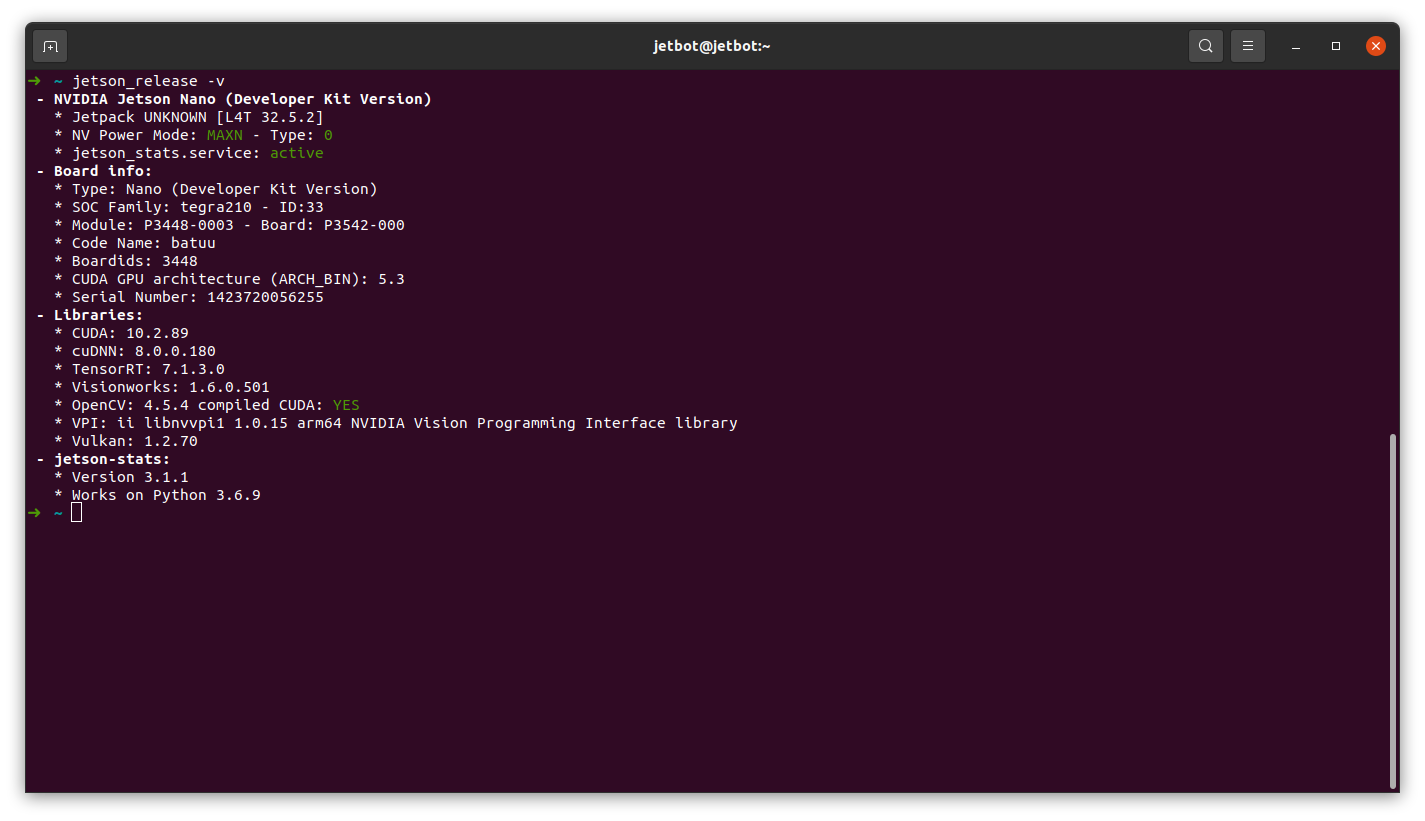

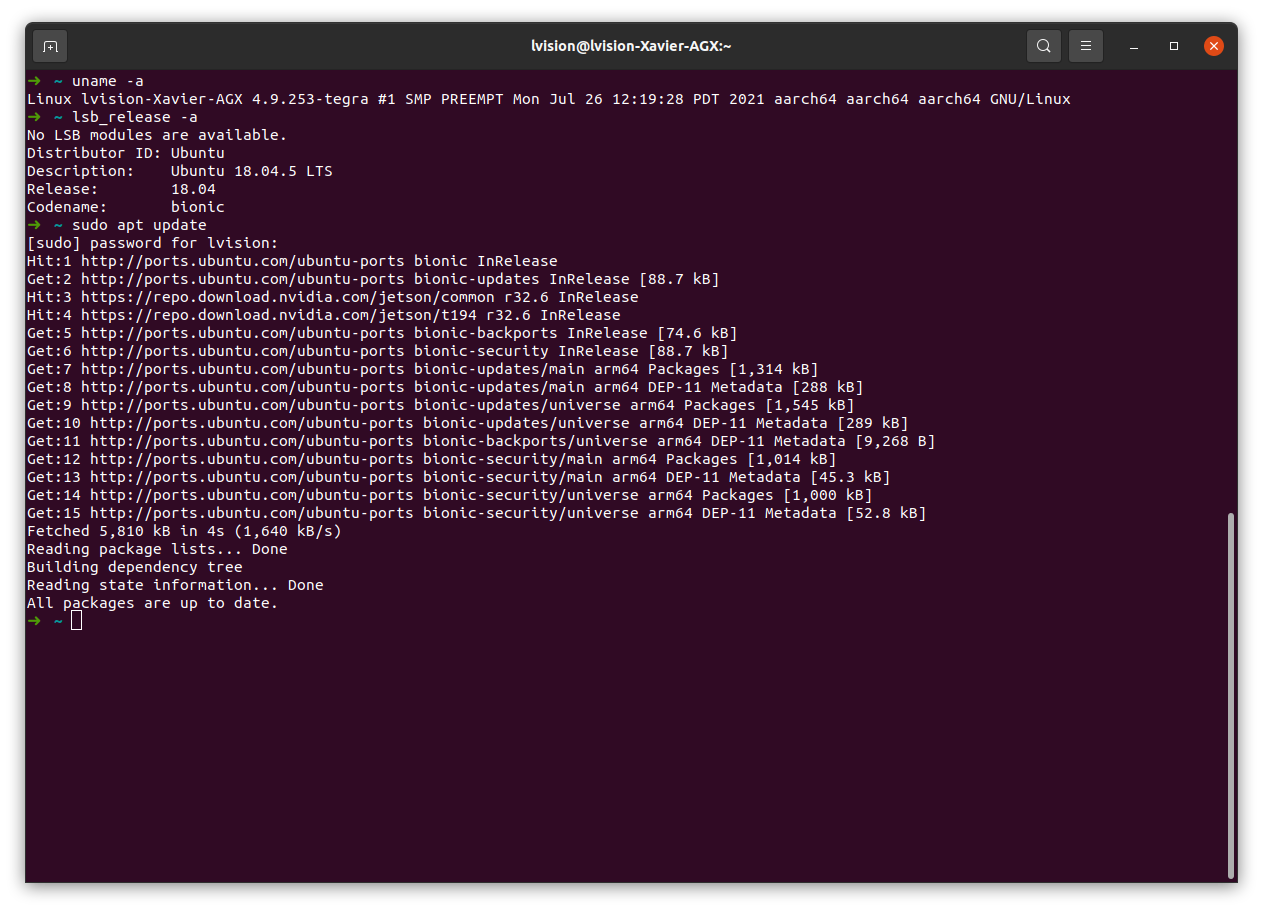

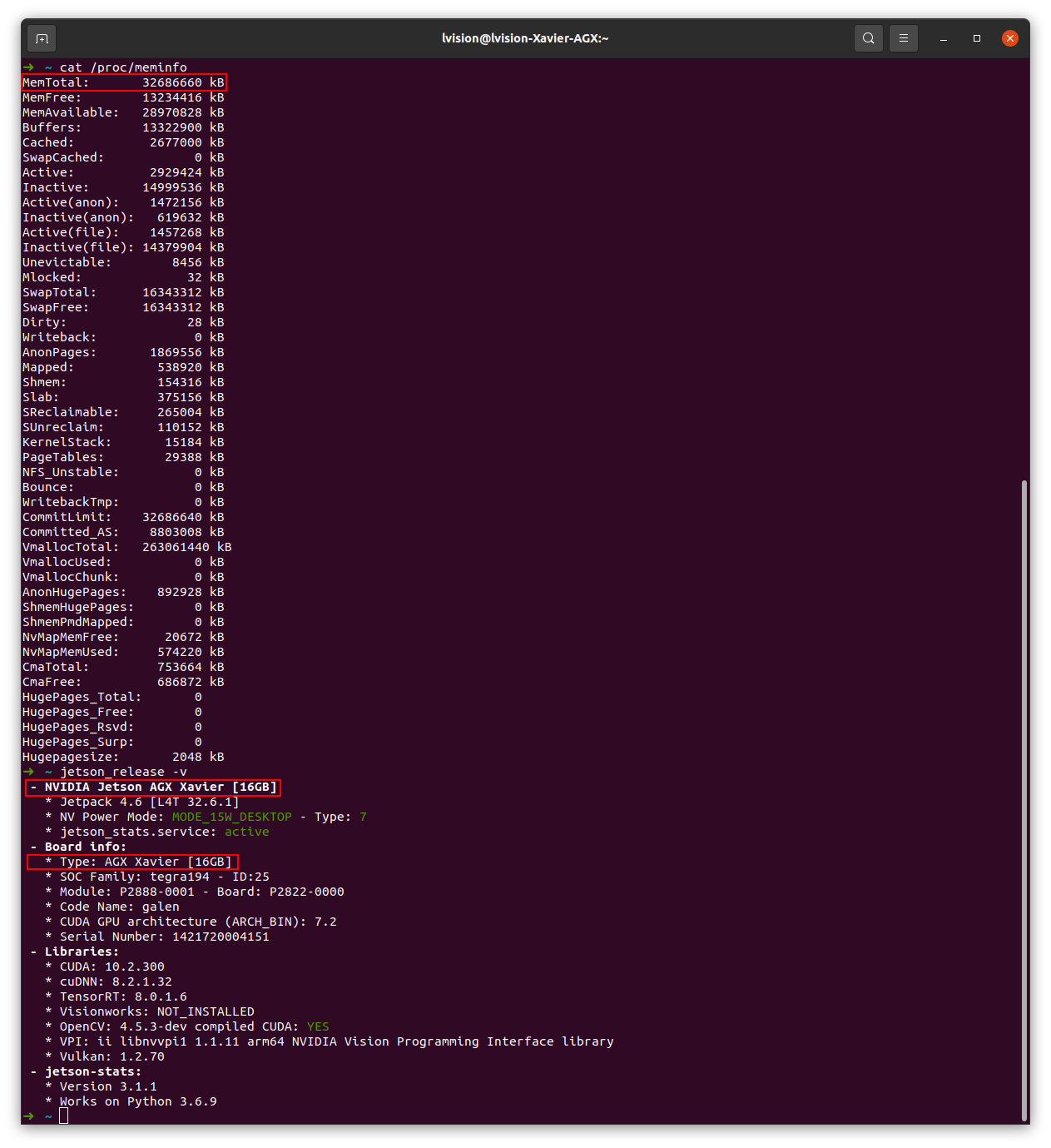

After having:

- upgraded ALL upgradable packages
- had CUDA, cuDNN, TensorRT, VPI and pip installed
- manually built and installed Jetson stats
- manually built and installed OpenCV 4.5.3 with CUDA 10.2 (provided with JetPack 4.6)

I got the following result:

By default,

CUDA, cuDNN, TensorRT, Visionworks,

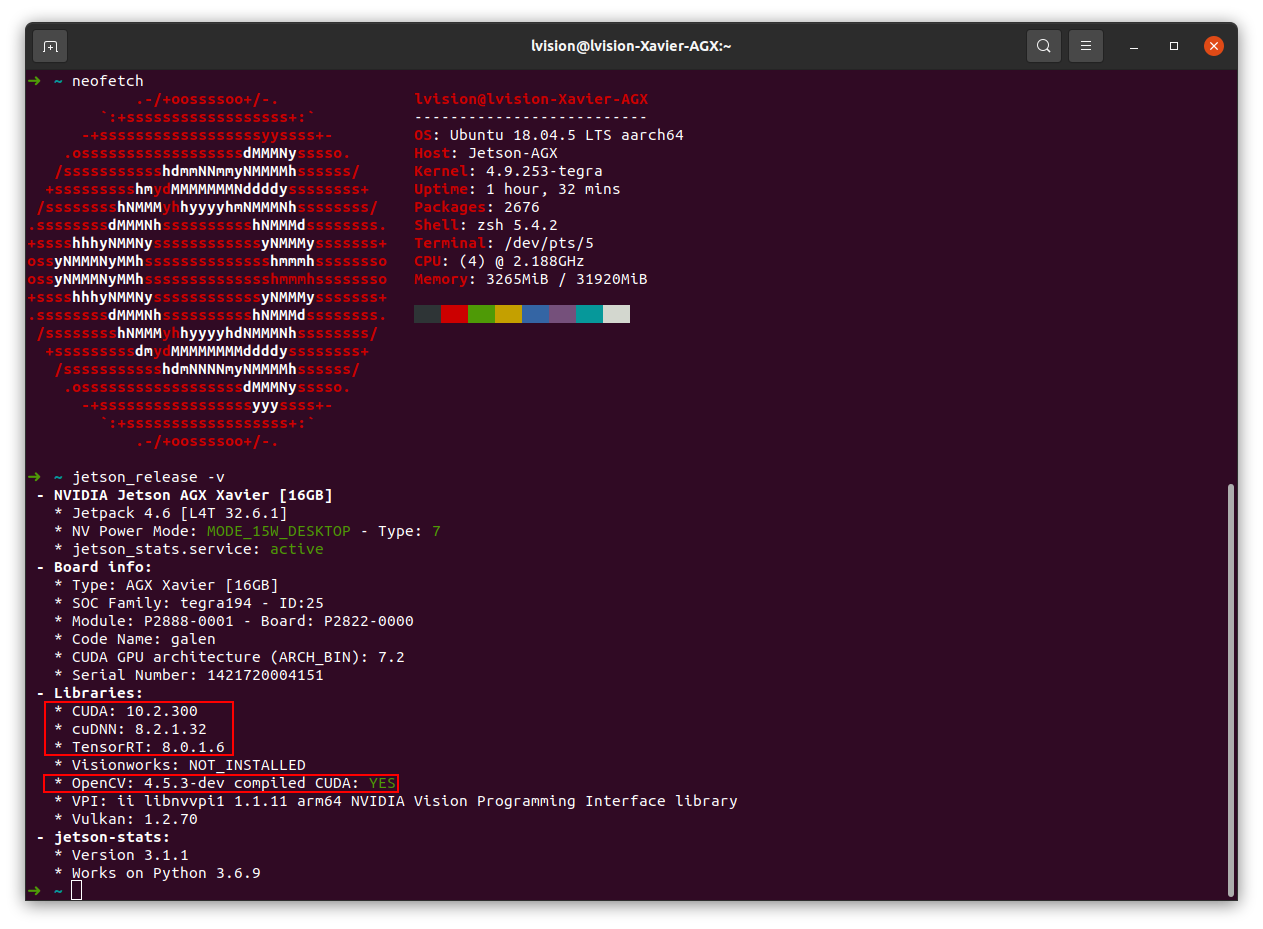

OpenCV, VPI are NOT installed with the above flashing. NOT hard to install CUDA, cuDNN, TensorRT, VPI How come the total memory size from command

cat /proc/meminfo and jetson_release -v are of

different values?
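One likely contributor to the discrepancy is how the figure is counted. According to the Linux kernel's /proc documentation, MemTotal in /proc/meminfo is the total usable RAM. That means physical RAM minus some reserved memory and the kernel binary. It is also reported in "kB", which here means 1024 bytes, so it comes out below the board's nominal RAM size. How jetson_release computes its own figure is not verified here. The sketch below reads MemTotal and converts it to binary and decimal gigabytes. The helper names are hypothetical.

```python
def read_memtotal_kib(meminfo_text: str) -> int:
    """Return the MemTotal value (in KiB) from the contents of /proc/meminfo."""
    for line in meminfo_text.splitlines():
        if line.startswith("MemTotal:"):
            # Layout: "MemTotal:       <number> kB"; the kernel's "kB" is 1024 bytes
            return int(line.split()[1])
    raise ValueError("MemTotal not found")


def kib_to_gib(kib: int) -> float:
    """Binary gigabytes (2**30 bytes)."""
    return kib / (1024 * 1024)


def kib_to_gb(kib: int) -> float:
    """Decimal gigabytes (10**9 bytes), as commonly used on spec sheets."""
    return kib * 1024 / 1e9
```

On the board itself, pass the contents of /proc/meminfo to read_memtotal_kib. Printing both conversions shows how much of the gap is just GiB versus GB.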

Note: in file README_Massflash.txt:

[!NOTE]: jetson-agx-xavier-devkit 16GB(SKU1) and 32GB(SKU4) are using same configuration. Only differences are BOARDSKU and BOARDREV.

It looks like the following command does NOT flash the board correctly, even with the arguments explicitly specified?

```
➜ Linux_for_Tegra sudo BOARDID=2888 FAB=400 BOARDSKU=0004 BOARDREV=K.0 ./flash.sh jetson-agx-xavier-devkit mmcblk0p1
```

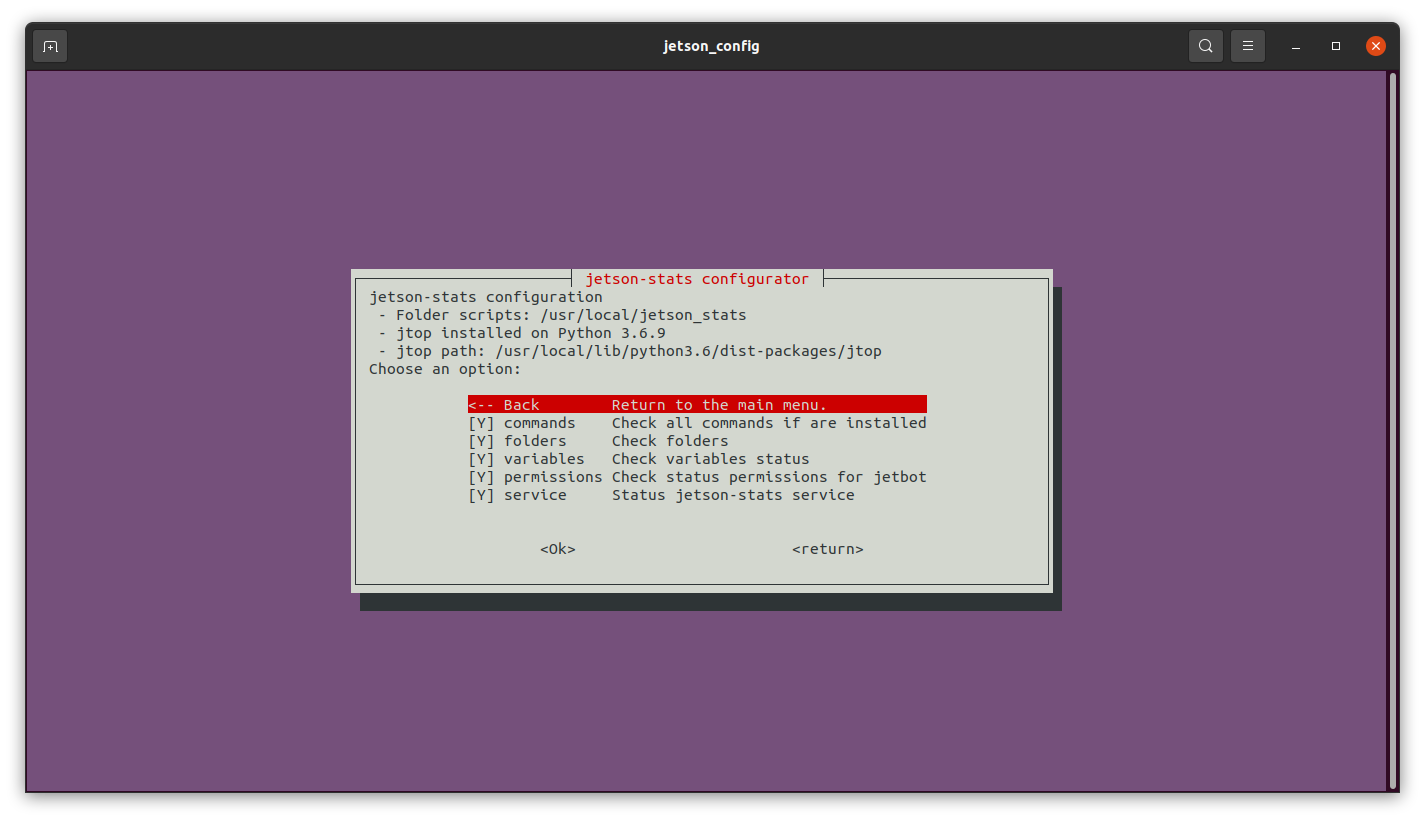

It might be a bug in jetson_stats.

2.2.3 Flash onto SD card

My recommendation is to use an SD card of at least 64GB. The eMMC on my Jetson AGX Xavier is ONLY 32GB, and I failed to build OpenCV on the eMMC a couple of times due to the size limit. However, the Jetson AGX Xavier Developer Kit Carrier Board Specification, available on the Jetson Download Center page, clearly states: "A combo UFS and Micro SD Card Socket (J4) is implemented. The SD card interface supports up to SDR104 mode (UHS-1). The UFS interface supports up to HS-GEAR 3."

That is to say, Jetson AGX Xavier does NOT support SDXC and SDUC (> 32GB), but ONLY supports SD and SDHC (<= 32GB).
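For reference, the SD Association capacity classes are as follows:

- SDSC: up to 2GB
- SDHC: above 2GB, up to 32GB
- SDXC: above 32GB, up to 2TB
- SDUC: above 2TB, up to 128TB

A 64GB card is therefore SDXC. The sketch below implements that classification using decimal units, as printed on card labels; the exact byte boundaries in the specification are approximated. The function name is hypothetical.

```python
GB = 10**9
TB = 10**12


def sd_capacity_class(capacity_bytes: int) -> str:
    """Classify an SD card by capacity (decimal-unit approximation of the SD classes)."""
    if capacity_bytes <= 0:
        raise ValueError("capacity must be positive")
    if capacity_bytes <= 2 * GB:
        return "SDSC"
    if capacity_bytes <= 32 * GB:
        return "SDHC"
    if capacity_bytes <= 2 * TB:
        return "SDXC"
    if capacity_bytes <= 128 * TB:
        return "SDUC"
    raise ValueError("capacity exceeds the SDUC limit")
```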

2.2.4 Use External SD Card to Build OpenCV

As mentioned:

- the unused part of the eMMC on my Jetson AGX Xavier is NOT big enough to build OpenCV;
- SDXC and SDUC cards, which are larger than 32GB, are NOT supported by Jetson AGX Xavier's SD card slot.

So I had to insert my SDXC card into an SD card reader, then plug the reader into a USB hub connected to Jetson AGX Xavier. ONLY this way can we build the required packages in the current Jetson AGX Xavier environment.
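Before starting a long OpenCV build, it can save time to check that the build directory has enough free space. That directory might be a mount point of the USB-attached SDXC card. The sketch below uses shutil.disk_usage. The mount path and the required-space figure in the usage note are hypothetical placeholders, not measured requirements.

```python
import shutil


def has_free_space(path: str, required_gib: float) -> bool:
    """Return True if the filesystem containing `path` has at least `required_gib` GiB free."""
    usage = shutil.disk_usage(path)  # named tuple (total, used, free), in bytes
    return usage.free >= required_gib * 1024**3
```

For example, has_free_space("/media/sdcard", 20) would check a hypothetical mount point against a hypothetical 20 GiB budget before running the build.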

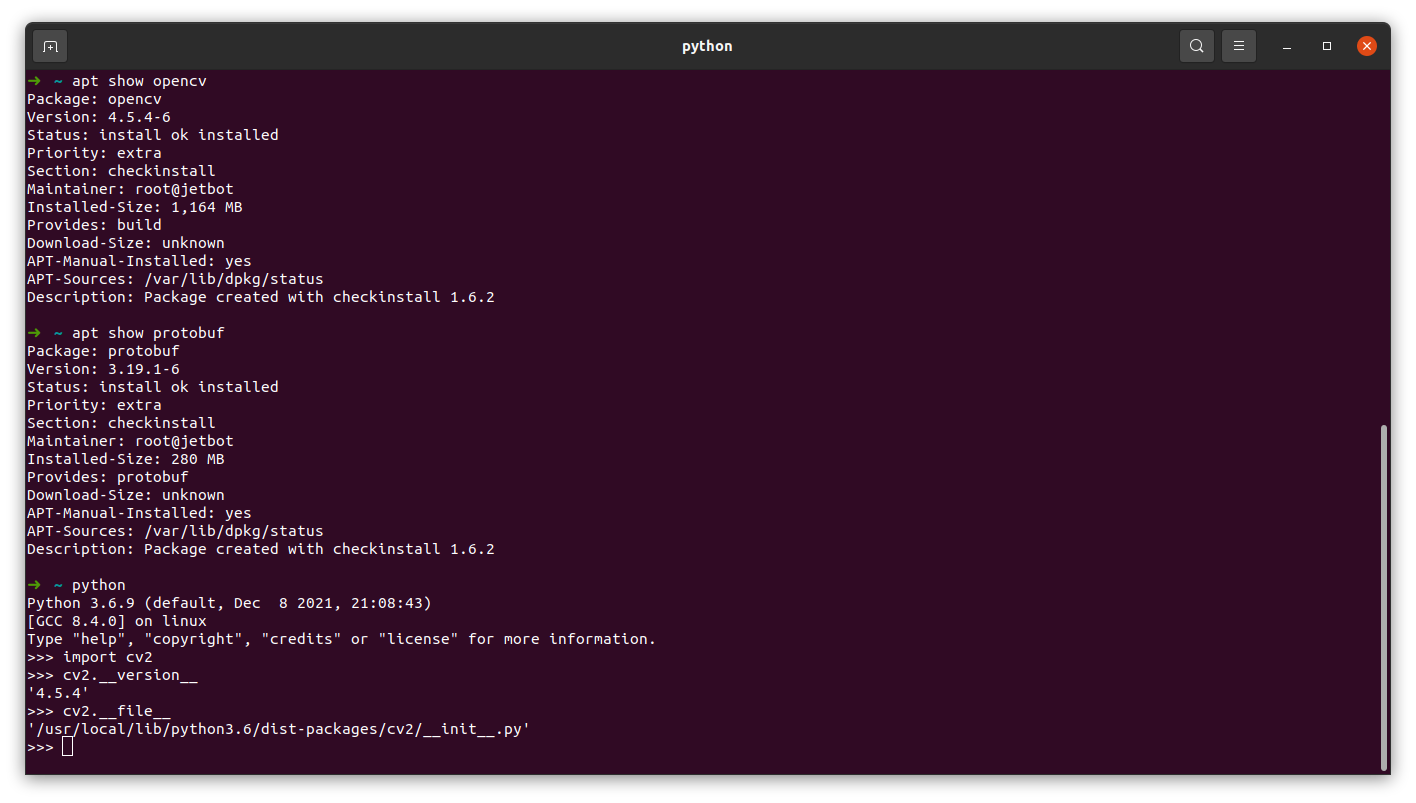

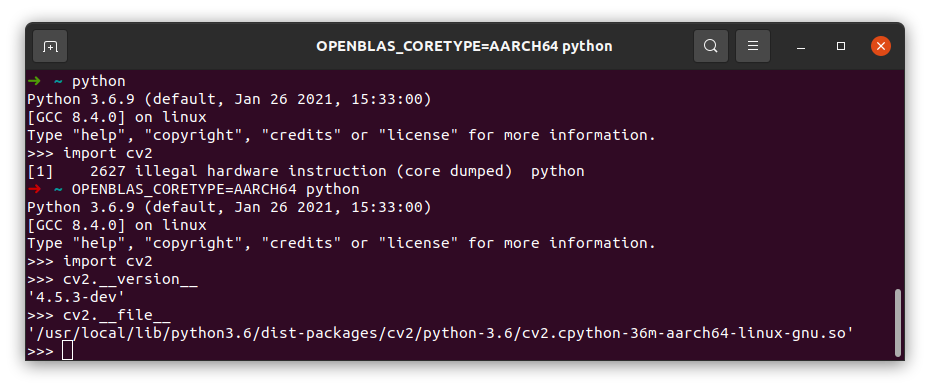

After building and installing OpenCV on the SDXC card in the SD card reader, let's try import cv2 (Python OpenCV).

As you can see, I got a new issue: import cv2 failed for Python OpenCV. This is actually an OpenBLAS issue on this particular hardware. Let's try running Python this way:

OPENBLAS_CORETYPE=AARCH64 python

and now import cv2 succeeds. Finally, my recommendation is to add export OPENBLAS_CORETYPE=AARCH64 to .bashrc or /etc/environment.
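Adding the export line by hand is easy to get wrong, for example by appending it twice. The sketch below appends it only if an identical line is not already present. The helper name is hypothetical. The setting only affects shells started afterwards. As far as I know, OpenBLAS reads the variable when the library is loaded, so it must be set before numpy or cv2 is imported.

```python
from pathlib import Path

EXPORT_LINE = "export OPENBLAS_CORETYPE=AARCH64"


def ensure_line(path: str, line: str = EXPORT_LINE) -> bool:
    """Append `line` to the file at `path` unless an identical line is already there.

    Returns True if the file was modified, False if the line was already present.
    """
    p = Path(path).expanduser()
    text = p.read_text() if p.exists() else ""
    if line in text.splitlines():
        return False
    # Start on a fresh line if the file does not end with a newline.
    prefix = "" if text == "" or text.endswith("\n") else "\n"
    with p.open("a") as f:
        f.write(prefix + line + "\n")
    return True
```

Usage: ensure_line("~/.bashrc"), then open a new shell.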

If you want this SDXC card to be NOT ONLY extra, larger storage, BUT ALSO bootable, there is a way out: please refer to Jetson AGX is started in the SD card.

3. Build Our Own OS for Jetson on Host Desktop

So far, can I draw a conclusion? Jetson AGX Xavier is NOT well supported. Do I have to build everything from scratch? Then why NOT support some Chinese ARM boards instead, such as Orange Pi, Banana Pi, etc.?

How I miss China!

I want to go back to China.

3.3 Can We Build All Packages with the Newest Release?

From the L4T Documentation Overview, we notice that NONE of the key packages supported on Jetson boards are up to date, probably for the sake of compatibility on Jetson? Anyway, let me give it a try.
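To see which packaged components lag behind the newest upstream release, dotted version strings must be compared numerically, not as plain strings. The sketch below handles plain "X.Y.Z" versions only and ignores pre-release or build suffixes. The function names are hypothetical.

```python
from itertools import zip_longest
from typing import Tuple


def version_tuple(version: str) -> Tuple[int, ...]:
    """'4.5.3' -> (4, 5, 3). Assumes purely numeric, dot-separated components."""
    return tuple(int(part) for part in version.split("."))


def is_outdated(installed: str, latest: str) -> bool:
    """True if `installed` is strictly older than `latest` (missing parts count as 0)."""
    a, b = version_tuple(installed), version_tuple(latest)
    for x, y in zip_longest(a, b, fillvalue=0):
        if x != y:
            return x < y
    return False
```

For example, is_outdated("10.2", "9.9") is False. A plain string comparison would wrongly rank "10.2" below "9.9".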

3.3.1 Jetson Board Support Package