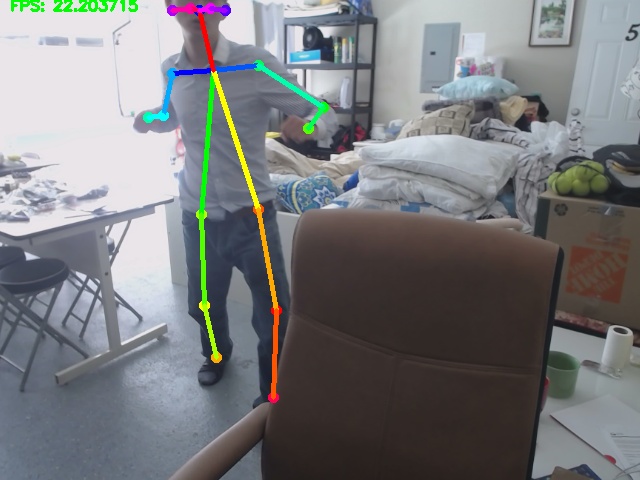

Busy again, so here is just a simple test of posture recognition. :laughing:

Yup… it is ME again, several minutes ago…

Tensorflow & Keras - Build from Source

Tensorflow is always problematic to build, particularly for guys like me…

1. Configuration

Linux Kernel 4.17.3 + Ubuntu 18.04 + GCC 7.3.0 + Python

```bash
$ uname -r
$ lsb_release -a
$ gcc --version
$ python --version
```

2. Install Bazel

Following the guide Installing Bazel on Ubuntu on Bazel’s official website:

```bash
$ echo "deb [arch=amd64] http://storage.googleapis.com/bazel-apt stable jdk1.8" | sudo tee /etc/apt/sources.list.d/bazel.list
```

The current Bazel release, 0.15.0, will be installed.

3. Let’s Build Tensorflow from Source

3.1 Configuration

```bash
$ ./configure
```

3.2 FIRST Bazel Build

Follow Installing TensorFlow from Sources on Tensorflow’s official website, prepare all required packages, and run:

```bash
bazel build --config=opt --config=cuda //tensorflow/tools/pip_package:build_pip_package
```

You will hit several ERROR messages, which require the following modifications.

3.3 Modifications

- File ~/.cache/bazel/_bazel_jiapei/051cd94cedc722db8c69e42ce51064b5/external/jpeg/BUILD:

```
config_setting(
```

- Two symbolic links:

```bash
$ sudo ln -s /usr/local/cuda/include/crt/math_functions.hpp /usr/local/cuda/include/math_functions.hpp
```

3.4 Bazel Build Again

```bash
bazel build --config=opt --config=cuda //tensorflow/tools/pip_package:build_pip_package
```

It takes around 30 minutes for Tensorflow to build successfully.

```
......
```

4. Tensorflow Installation

4.1 Build the pip File

```bash
$ bazel-bin/tensorflow/tools/pip_package/build_pip_package /tmp/tensorflow_pkg
```

Let’s have a look at what’s been built:

```bash
$ ls /tmp/tensorflow_pkg/
```

4.2 Pip Installation

Now, let’s install tensorflow-1.9.0rc0-cp36-cp36m-linux_x86_64.whl.

```bash
$ pip3 install /tmp/tensorflow_pkg/tensorflow-1.9.0rc0-cp36-cp36m-linux_x86_64.whl
```

Let’s test whether Tensorflow has been installed successfully.

4.3 Check Tensorflow

```bash
$ python
```
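The interactive session above is cut short in this post. As a stand-in, here is a minimal sketch (not the original check) that imports a package by name and reports its `__version__`. `check_package` is a hypothetical helper that returns `None` when the package cannot be found at all.

```python
import importlib
import importlib.util


def check_package(name):
    """Return the version string of an importable package, or None if it is missing."""
    if importlib.util.find_spec(name) is None:
        return None
    module = importlib.import_module(name)
    # Most packages (Tensorflow included) expose __version__; fall back otherwise.
    return getattr(module, "__version__", "unknown")


if __name__ == "__main__":
    version = check_package("tensorflow")
    print("Tensorflow:", version if version else "NOT installed")
```

If the wheel above was installed into the same interpreter, this prints its version string. `None` usually means `python` and `pip3` point to different Python installations.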

5. Keras Installation

After checking out the Keras source code, we can easily install Keras with the command python setup.py install.

```bash
$ python setup.py install
```

That’s all for today. I think Python is seriously cool and handy indeed. Personally, I still recommend PyTorch, but Tensorflow and Keras seem to be very popular in North America as well.

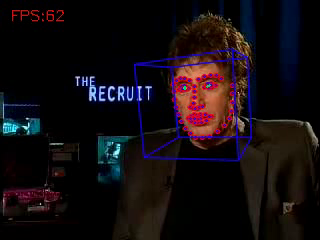

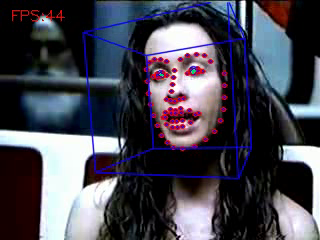

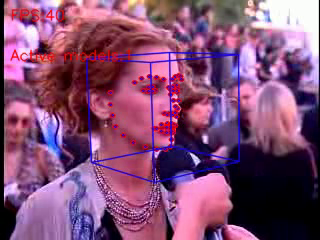

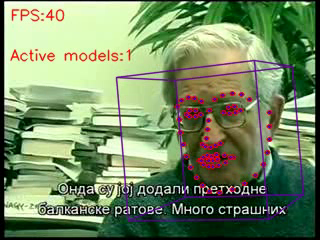

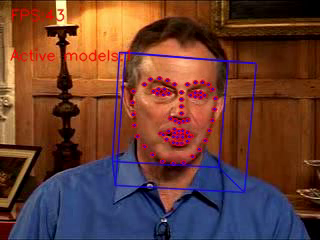

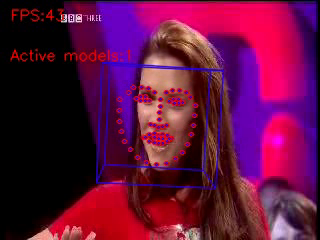

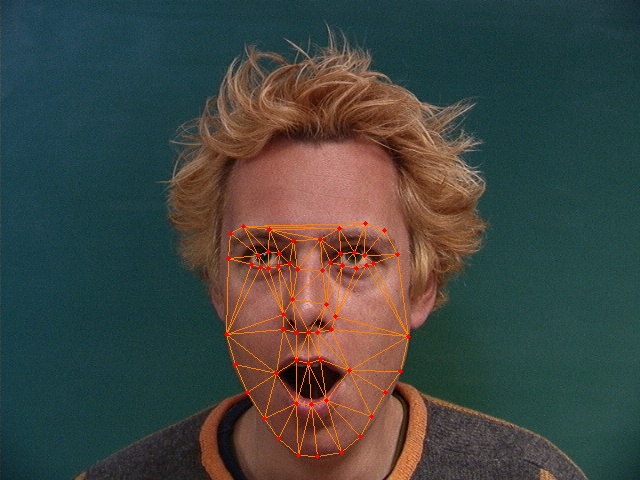

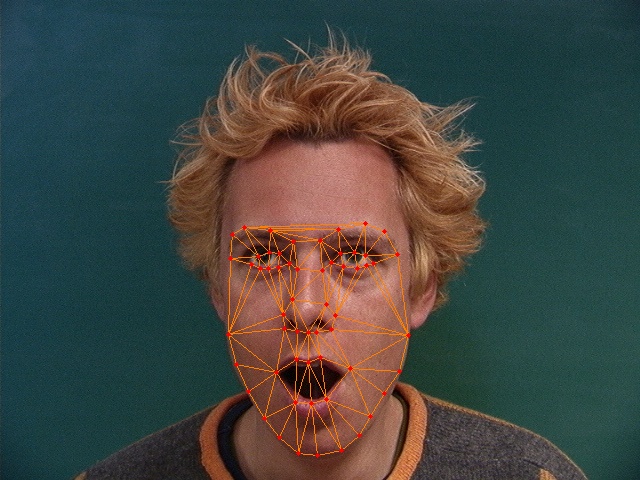

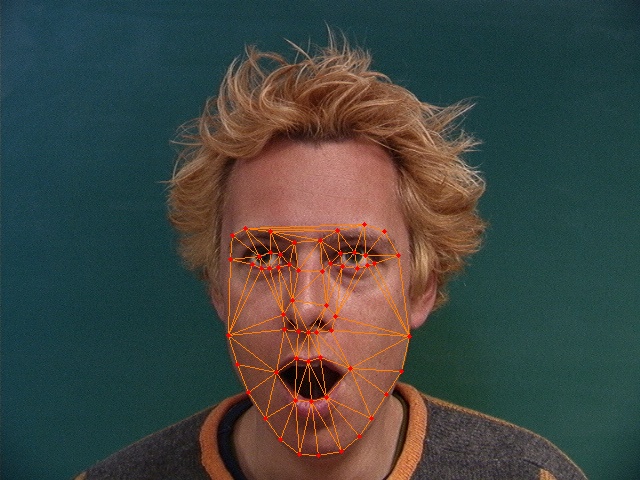

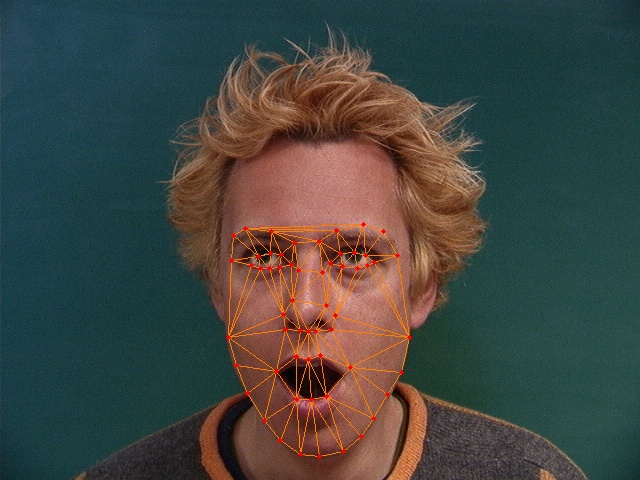

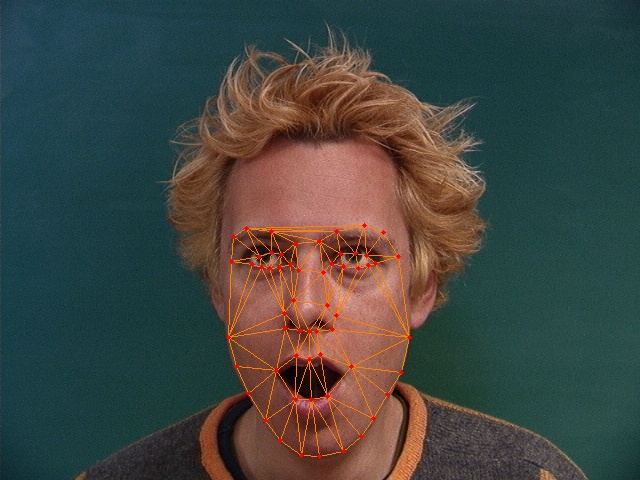

Face Key Point Localization & Orientation

I’m quite busy today, so I’ll just post some videos to show the performance of… well, I just won’t tell you what :laughing:

Click on the pictures to open an uploaded Facebook video.

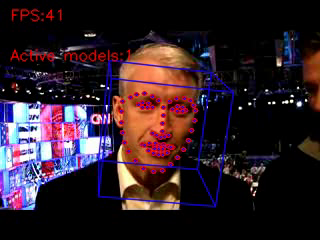

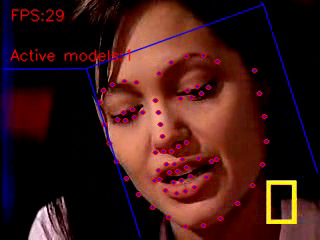

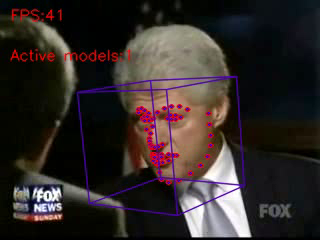

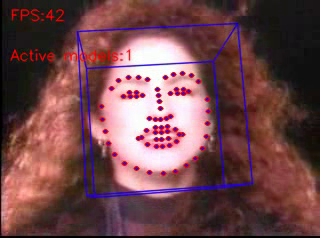

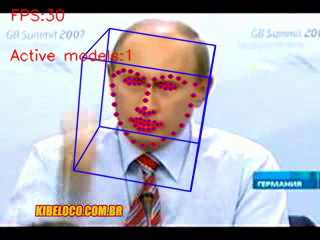

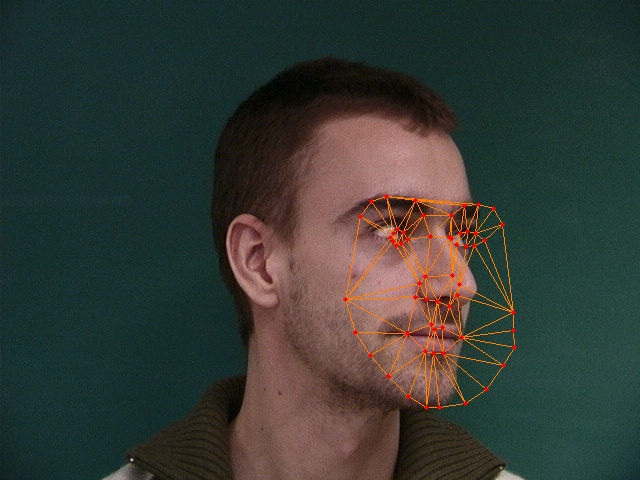

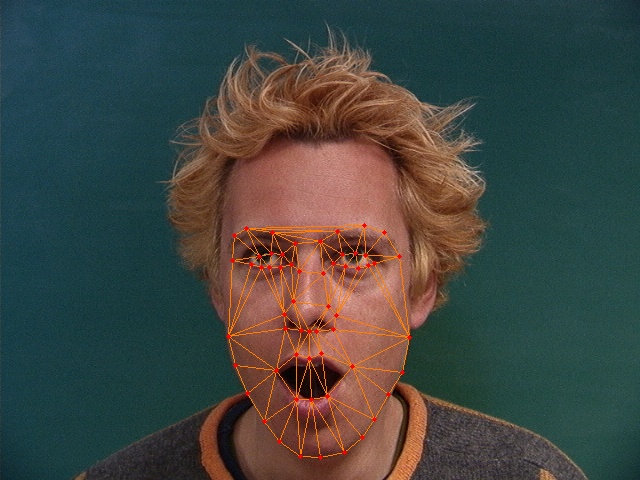

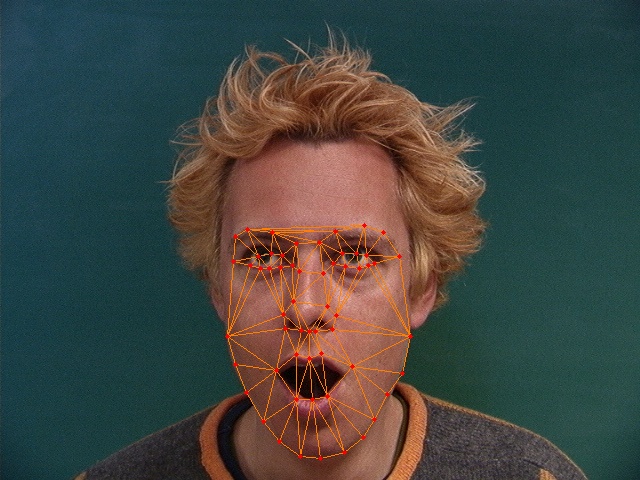

1. Key Point Localization

1.1 Nobody - Yeah, it’s ME, 10 YEARS ago. How time flies…

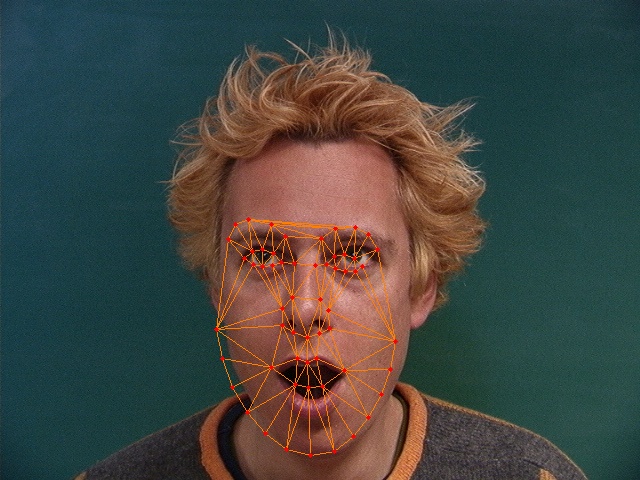

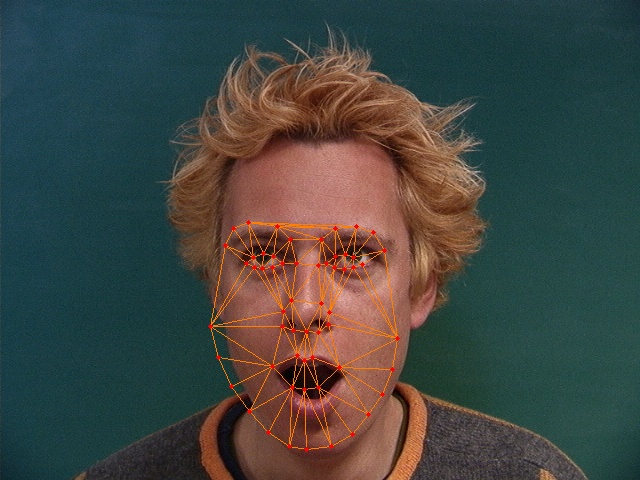

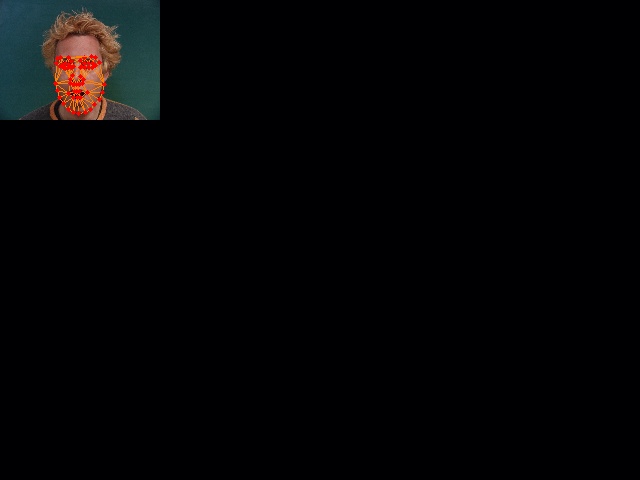

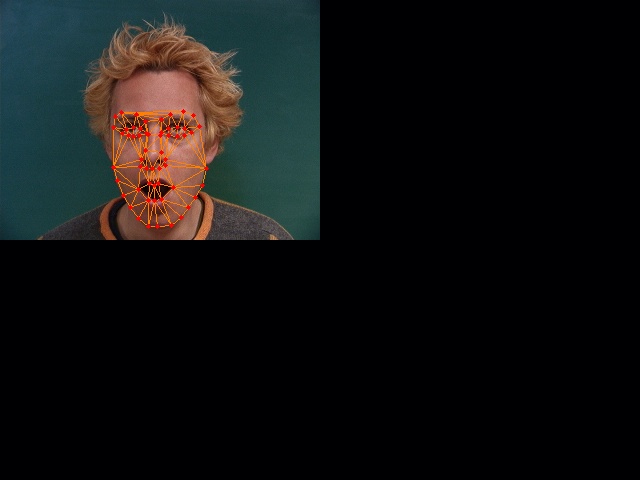

1.2 FRANCK - What a Canonical Annotated Face Dataset

2. Orientation

ImageAI - Happy Canada Day

Yesterday was Canada Day… ^_^ Happy Canada Day, everybody…

I just noticed this news about ImageAI today, so I tested it for fun. I don’t want to talk about ImageAI too much. You can follow the author’s GitHub, and it shouldn’t be hard to get everything done in minutes.

1. Preparation

1.1 Prerequisite Dependencies

As described on ImageAI’s GitHub, multiple Python dependencies need to be installed:

- Tensorflow

- Numpy

- SciPy

- OpenCV

- Pillow

- Matplotlib

- h5py

- Keras

All packages can be easily installed with the command:

```bash
pip3 install PackageName
```
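One catch: several of these packages are installed under a different name from the one you import. OpenCV is installed with `pip3 install opencv-python` but imported as `cv2`, and Pillow is imported as `PIL`. The sketch below assumes these usual PyPI names. It lists which dependencies are still missing and prints the matching `pip3` command. `missing_packages` and `IMAGEAI_DEPENDENCIES` are hypothetical names.

```python
import importlib.util

# PyPI name -> module name used in "import ..." (assumed usual names).
IMAGEAI_DEPENDENCIES = {
    "tensorflow": "tensorflow",
    "numpy": "numpy",
    "scipy": "scipy",
    "opencv-python": "cv2",
    "pillow": "PIL",
    "matplotlib": "matplotlib",
    "h5py": "h5py",
    "keras": "keras",
}


def missing_packages(dependencies):
    """Return the pip names whose module cannot be found (nothing is imported)."""
    return [pip_name for pip_name, module in dependencies.items()
            if importlib.util.find_spec(module) is None]


if __name__ == "__main__":
    missing = missing_packages(IMAGEAI_DEPENDENCIES)
    if missing:
        print("pip3 install " + " ".join(missing))
    else:
        print("All ImageAI dependencies are installed.")
```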

Afterwards, ImageAI can be installed with a single command:

```bash
pip3 install https://github.com/OlafenwaMoses/ImageAI/releases/download/2.0.1/imageai-2.0.1-py3-none-any.whl
```

1.2 CNN Models

Two models are adopted, for the prediction and detection examples respectively:

- resnet50_weights_tf_dim_ordering_tf_kernels.h5 - can be downloaded from fchollet’s deep-learning-models

- resnet50_coco_best_v2.1.0.h5 - can be downloaded from fizyr’s keras-retinanet

2. Examples

2.1 Prediction

Simple examples are given at https://github.com/OlafenwaMoses/ImageAI/tree/master/imageai/Prediction.

I modified FirstPrediction.py a bit as follows:

```python
from imageai.Prediction import ImagePrediction
```
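The rest of the modified script is not shown here. ImageAI’s published prediction examples call `predictImage(...)` and get back two parallel lists: class names, and their probabilities in percent. Assuming that output shape, a hypothetical helper for printing the top results in ranked order might look like this:

```python
def format_predictions(predictions, probabilities, top_k=3):
    """Return up to top_k "label : probability" strings, most likely first.

    Assumes two parallel lists (as in ImageAI's predictImage examples):
    class labels and their probabilities in percent.
    """
    ranked = sorted(zip(predictions, probabilities), key=lambda pair: pair[1], reverse=True)
    return [f"{label} : {prob:.2f}" for label, prob in ranked[:top_k]]


if __name__ == "__main__":
    # Made-up values for illustration only, not real ImageAI output.
    labels = ["sports_car", "convertible", "racer"]
    scores = [21.5, 52.4, 9.8]
    for line in format_predictions(labels, scores, top_k=2):
        print(line)
```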

For the 1st image:

From bash, you will get:

```bash
$ python FirstPrediction.py
```

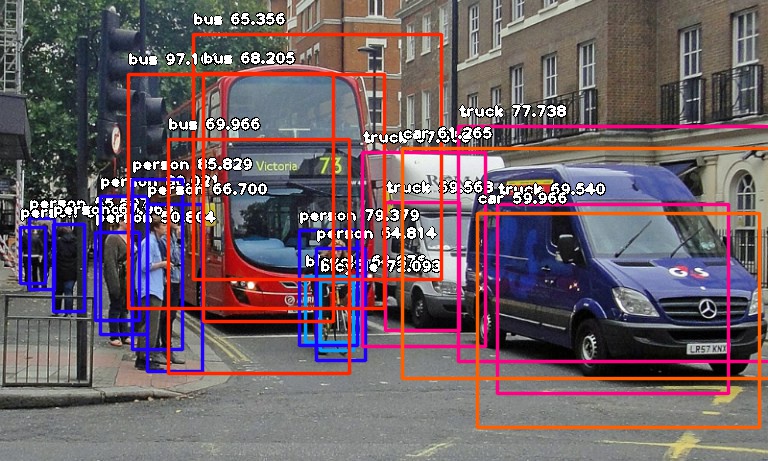

2.2 Detection

Simple examples are given at https://github.com/OlafenwaMoses/ImageAI/tree/master/imageai/Detection.

A trivial modification is also made to FirstObjectDetection.py.

```python
from imageai.Detection import ObjectDetection
```
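ImageAI’s detection examples print the `"name"` and `"percentage_probability"` fields of each detection. Assuming each returned detection is a dict with those two keys, a hypothetical `count_objects` helper can summarize an image as object counts per class, keeping only detections above a confidence threshold:

```python
from collections import Counter


def count_objects(detections, min_probability=50.0):
    """Count detections per class, skipping those below min_probability (percent).

    Each detection is assumed to be a dict with "name" and
    "percentage_probability" keys, as printed in ImageAI's detection examples.
    """
    return Counter(
        d["name"] for d in detections if d["percentage_probability"] >= min_probability
    )


if __name__ == "__main__":
    # Made-up detections for illustration only.
    sample = [
        {"name": "person", "percentage_probability": 91.2},
        {"name": "person", "percentage_probability": 63.0},
        {"name": "dog", "percentage_probability": 42.7},
    ]
    print(count_objects(sample))
```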

2.2.1 For the 2nd image:

From bash, you will get:

```bash
$ python FirstDetection.py
```

And, under the program folder, you will get an output image:

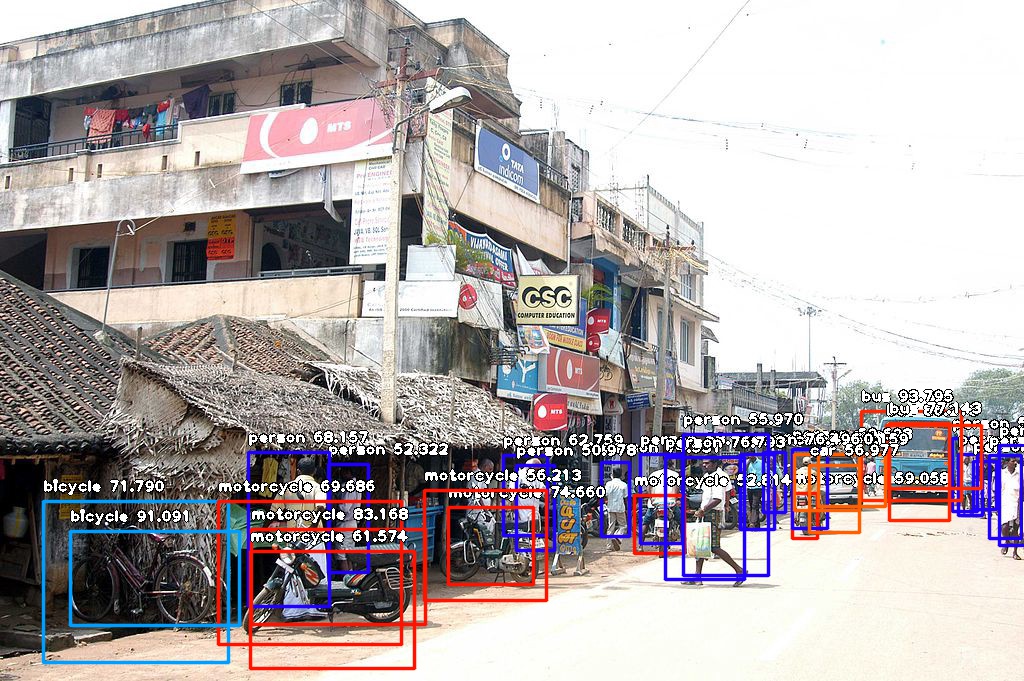

2.2.2 For the 3rd image:

From bash, you will get:

```bash
$ python FirstDetection.py
```

And, under the program folder, you will get an output image:

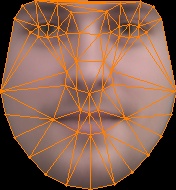

Vision Open Statistic Models

It has been quite a while since my VOSM was last updated. My bad for sure. But today I’ve updated it, and VOSM-0.3.5 is released. Just refer to the following 3 pages on GitHub:

We’ll still explain a bit about how to use VOSM in the following.

1. Building

1.1 Building Commands

Currently, there are 7 types of models to be built, namely, 7 choices for the parameter “-t”:

- SM

- TM

- AM

- IA

- FM

- SMLTC

- SMNDPROFILE

```bash
$ testsmbuilding -o "./output" -a "./annotations/training/" -i "./images/training/" -s "../VOSM/shapeinfo/IMM/ShapeInfo.txt" -d "IMM" -c 1 -t "SM" -l 4 -p 0.95
```
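The example above builds only the `SM` model. The 7 model types differ only in the `-t` value, so a small driver can print or run all 7 commands in the listed order. This sketch copies every other flag from the IMM example above. Whether `-c`, `-l`, and `-p` suit every model type is an assumption, so please check them against VOSM’s own documentation. `building_command` and `build_all` are hypothetical names. Nothing is executed unless `dry_run=False`.

```python
import shlex
import subprocess

# The 7 values accepted by "-t", in the order listed above.
MODEL_TYPES = ["SM", "TM", "AM", "IA", "FM", "SMLTC", "SMNDPROFILE"]


def building_command(model_type):
    """Return the testsmbuilding argument list for one model type.

    All flags except -t are copied from the IMM example (assumption:
    the same values suit every model type).
    """
    return [
        "testsmbuilding",
        "-o", "./output",
        "-a", "./annotations/training/",
        "-i", "./images/training/",
        "-s", "../VOSM/shapeinfo/IMM/ShapeInfo.txt",
        "-d", "IMM",
        "-c", "1",
        "-t", model_type,
        "-l", "4",
        "-p", "0.95",
    ]


def build_all(dry_run=True):
    """Print (and, unless dry_run, run) the building command for every model type."""
    commands = [building_command(t) for t in MODEL_TYPES]
    for cmd in commands:
        print(shlex.join(cmd))
        if not dry_run:
            # check=True stops at the first model that fails to build.
            subprocess.run(cmd, check=True)
    return commands


if __name__ == "__main__":
    build_all(dry_run=True)
```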

1.2 Output Folders

After running these 7 commands, 9 folders will be generated:

- Point2DDistributionModel

- ShapeModel

- TextureModel

- AppearanceModel

- AAMICIA

- AFM

- AXM

- ASMLTCs

- ASMNDProfiles

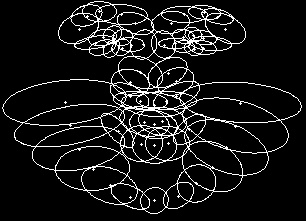

1.3 Output Images

Under folder TextureModel, 3 key images are generated:

| Reference.jpg | edges.jpg | ellipses.jpg |
|---|---|---|

Under folder AAMICIA, another 3 key images are generated:

| m_IplImageTempFace.jpg | m_IplImageTempFaceX.jpg | m_IplImageTempFaceY.jpg |
|---|---|---|

2. Fitting

2.1 Fitting Commands

The current VOSM supports 5 fitting methods:

- ASM_PROFILEND

- ASM_LTC

- AAM_BASIC

- AAM_CMUICIA

- AAM_IAIA

```bash
$ testsmfitting -o "./output/" -t "ASM_PROFILEND" -i "./images/testing/" -a "./annotations/testing/" -d "IMM" -s true -r true
```

2.2 Fitting Results

Let’s just take ASM_PROFILEND as an example.

```bash
$ testsmfitting -o "./output/" -t "ASM_PROFILEND" -i "./images/testing/" -a "./annotations/testing/" -d "IMM" -s true -r true
```

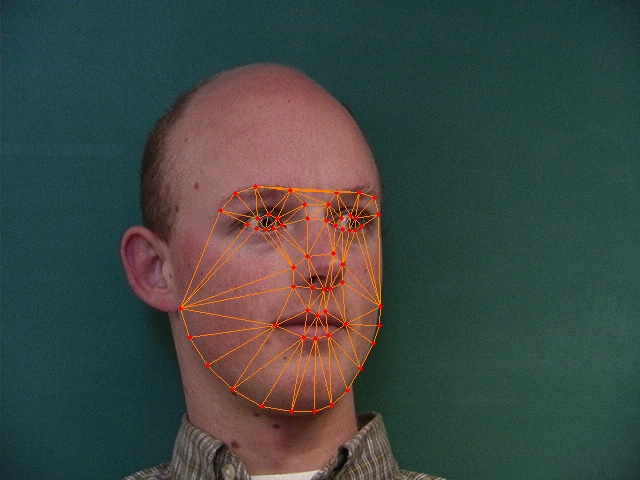

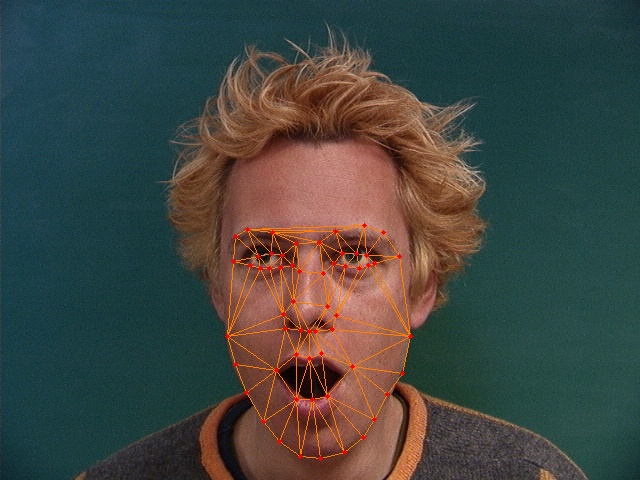

All fitted images are generated under the current folder. Some are well fitted:

| 11-1m.jpg | 33-4m.jpg | 40-6m.jpg |
|---|---|---|

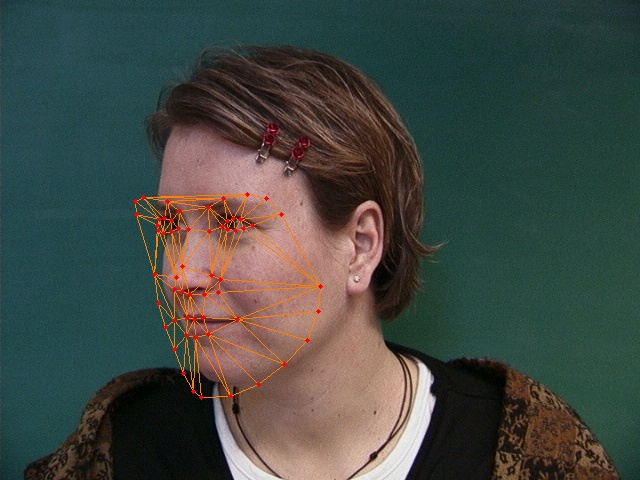

Others are NOT well fitted:

| 12-3f.jpg | 20-6m.jpg | 23-4m.jpg |
|---|---|---|

2.3 Process of Fitting

The fitting process can also be recorded for each image if the parameter “-r” is enabled with -r true. Let’s take a look at what’s in folder 40-6m.

| 00.jpg | 01.jpg | 02.jpg |
|---|---|---|
| 03.jpg | 04.jpg | 05.jpg |
| 06.jpg | 07.jpg | 08.jpg |
| 09.jpg | 10.jpg | 11.jpg |
| 12.jpg | 13.jpg | 14.jpg |
| 15.jpg | 16.jpg | |

Clearly, a multi-resolution image pyramid is adopted during the fitting process.
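VOSM’s own pyramid code is not shown here. As a generic illustration of the idea only, the sketch below builds a pyramid by repeated 2x2 averaging and then runs a coarse-to-fine loop. The shape is fitted at the coarsest level first, and at each finer level its coordinates are doubled and refined. `fit_level` is a hypothetical placeholder for one level’s fitting step, such as a few ASM iterations.

```python
def downsample(image):
    """Halve a grayscale image (list of rows) by averaging each 2x2 block.

    A trailing odd row or column is dropped.
    """
    height = len(image) // 2 * 2
    width = len(image[0]) // 2 * 2
    return [
        [
            (image[r][c] + image[r][c + 1] + image[r + 1][c] + image[r + 1][c + 1]) / 4.0
            for c in range(0, width, 2)
        ]
        for r in range(0, height, 2)
    ]


def build_pyramid(image, levels):
    """Return [full resolution, 1/2, 1/4, ...] with at most `levels` images."""
    pyramid = [image]
    while len(pyramid) < levels and len(pyramid[-1]) >= 2 and len(pyramid[-1][0]) >= 2:
        pyramid.append(downsample(pyramid[-1]))
    return pyramid


def coarse_to_fine(pyramid, initial_shape, fit_level):
    """Fit a list of (x, y) points from the coarsest level down to full resolution.

    initial_shape is in full-resolution coordinates;
    fit_level(image, shape) -> shape is a placeholder for one level's fitting step.
    """
    scale = 2 ** (len(pyramid) - 1)
    shape = [(x / scale, y / scale) for x, y in initial_shape]
    for level in range(len(pyramid) - 1, -1, -1):
        shape = fit_level(pyramid[level], shape)
        if level > 0:
            # Moving to the next finer level doubles every coordinate.
            shape = [(2 * x, 2 * y) for x, y in shape]
    return shape
```

With an identity `fit_level`, the shape returns unchanged. A real fitter would move the points at each level, with the coarse levels correcting large offsets cheaply before the fine levels add detail.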

Use VLC To Download Youtube Videos

Downloading videos from Youtube is sometimes necessary. Some Chrome plugins can do this, such as Youtube Downloader. Other methods can be found in various resources, such as WikiHow.

In this blog post, I’m going to cite (copy and paste) WikiHow’s instructions on how to download Youtube videos using VLC.

STEP 1: Copy Youtube URL

Find the Youtube video that you would like to download, and copy the Youtube URL.

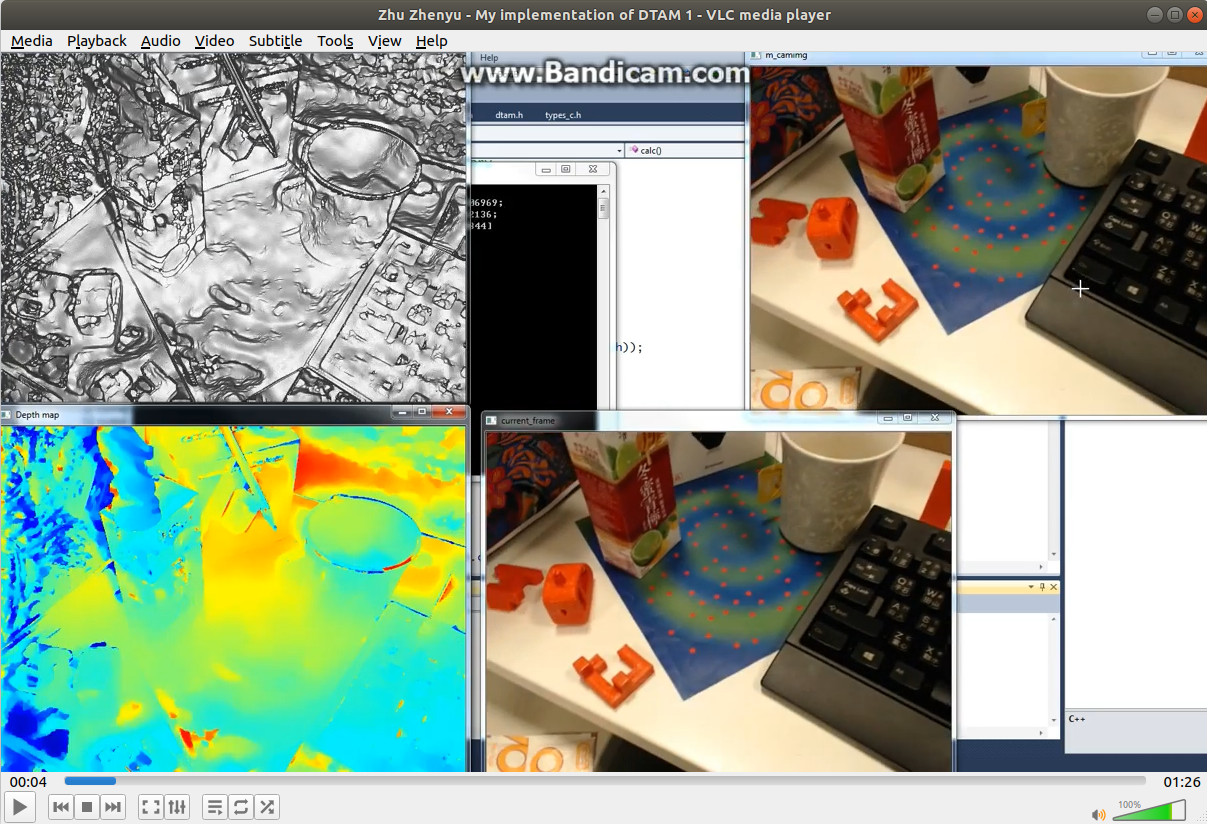

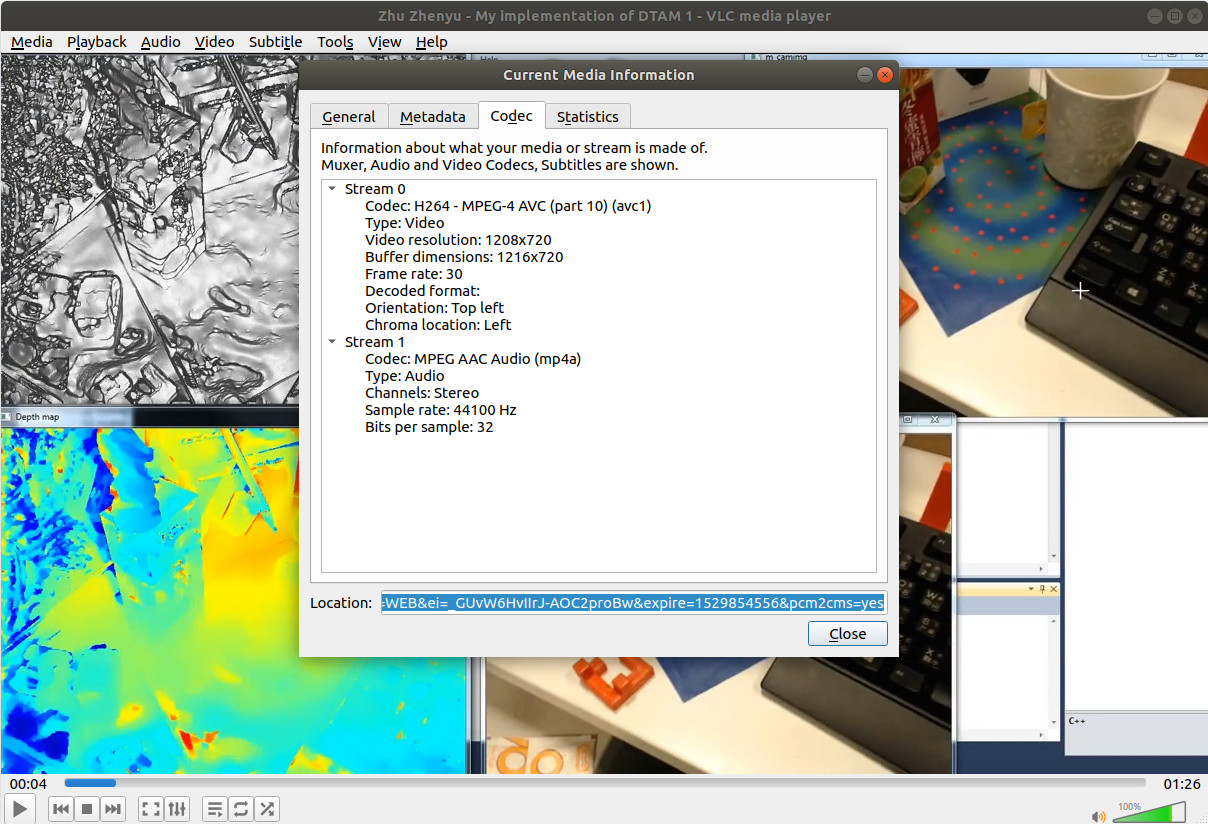

STEP 2: Broadcast Youtube Video in VLC

Paste the URL under VLC Media Player->Media->Open Network Stream->Network Tab->Please enter a network URL:, and click Play:

STEP 3: Get the Real Location of Youtube Video

Then, copy the URL under Tools->Codec Information->Codec->Location:

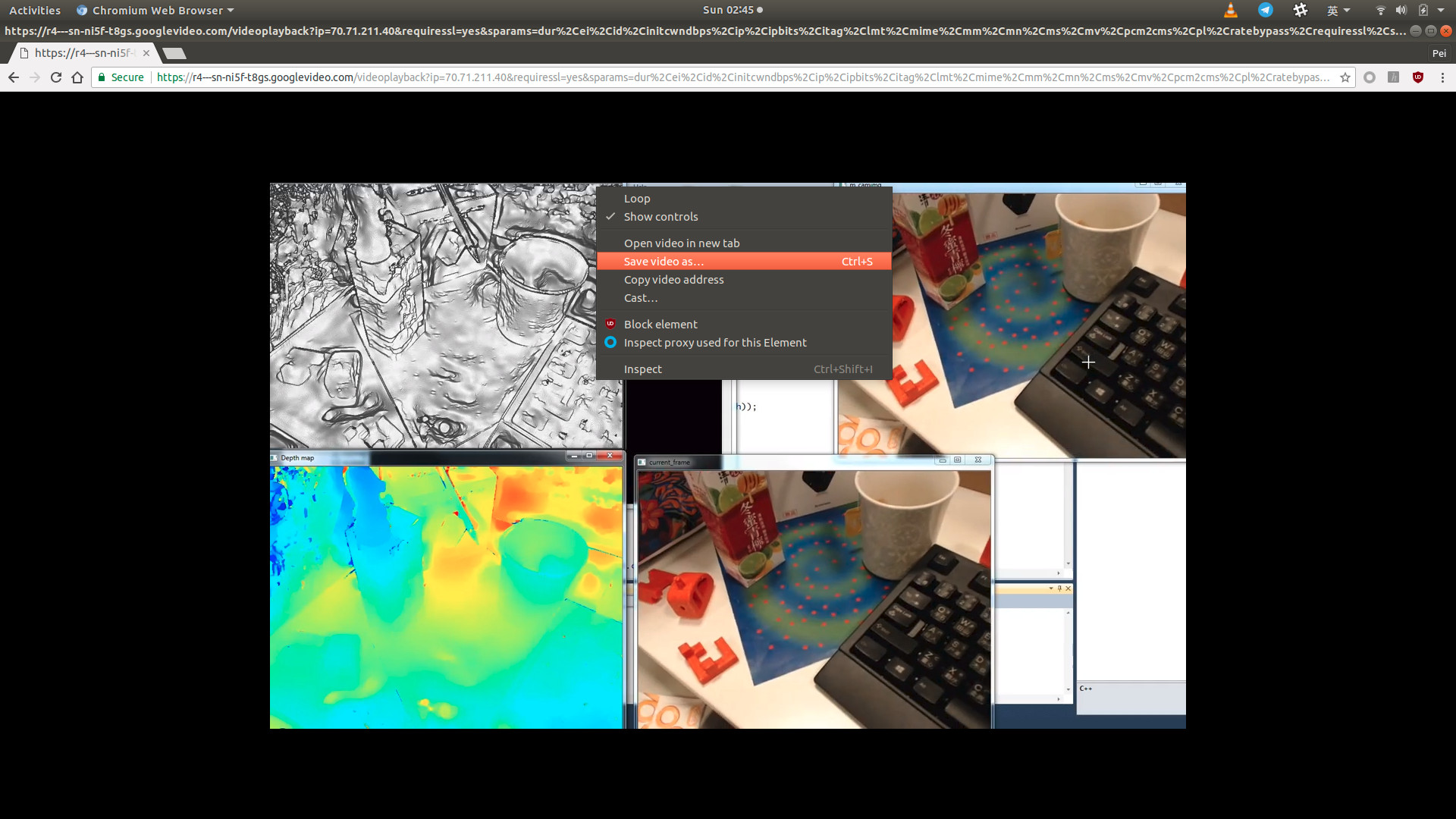

STEP 4: Save Video As

Finally, paste the URL in Chrome:

and Save video as….

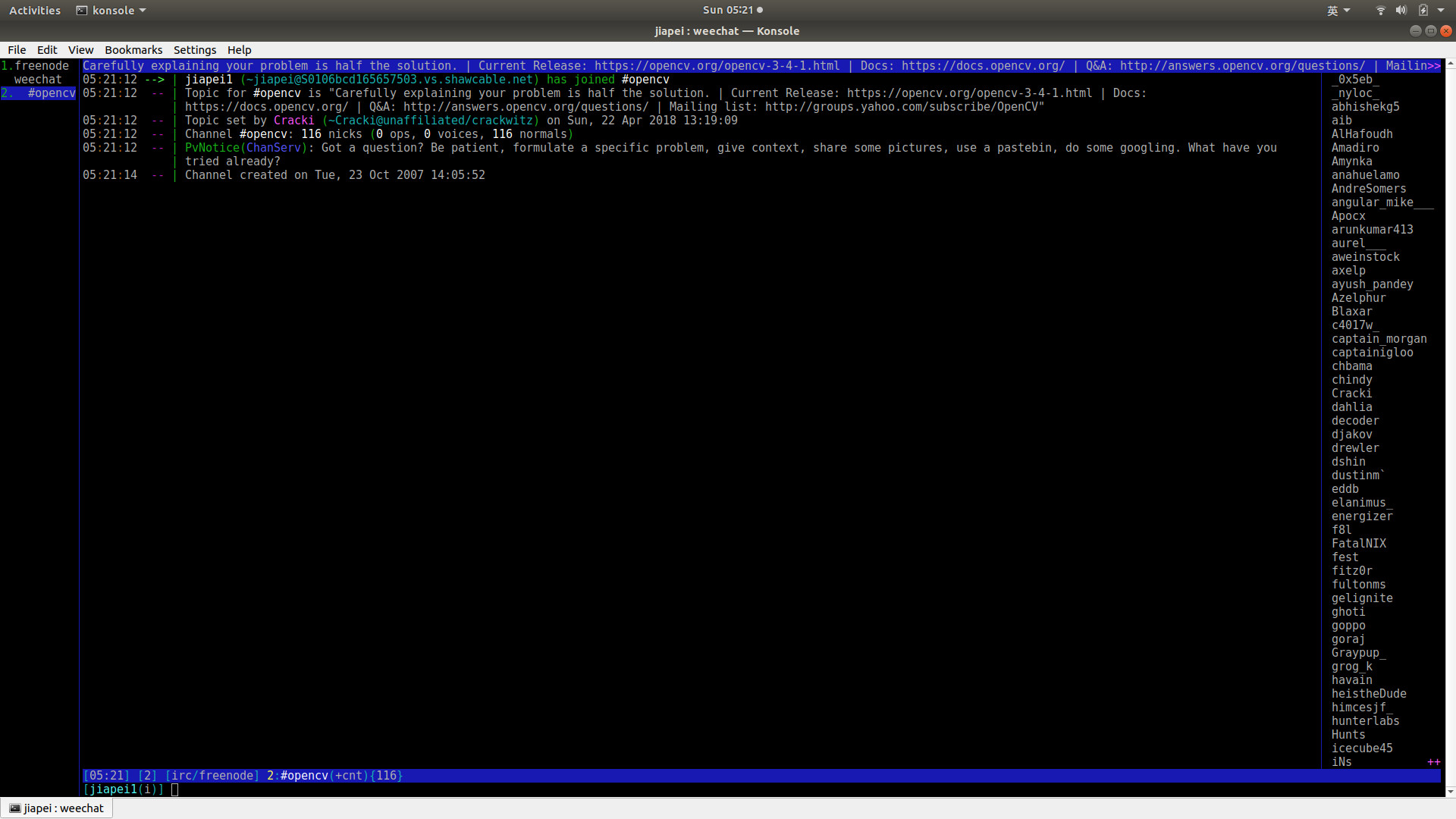

IRC Via WeeChat in Linux

1. IRC and Freenode

People have been using IRC for decades.

Freenode is an IRC network used to discuss peer-directed projects.

cited from Wikipedia.

It’s recommended to read some background on IRC and Freenode on Wikipedia:

2. Popular IRC Clients

Lots of IRC clients can be found on Google. Here, we just summarize some from IRC Wikipedia:

3. 7 Best IRC Clients for Linux

Please just refer to https://www.tecmint.com/best-irc-clients-for-linux/.

4. WeeChat in Linux

4.1 Installation

We can, of course, download the package directly from WeeChat Download and install it from source. However, installing WeeChat from the repository is recommended.

Step 1: Add the WeeChat repository

```bash
$ sudo sh -c 'echo "deb https://weechat.org/ubuntu $(lsb_release -cs) main" >> /etc/apt/sources.list.d/WeeChat.list'
```

Step 2: Add WeeChat repository key

```bash
$ sudo apt-key adv --keyserver keys.gnupg.net --recv-keys 11E9DE8848F2B65222AA75B8D1820DB22A11534E
```

Step 3: WeeChat Installation

```bash
$ sudo apt update
```

4.2 How to use WeeChat

Refer to WeeChat Official Documentation.

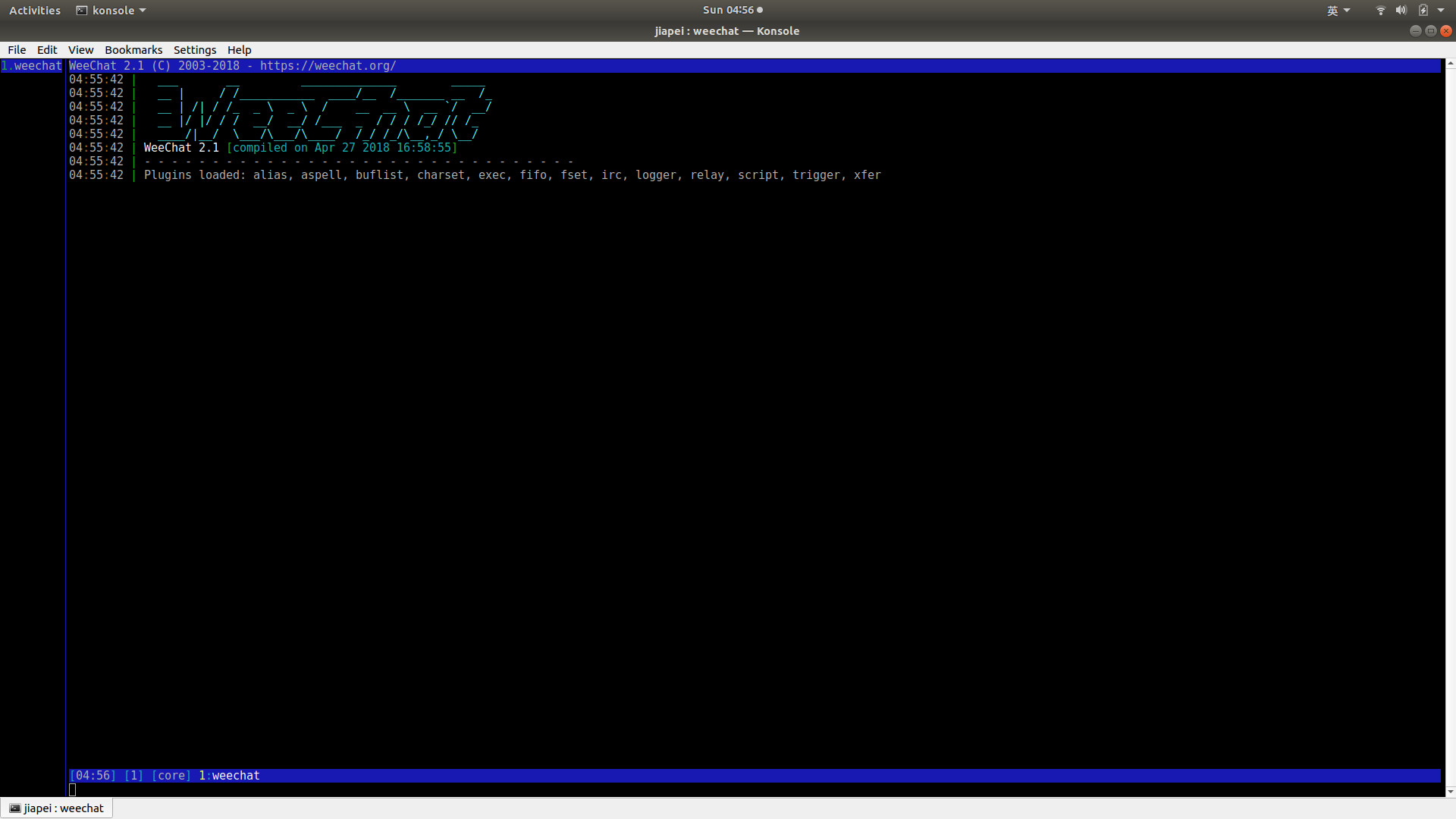

Step 1: Run WeeChat

```bash
$ weechat
```

will display:

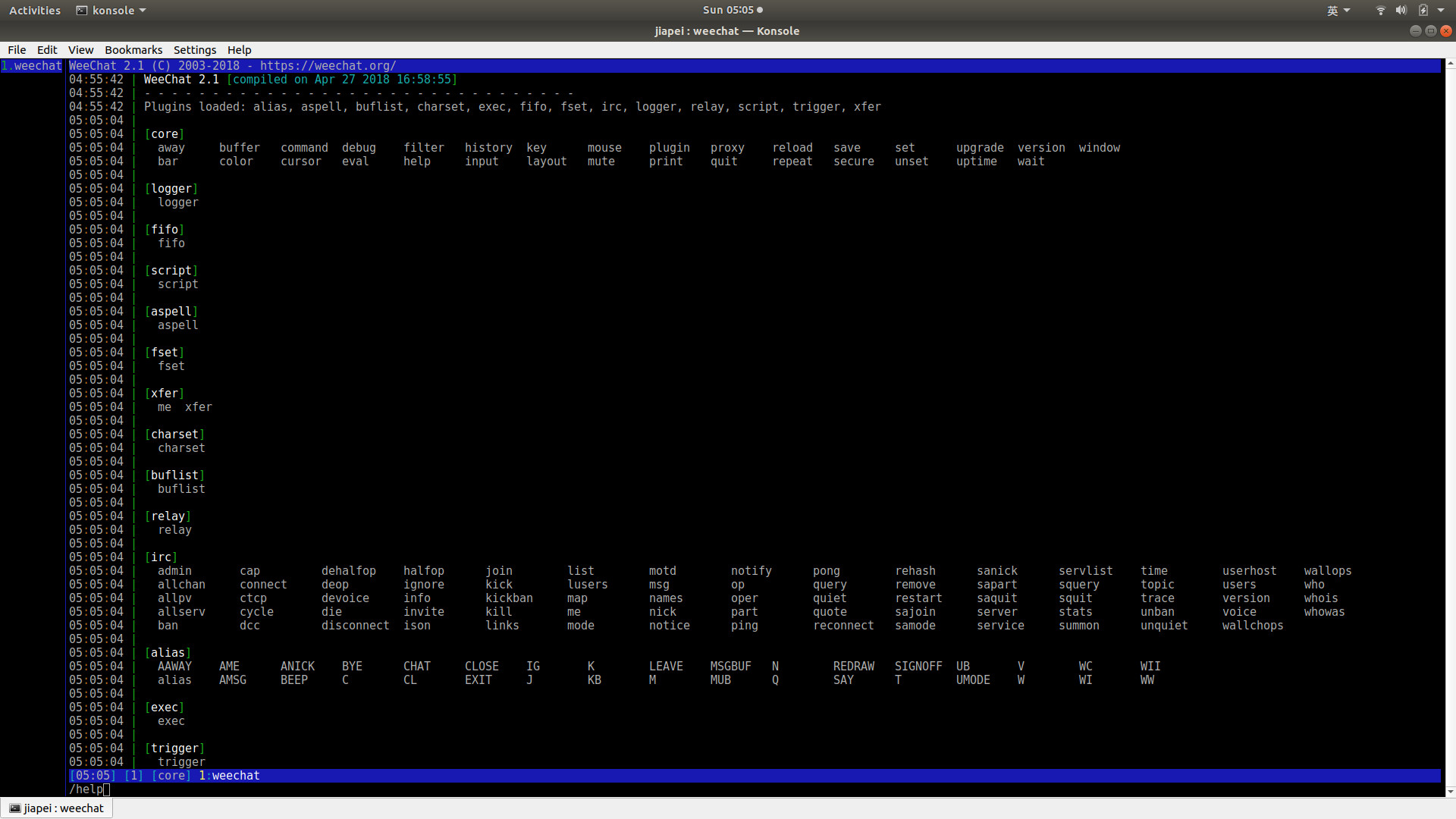

And we FIRST type in /help

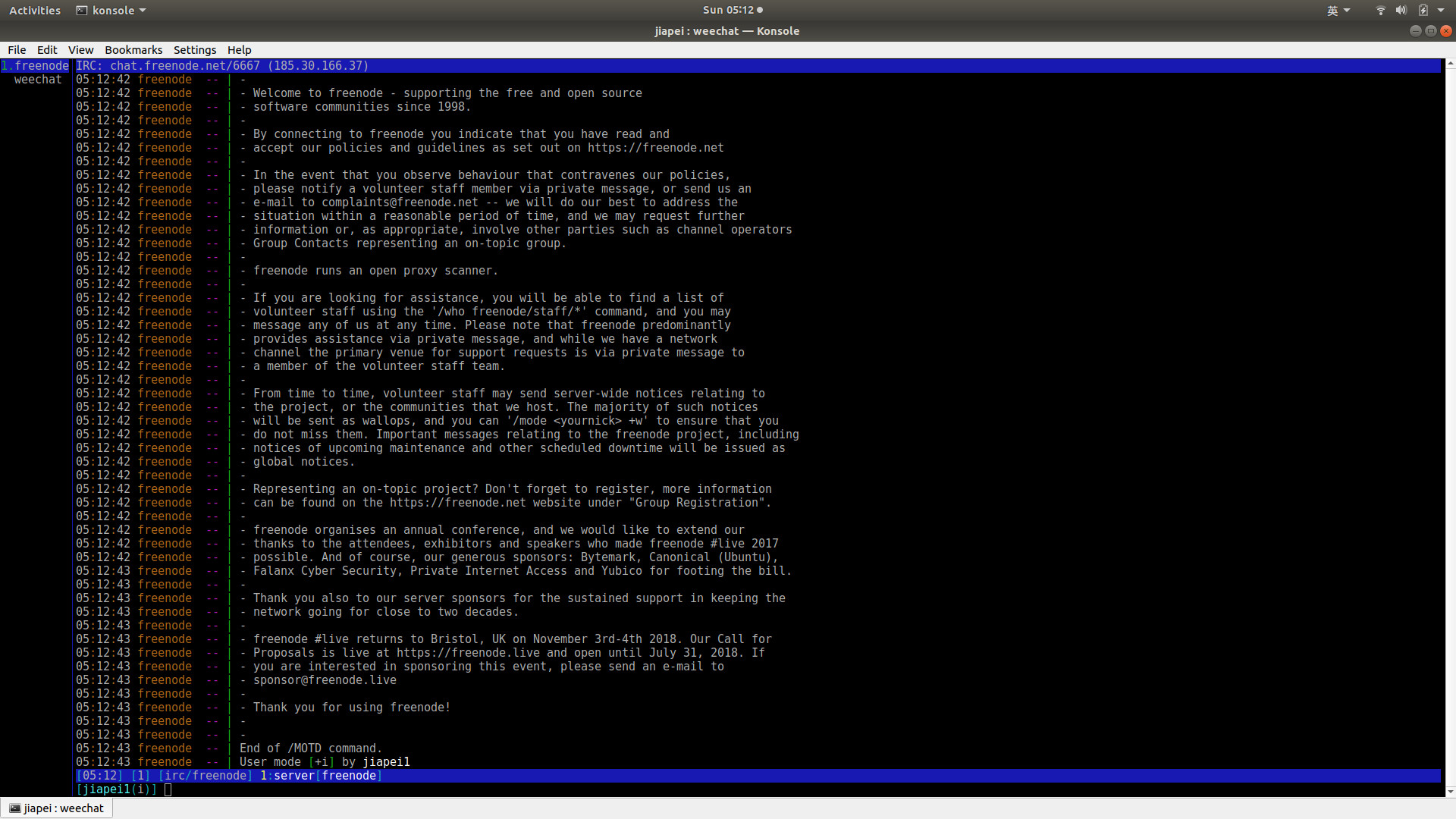

Step 2: Connect to Freenode

```
/connect freenode
```

Step 3: Join a Channel

Here, we select channel OpenCV as an example:

```
/join #opencv
```

Step 4: Close a Channel

```
/close
```

5. Pidgin (Optional)

We’re NOT going to talk about it in this blog.

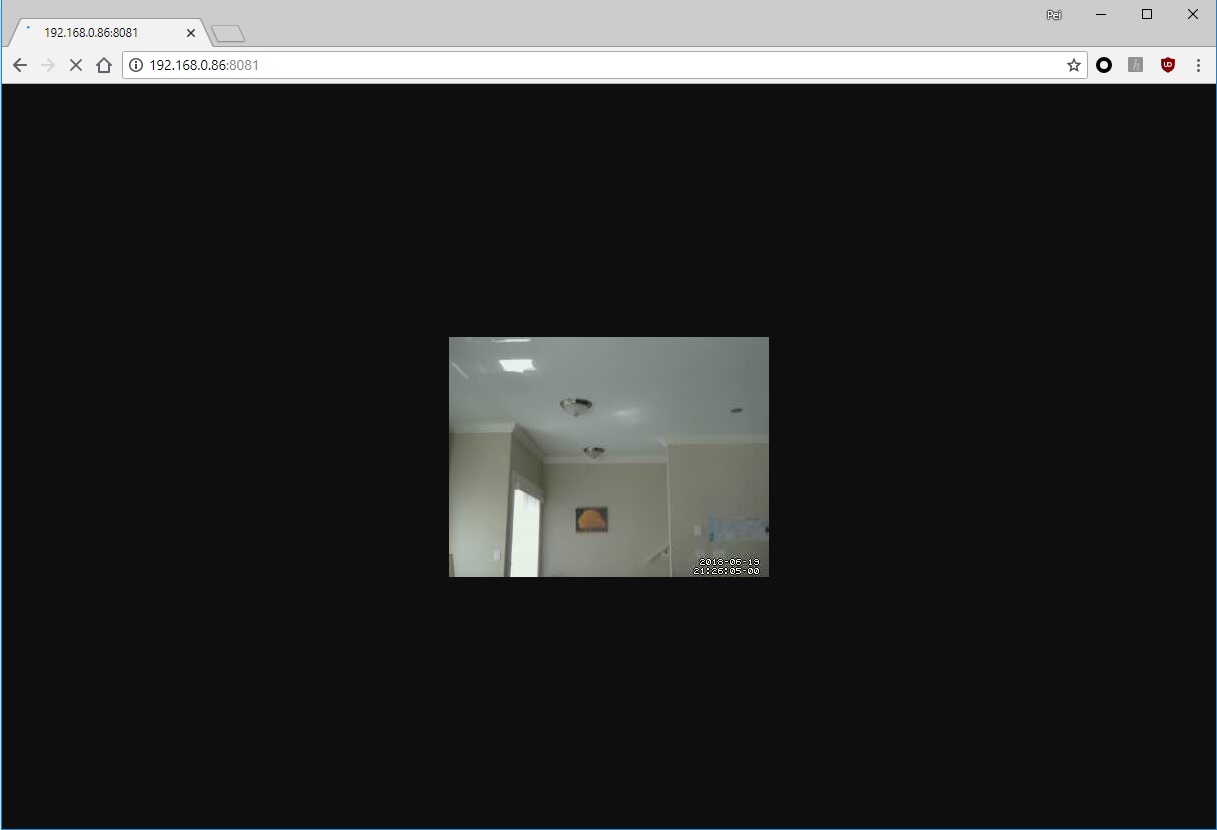

Video Surveillance With Motion

Building a DIY home security camera is comparatively simple with a low-cost embedded board running Linux. There are **ONLY 6 steps** in total.

STEP 1: Install MOTION from Repository

```bash
$ sudo apt install motion
```

STEP 2: start_motion_daemon=yes

```bash
$ sudo vim /etc/default/motion
```

Change start_motion_daemon from no to yes.

STEP 3: stream_localhost off

```bash
$ sudo vim /etc/motion/motion.conf
```

Change stream_localhost on to stream_localhost off.
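STEP 2 and STEP 3 are both one-line edits, so they can also be scripted. The sketch below is minimal and assumes the `key=value` form in /etc/default/motion and the `key value` form in /etc/motion/motion.conf, as described above. `set_option` is a hypothetical helper. It only transforms text, so writing the result back to the real files still needs root.

```python
import re


def set_option(config_text, key, value, separator=" "):
    """Return config_text with `key` set to `value`.

    Matches an uncommented line that starts with `key` followed by a space,
    tab or '=', replaces it with key<separator>value, and appends the line
    if the key is absent. Commented-out lines ("# key ...") are left alone.
    """
    pattern = re.compile(rf"^{re.escape(key)}[ \t]*[= \t].*$", re.MULTILINE)
    new_line = f"{key}{separator}{value}"
    if pattern.search(config_text):
        # A function replacement avoids backslash escapes in `value`.
        return pattern.sub(lambda _match: new_line, config_text)
    return config_text.rstrip("\n") + "\n" + new_line + "\n"


if __name__ == "__main__":
    sample = "# motion.conf excerpt\nstream_port 8081\nstream_localhost on\n"
    print(set_option(sample, "stream_localhost", "off"), end="")
    print(set_option("start_motion_daemon=no\n", "start_motion_daemon", "yes", separator="="), end="")
```

To apply it for real, read the file, pass its text through `set_option`, and write the result back, for example by running the script with sudo.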

STEP 4: Restart Motion Service

Run the following command:

```bash
$ sudo /etc/init.d/motion restart
```

STEP 5: Run Motion

```bash
$ sudo motion
```

STEP 6: Video Surveillance Remotely

Open Chrome and type in the board’s IP address followed by :8081 to view the captured video at 1 FPS. In my case, it’s 192.168.0.86:8081, and the video looks like this:

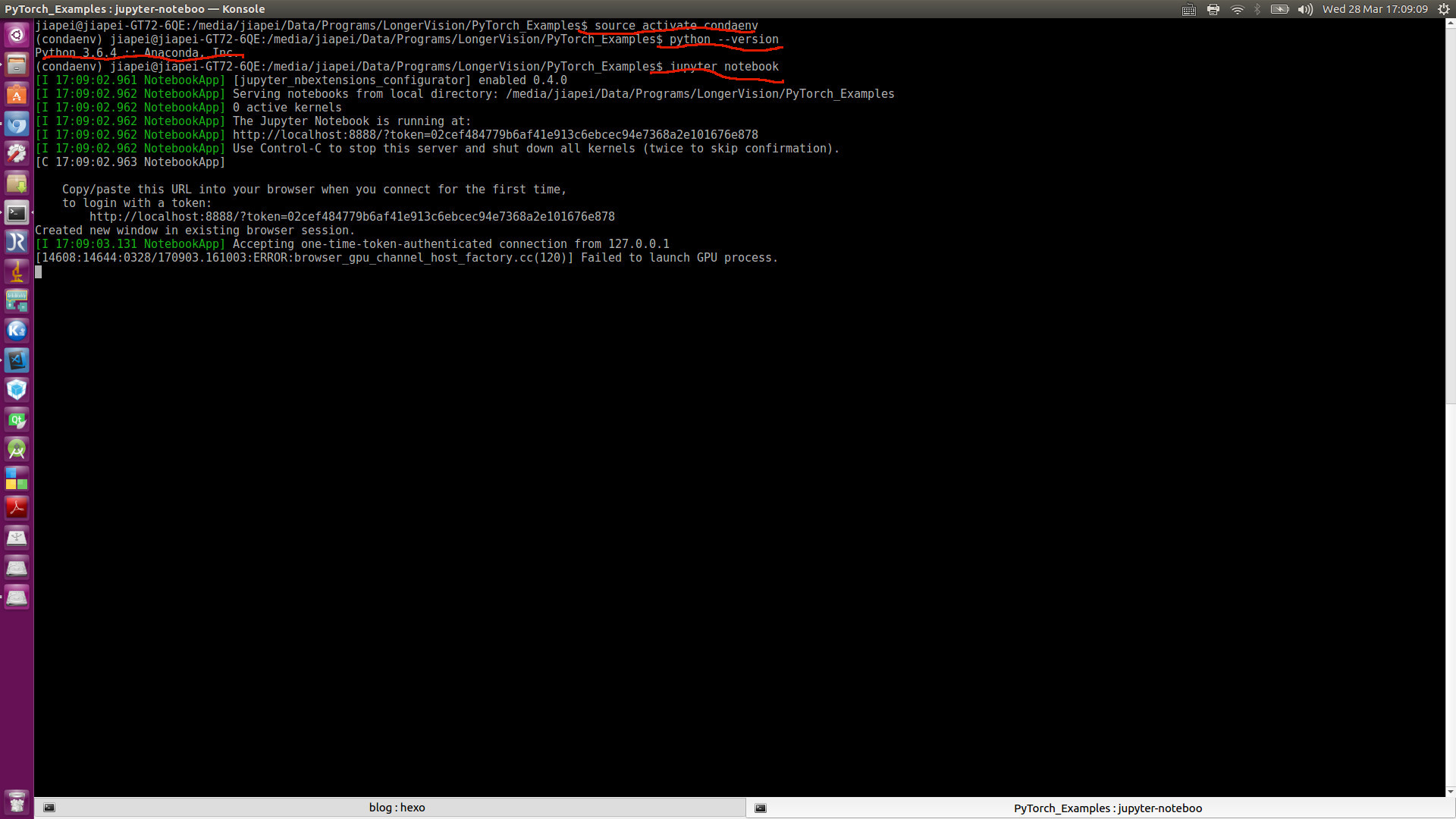

CNN by PyTorch via Anaconda

Finally, I’ve got some time to write something about PyTorch, a popular deep learning tool. We assume you have a fundamental understanding of Anaconda Python, have created an Anaconda virtual environment (in my case, it’s named condaenv), and have installed PyTorch successfully under this virtual environment condaenv.

I’m using Visual Studio Code to test my Python code (of course, you can use whichever coding tool you like), and I assume you’ve already configured your own coding tool. Now, you are ready to go!

Since I’m giving a tutorial here rather than just coding for myself, I’ve chosen Jupyter Notebook as my presentation tool. I’ll demonstrate everything in both .py files and .ipynb files. All code can be found at Longer Vision PyTorch_Examples. However, ONLY the Jupyter Notebook presentation is given in my blogs. Therefore, I assume you’ve already installed Jupyter Notebook, as well as any other required packages, under your Anaconda virtual environment condaenv.

Now, let’s launch the Jupyter Notebook server.

Clearly, Anaconda comes with the NEWEST Python version, which so far is Python 3.6.4.

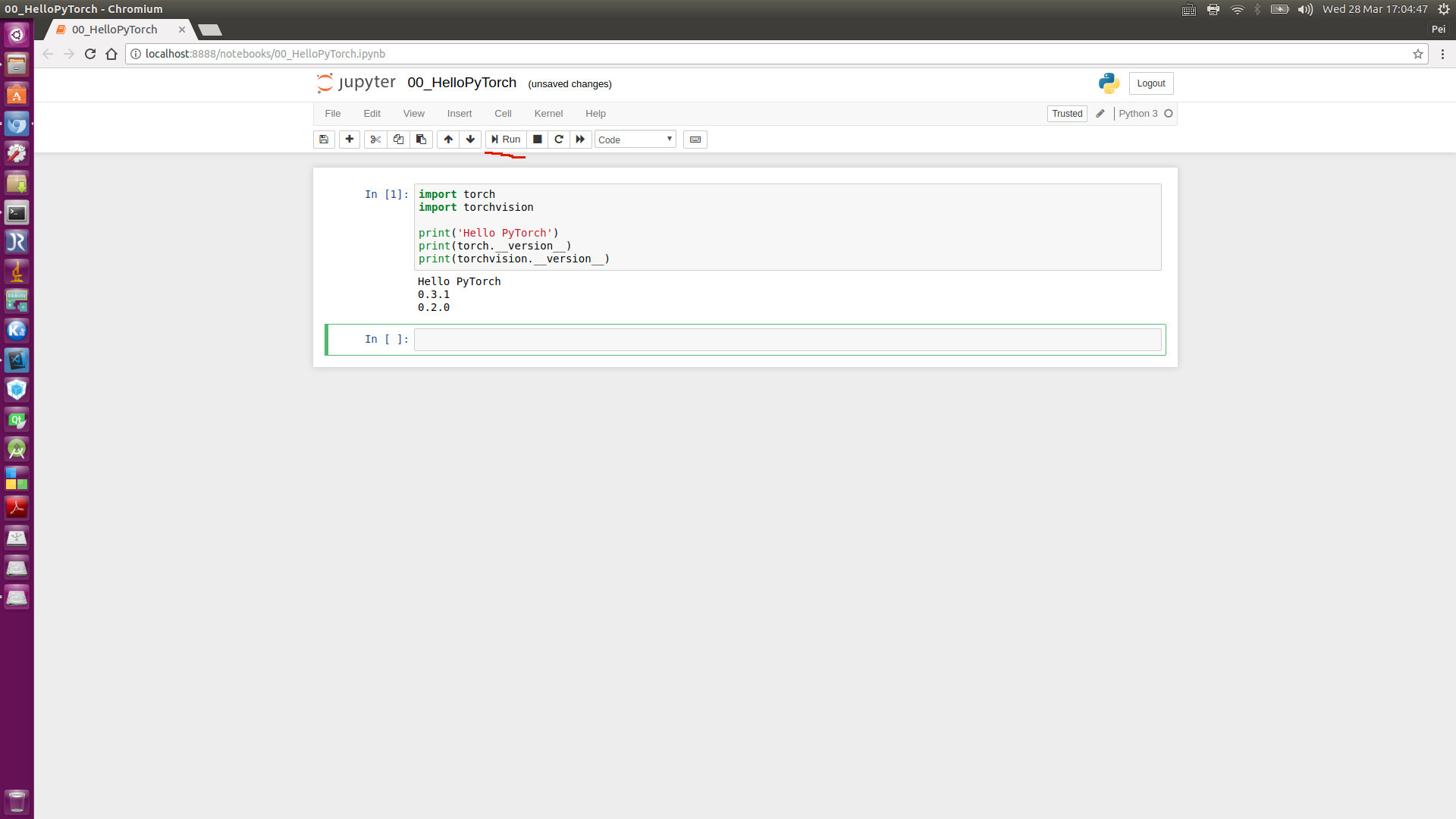

PART A: Hello PyTorch

Our very FIRST test code consists of only 6 lines, including an empty line:

After launching the Jupyter Notebook server and clicking Run, you will see this view:

Clearly, the current torch version is 0.3.1, and the torchvision version is 0.2.0.

PART B: Convolutional Neural Network in PyTorch

1. General Concepts

1) Online Resource

We are NOT going to discuss the background details of Convolutional Neural Networks. A lot of online frameworks/courses are available for you to catch up:

Several fabulous blogs are strongly recommended, including:

2) Architecture

One picture says it all (cited from Convolutional Neural Network):

3) Back Propagation (BP)

The ONLY concept in CNNs we want to emphasize here is Back Propagation. It has been widely used to train traditional neural networks, which are trained in much the same way as the final Fully Connected Layer of a CNN. You are welcome to get more details from https://brilliant.org/wiki/backpropagation/.

Pre-defined Variables

Training Database

- $X=\{(\vec{x_1},\vec{y_1}),(\vec{x_2},\vec{y_2}),\ldots,(\vec{x_N},\vec{y_N})\}$: the training dataset $X$ is composed of $N$ pairs of training samples $(\vec{x_i},\vec{y_i}),1 \le i \le N$

- $(\vec{x_i},\vec{y_i}),1 \le i \le N$: the $i$th training sample pair

- $\vec{x_i}$: the $i$th input vector (can be an original image, can also be a vector of extracted features, etc.)

- $\vec{y_i}$: the $i$th desired output vector (can be a one-hot vector, can also be a scalar, which is a 1-element vector)

- $\hat{\vec{y_i}}$: the $i$th output vector of the neural network, given the $i$th input vector $\vec{x_i}$

- $N$: size of dataset, namely, how many training samples

- $w_{ij}^k$: in the neural network’s architecture, at level $k$, the weight of the connection between the $i$th input and the $j$th output

- $\theta$: a generalized notation for any parameter inside the neural network, which can be regarded as any element of the set of $w_{ij}^k$.

Evaluation Function

- Mean squared error (MSE): $$E(X, \theta) = \frac{1}{2N} \sum_{i=1}^N \|\hat{\vec{y_i}} - \vec{y_i}\|^2$$

- Cross entropy: $$E(X, prob) = - \frac{1}{N} \sum_{i=1}^N \log_2\left(prob(\vec{y_i})\right)$$ where $prob(\vec{y_i})$ is the probability the network assigns to the desired output $\vec{y_i}$.

Particularly, for binary classification, logistic regression is often adopted. A logistic function is defined as:

$$f(x)=\frac{1}{(1+e^{-x})}$$

In such a case, the loss function can easily be derived as:

$$E(X,W) = - \frac{1}{N} \sum_{i=1}^N \left[y_i \log(\hat{y_i}) + (1-y_i)\log(1-\hat{y_i})\right]$$

where

$$y_i \in \{0, 1\}$$

$$\hat{y_i} \equiv f(\vec{w} \cdot \vec{x_i}) = \frac{1}{(1+e^{-\vec{w} \cdot \vec{x_i}})}$$

Some PyTorch explanation can be found at torch.nn.CrossEntropyLoss.
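To make the formulas above concrete, here is a plain-Python sketch of the three losses. It follows this post’s definitions exactly: MSE with the $\frac{1}{2N}$ factor, cross entropy with $\log_2$, and the binary logistic loss with the natural log. PyTorch’s built-in losses use the natural log, so their cross-entropy values differ from the $\log_2$ version by a constant factor of $\ln 2$. The function names are hypothetical.

```python
import math


def mse(y_hat, y):
    """E = 1/(2N) * sum_i ||y_hat_i - y_i||^2, for lists of equal-length vectors."""
    n = len(y)
    total = sum(
        sum((a - b) ** 2 for a, b in zip(yh_i, y_i)) for yh_i, y_i in zip(y_hat, y)
    )
    return total / (2 * n)


def cross_entropy(probs_of_targets):
    """E = -1/N * sum_i log2(prob(y_i)), given the probability of each desired output."""
    n = len(probs_of_targets)
    return -sum(math.log2(p) for p in probs_of_targets) / n


def logistic(x):
    """f(x) = 1 / (1 + e^{-x})."""
    return 1.0 / (1.0 + math.exp(-x))


def binary_logistic_loss(w, xs, ys):
    """E = -1/N * sum_i [y_i ln(y_hat_i) + (1 - y_i) ln(1 - y_hat_i)], y_hat_i = f(w . x_i)."""
    n = len(ys)
    total = 0.0
    for x_i, y_i in zip(xs, ys):
        y_hat_i = logistic(sum(w_j * x_j for w_j, x_j in zip(w, x_i)))
        total += y_i * math.log(y_hat_i) + (1 - y_i) * math.log(1 - y_hat_i)
    return -total / n
```

For example, with all weights at zero every $\hat{y_i}$ is 0.5, so the binary logistic loss equals $\ln 2 \approx 0.693$ regardless of the data.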

BP Deduction Conclusions

Only MSE is considered here (Please refer to https://brilliant.org/wiki/backpropagation/):

$$
\frac{\partial E(X,\theta)}{\partial w_{ij}^k} = \frac{1}{N} \sum_{d=1}^N \frac{\partial}{\partial w_{ij}^k} \Big( \frac{1}{2} \|\hat{\vec{y_d}} - \vec{y_d}\|^2 \Big) = \frac{1}{N} \sum_{d=1}^N \frac{\partial E_d}{\partial w_{ij}^k}
$$

where $E_d = \frac{1}{2} \|\hat{\vec{y_d}} - \vec{y_d}\|^2$ is the error on the $d$th training sample.

The weight update is then determined by gradient descent with learning rate $\alpha$:

$$

\Delta w_{ij}^k = - \alpha \frac {\partial {E(X,\theta)} } {\partial w_{ij}^k}

$$
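Here is a tiny worked instance of the two formulas above, under simplifying assumptions: a single linear output $\hat{y_d} = \vec{w} \cdot \vec{x_d}$ with no hidden layer and no activation. For MSE this gives $\frac{\partial E_d}{\partial w_j} = (\hat{y_d} - y_d)\,x_{dj}$, the gradient is averaged over all $N$ samples, and each step applies $\Delta w_j = -\alpha \frac{\partial E}{\partial w_j}$. This is plain batch gradient descent rather than full back propagation through hidden layers. `gradient_descent` is a hypothetical name.

```python
def gradient_descent(xs, ys, alpha=0.1, epochs=200):
    """Fit y ~ w . x by batch gradient descent on E = 1/(2N) * sum_d (w . x_d - y_d)^2."""
    n = len(xs)
    w = [0.0] * len(xs[0])
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for x_d, y_d in zip(xs, ys):
            y_hat_d = sum(w_j * x_j for w_j, x_j in zip(w, x_d))
            error = y_hat_d - y_d
            # dE/dw_j = 1/N * sum_d dE_d/dw_j, with dE_d/dw_j = (y_hat_d - y_d) * x_dj
            for j, x_j in enumerate(x_d):
                grad[j] += error * x_j / n
        # Weight update: Delta w_j = -alpha * dE/dw_j
        w = [w_j - alpha * g_j for w_j, g_j in zip(w, grad)]
    return w


if __name__ == "__main__":
    # Data generated by y = 2x, so w should approach [2.0].
    print(gradient_descent([[1.0], [2.0], [3.0]], [2.0, 4.0, 6.0]))
```

The learning rate matters. For this data, $\alpha = 0.1$ shrinks the error by roughly half per step, while a much larger $\alpha$ (e.g. 0.5) overshoots and diverges.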

2. PyTorch Implementation of Canonical CNN

Setup Repeater Bridge Using A dd-wrt Router

Hi, today I’m going to revisit a very old topic: Setup Repeater Bridge Using A dd-wrt Router. This reminds me of my FIRST startup, Misc Vision, which used to resell Foscam home security IP cameras. That is a long story. Anyway, this blog post heavily cites the contents of this page.

PART A: Flash A Supported Router with DD-WRT Firmware

Before we start to tackle the problem, we strongly recommend flashing your router with the open source firmware DD-WRT.

1. DD-WRT and OpenWRT

Two widely used open source router firmwares have been available online for quite some time: DD-WRT and OpenWRT. In fact, either of them is fine for flashing a router.

2. DD-WRT Supported Routers

To check if your router is supported by DD-WRT, you need to check this page.

3. DD-WRT SL-R7202

In my case, I have a lot of such routers on hand and am selling them on Craigslist (if anybody is interested, please let me know). You can also find such products, either still produced or discontinued, on the market, e.g., on Gearbest.

DD-WRT SL-R7202 looks good:

In a word, find a DD-WRT supported router and flash it successfully FIRST. Being a lazy man, I’m NOT going to flash anything. Instead, I’ll just use a router that comes with DD-WRT firmware pre-installed, namely, DD-WRT SL-R7202.

PART B: The Problem

The problem we are going to deal with is how to connect multiple routers so that the Internet coverage can be expanded. For routers with dd-wrt firmware, there are multiple ways to connect routers; please refer to https://www.dd-wrt.com/wiki/index.php/Linking_Routers.

1. Linking Routers

In the following, most contents are CITED directly from Linking Routers and its extended webpages.

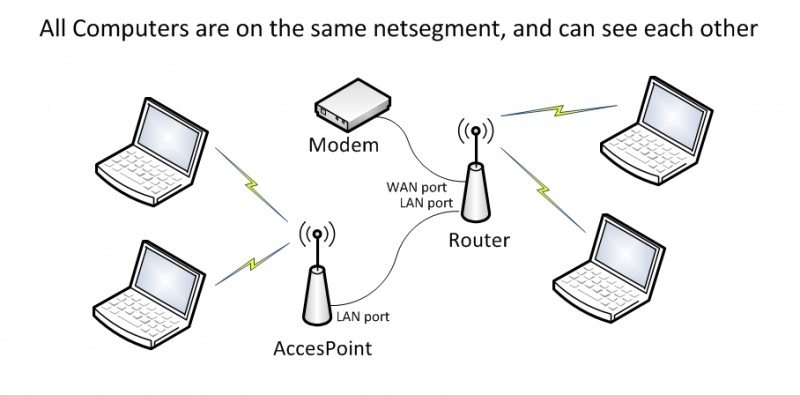

Access Point / Switch - Extend the Wireless access area using more routers, with WIRED connections between routers, or turn a WIRED port on an existing network into a Wireless Access Point. All computers will be on the same network segment, and will be able to see one another in Windows Network. This works with all devices with LAN ports, and does not require dd-wrt to be installed.

- Wireless Access Point - Extend Wi-Fi & LAN (Requires a physical, i.e. WIRED, Ethernet connection between routers)

- Switch - Similar config as WAP, but radio disabled (accepts only WIRED connections)

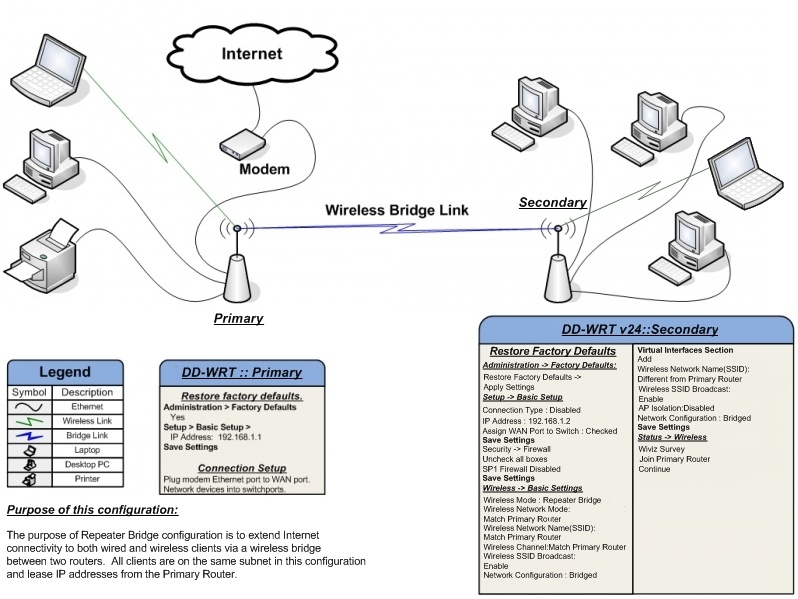

Repeater / Repeater Bridge - Extend the Wireless access area using a second router WIRELESSLY connected to the primary. The secondary router must have dd-wrt installed; the primary does not need dd-wrt.

- Repeater Bridge - A wireless repeater with DHCP & NAT disabled, clients on same subnet as host AP (primary router). That is, all computers can see one another in Windows Network.

- Repeater - A wireless repeater with DHCP & NAT enabled, clients on different subnet from host AP (primary router). Computers connected to one router can not see computers connected to other routers in Windows Network.

- Universal Wireless Repeater - Uses a program/script called AutoAP to keep a connection to the nearest/best host AP.

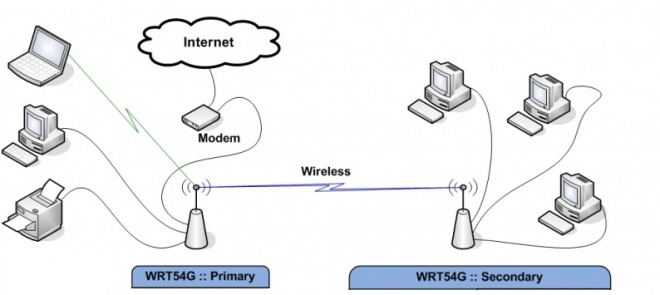

Client / Client Bridge - Connect two wired networks using a WiFi link (WIRELESS connection between two routers). The secondary router must have dd-wrt installed; the primary router does not need to have dd-wrt.

- Client Bridged - Join two wired networks by two Wireless routers building a bridge. All computers can see one another in Windows Network.

- Client Mode - Join two wired networks by two Wireless routers (unbridged). Computers on one wired network can not see computers on other wired network in Windows Network.

WDS - Extend the Wireless access area using more routers connected WIRELESSLY. WDS is a mesh network. Routers must almost always have the SAME chipset type for WDS to work, and any non dd-wrt routers must be WDS compatible. Using identical routers is best, but not always necessary if all devices have the same chipset types. (All Broadcom or all Atheros etc)

OLSR - Extend the Wireless access area using more routers. Extra routers do not need any wired connections to each other. Use several ISP (Internet) connections. OLSR is a mesh network.

2. Down-to-earth Case

In my case, my laptop and some of my IoT devices can easily connect to the ShawCable Modem/Router combo, which is in my living room on the 2nd floor and directly connected to the Internet. However, my R&D office is in my garage on the 1st floor, where the dd-wrt router is placed. Therefore, my dd-wrt router must connect to my ShawCable Modem/Router WIRELESSLY.

The reason is explained in the section Difference between Client Bridge and Repeater Bridge: A standard wireless bridge (Client Bridge) connects wired clients to a secondary router as if they were connected to your main router with a cable. Secondary clients share the bandwidth of a wireless connection back to your main router. Of course, you can still connect clients to your main router using either a cable or a wireless connection.

The limitation with standard bridging is that it only allows WIRED clients to connect to your secondary router. WIRELESS clients cannot connect to your secondary router configured as a standard bridge. Repeater Bridge allows both WIRELESS AND WIRED clients to connect to the Repeater Bridge router, and through that device WIRELESSLY to a primary router. You can still use this mode if you only need to bridge wired clients; the extra wireless repeater capability comes along for free, but you are not required to use it.

Therefore, we select Repeater Bridge as our solution, with DD-WRT SL-R7202 acting as the Repeater Bridge. The difficulty is: how do we set up DD-WRT SL-R7202 to work as a wireless Repeater Bridge?

PART C: About Firmware (Optional)

1. Do We Need Firmware Upgrading?

Actually, before coming to this step, I had already:

- strictly followed the configuration process written on https://www.dd-wrt.com/wiki/index.php/Repeater_Bridge, but FAILED many times.

- and afterwards, I found a video solution at https://www.youtube.com/watch?v=ByB8vVGBjh4, which seems to be suitable for my case.

However, it finally turned out that my DD-WRT SL-R7202 router came with firmware version DD-WRT (build 13064), while the tutorial on Youtube uses a router with firmware version DD-WRT (build 21061).

It seemed to me that a firmware upgrade was a must before setting up the Wireless Repeater Bridge Mode. But how?

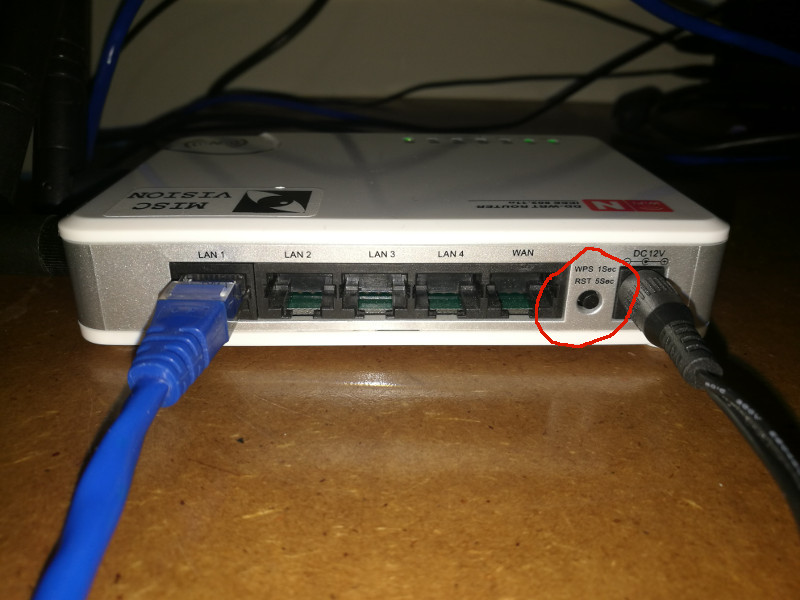

2. Reset to Factory Defaults on DD-WRT Router (The 2nd Router)

First of all, we need to reset DD-WRT. There is a black button on my DD-WRT SL-R7202, as shown:

In fact, pay attention to how you reset DD-WRT:

> Hold the reset button until lights flash (10-30sec) or 30-30-30 if appropriate for your router. (DO NOT 30-30-30 ARM routers.)

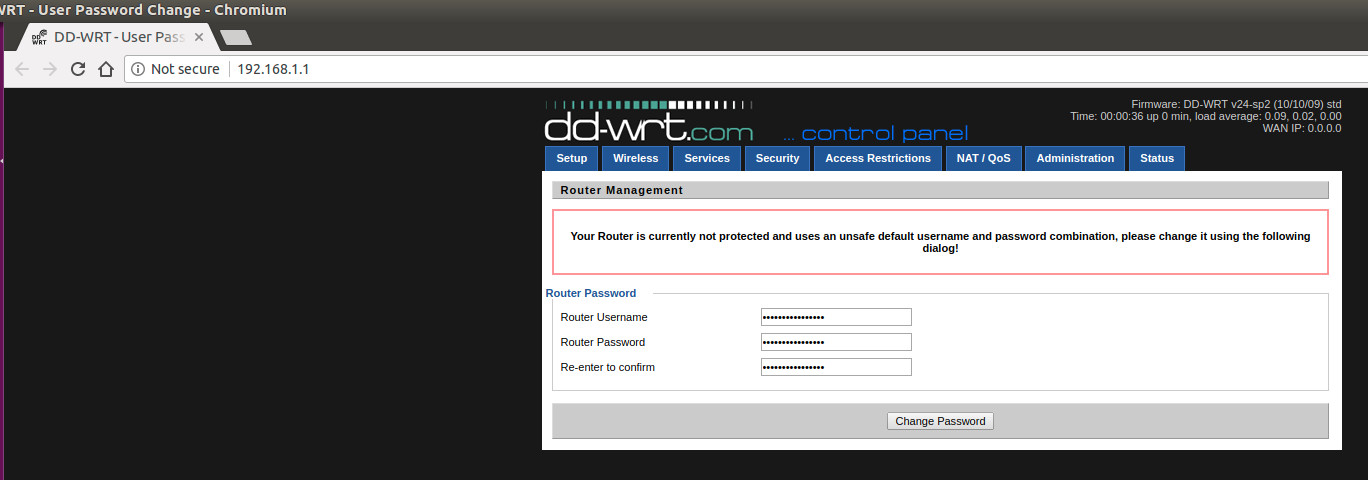

3. Visit http://192.168.1.1:

After successfully resetting the DD-WRT router to factory defaults, connect it to your host computer via a WIRED cable for better stability. Then, switch the network connection from the FIRST router to the SECOND router, the dd-wrt router. Afterwards, let’s visit http://192.168.1.1.

- NOTE: My host computer’s IP address on the FIRST router, namely, the ShawCable Modem/Router, is statically set to 192.168.0.8 (which is able to connect to the Internet). After I switch to the dd-wrt Wi-Fi, my host’s IP is allocated by DHCP as 192.168.1.103. The gateway on ShawCable is 192.168.0.1, which differs from the dd-wrt default gateway 192.168.1.1.

1) FIRST Page

DD-WRT home page looks like:

We are then asked to set a new router username and password; here, we input username root and password admin. After clicking the button Change Password, we enter the very first page of the DD-WRT Web GUI.

- We can easily tell the firmware’s info on the top right corner.

```
Firmware: DD-WRT v24-sp2 (10/10/09) std
```

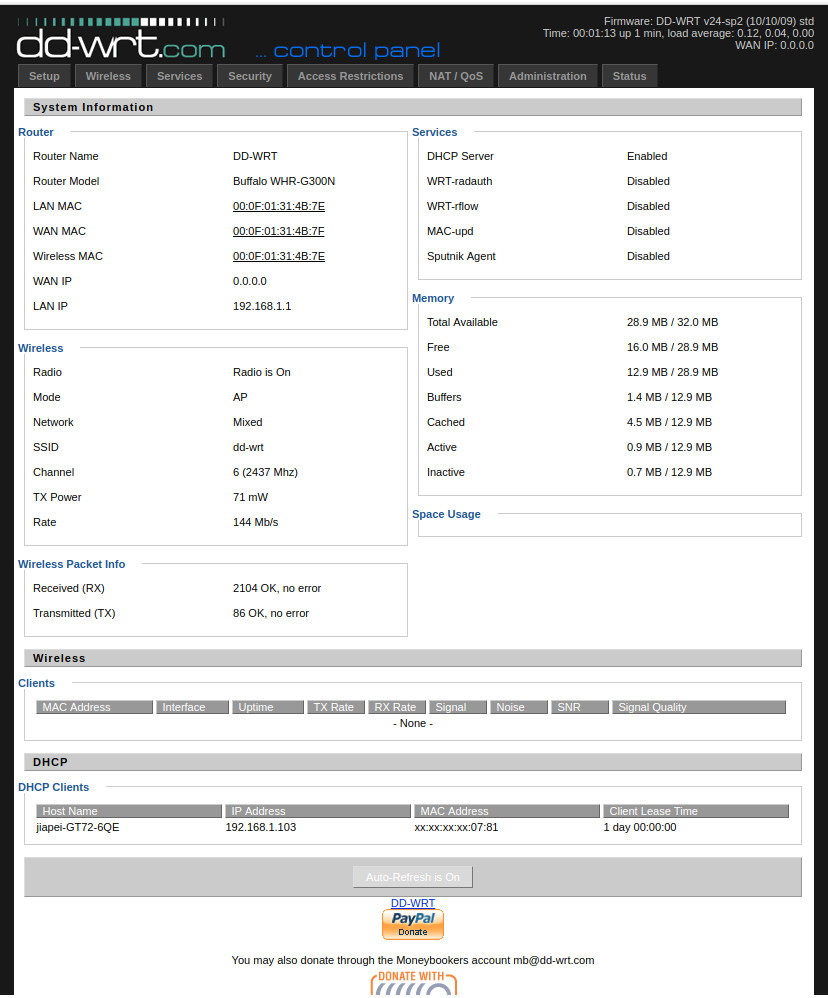

- And, we can also easily tell from the bottom of this page that for now, only 1 DHCP client is connected to this router, which is just our host computer: jiapei-GT72-6QE.

2) System Info on Status Page

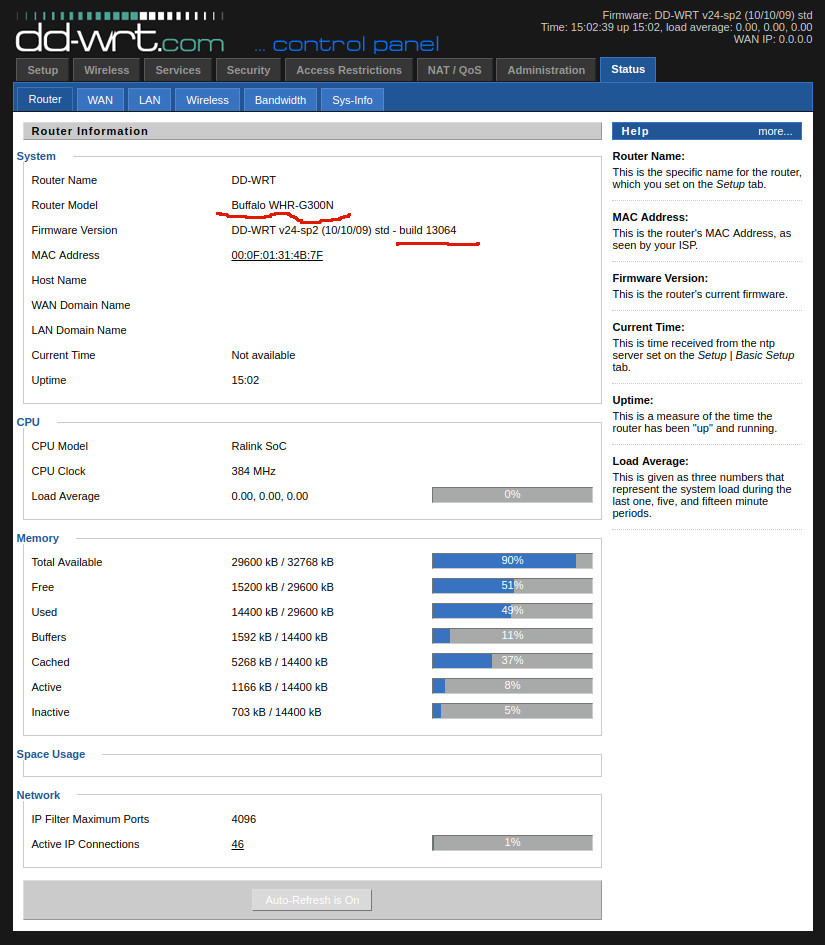

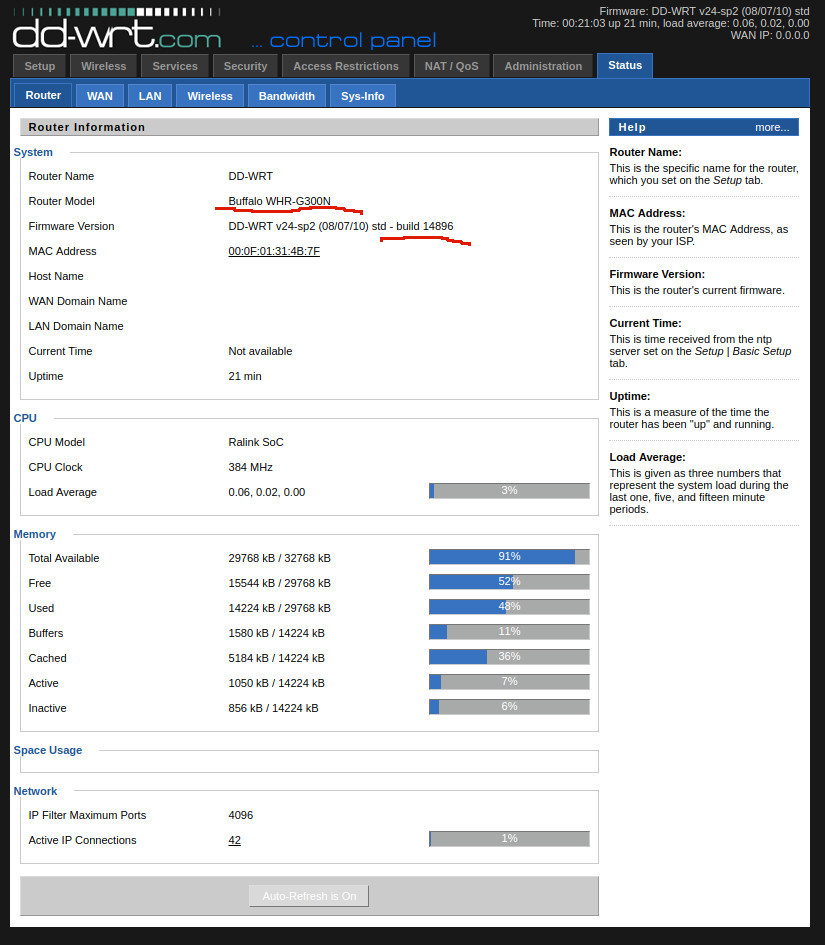

DD-WRT Status page tells a lot about what’s in this router:

Clearly, our router model is Buffalo WHR-G300N, and our firmware build version is 13064.

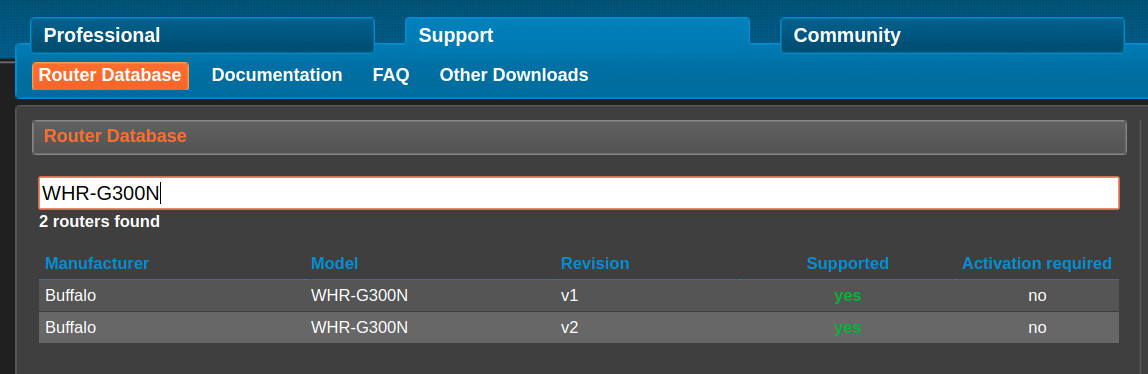

3) Upgradable?

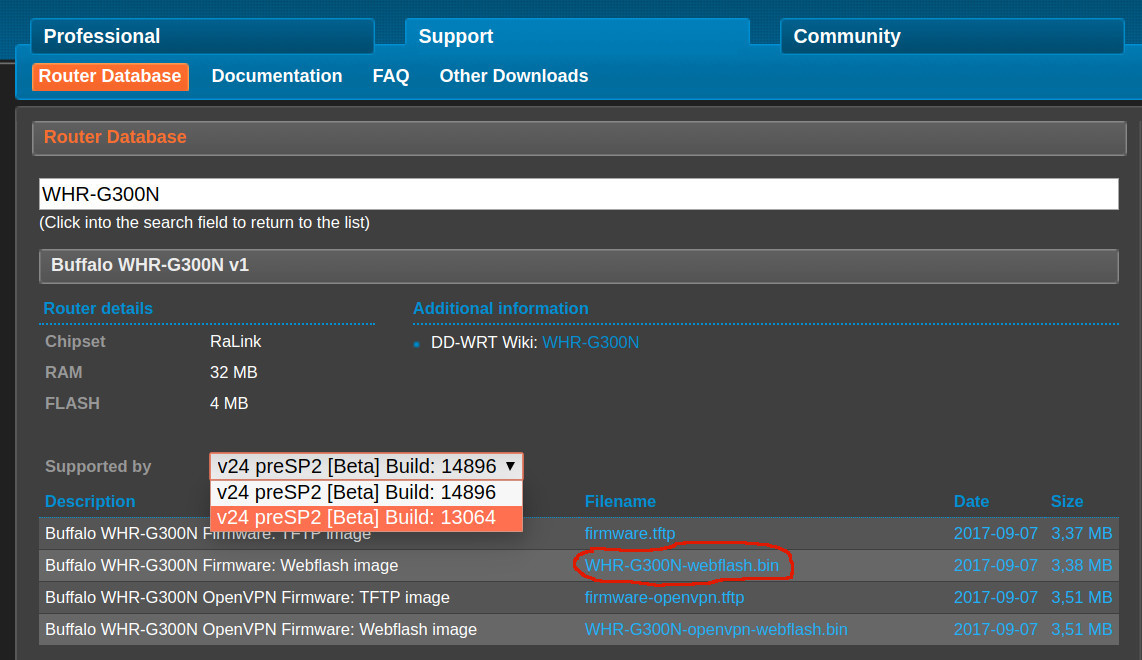

We then visit https://www.dd-wrt.com/site/support/router-database and search for WHR-G300N; two routers will be listed:

By clicking the FIRST one, namely v1, we get:

Clearly, we should be able to upgrade from our current firmware version 13064 to the newer one 14896.

With the existing firmware version 13064 installed on the router SL-R7202, we can upgrade the firmware directly through web flash by downloading the firmware WHR-G300N-webflash.bin, skipping all the TFTP and openvpn files.

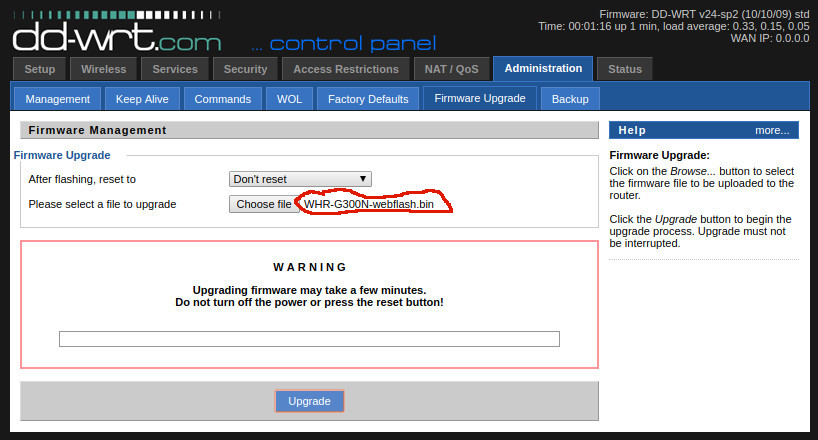

4) Firmware Upgrading

Just click Administration -> Firmware Upgrade, and then choose WHR-G300N-webflash.bin.

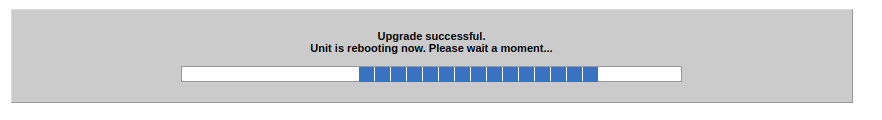

After choosing the file, the system is upgraded automatically within several minutes.

NOTE: Do NOT touch anything during the upgrading process.

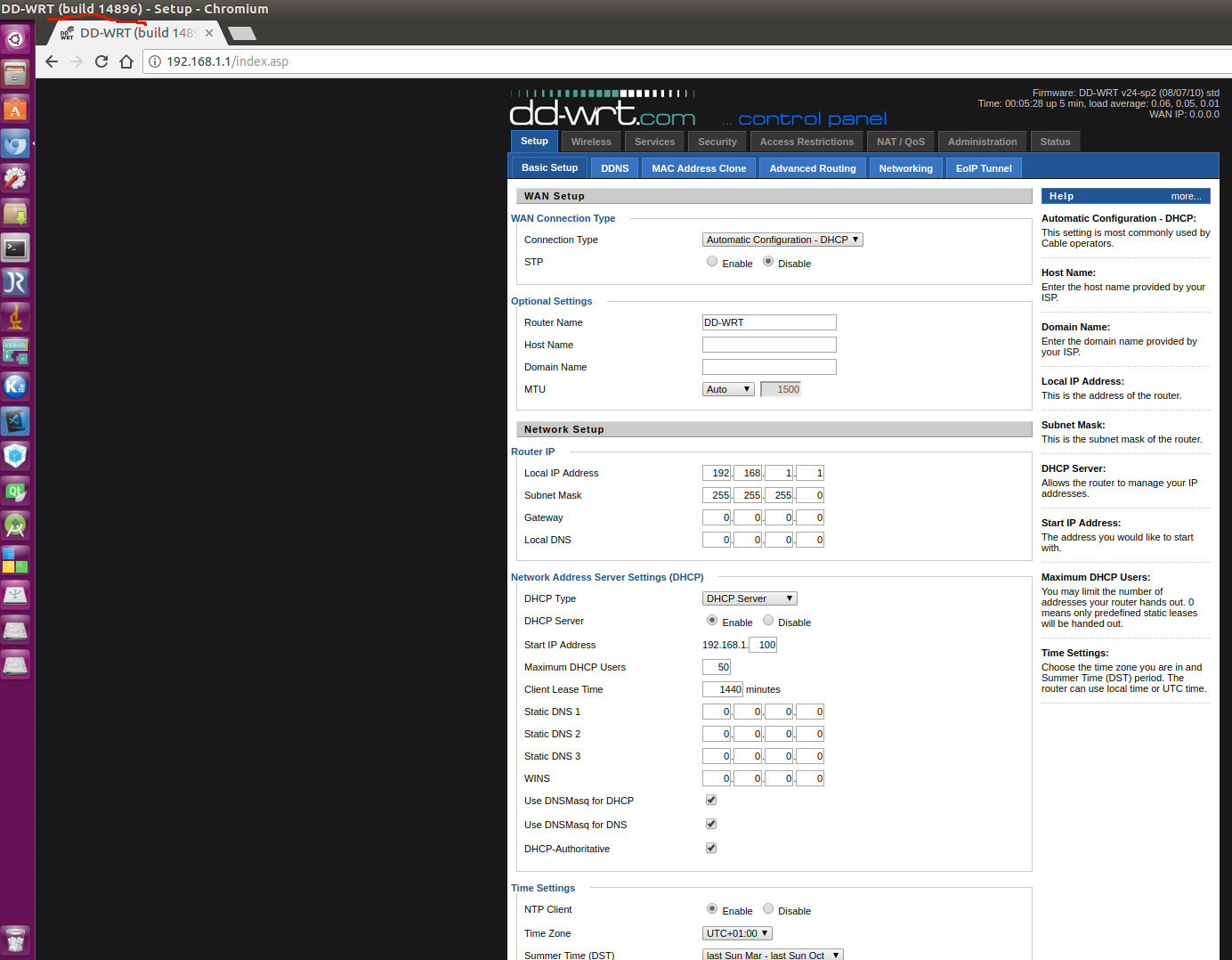

After the firmware upgrade, we can easily tell some differences. On the Setup Page, the top-left corner shows that the build version is now 14896, and the top-right corner shows the current firmware info:

```
Firmware: DD-WRT v24-sp2 (08/07/10) std
```

From the Status Page, we can double-check that the firmware version is now 14896.

5) Firmware Upgrading is Problematic !

However, upgrading the firmware from version 13064 to the newer 14896 turns out to be problematic; please refer to https://jgiam.com/2012/07/06/setting-up-a-repeater-bridge-with-dd-wrt-and-d-link-dir-600. Based on a careful reading of this article and my own attempts later on, here is the conclusion first:

- NOTE:

- KEEP using firmware version 13064, instead of 14896

- NEVER add the Virtual Interfaces

PART D: Wireless Repeater Bridge (Same Subnet)

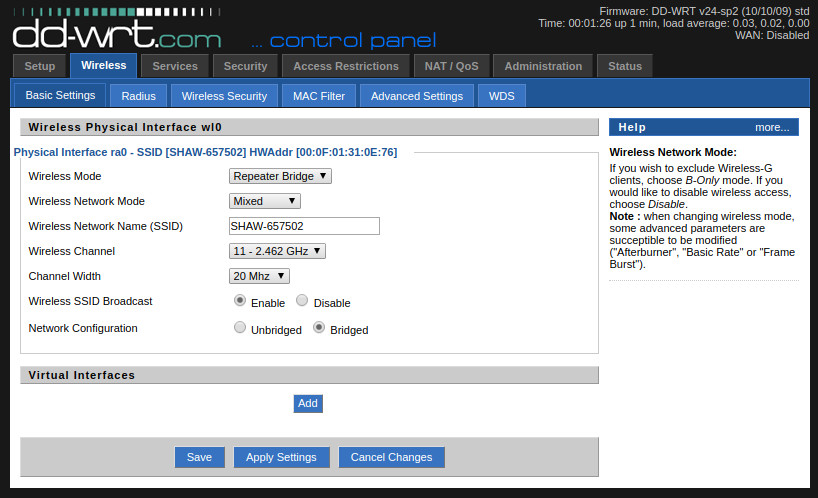

1. Wireless -> Basic Settings

We modify the parameters accordingly (Cited from the blog Setting up a repeater bridge with DD-WRT and D-Link DIR-600 ):

- Physical Interface Section

- Wireless Mode: Repeater Bridge

- Wireless Network Mode: Must Be Exactly The Same As Primary Router

- Wireless Network Name (SSID): Must Be Exactly The Same As Primary Router. Be Careful: this DD-WRT router (Buffalo WHR-G300N) supports ONLY 2.4G. If your primary router has two bands, namely, 2.4G and 5G, make sure you connect to the 2.4G network.

- Wireless Channel: Must Be Exactly The Same As Primary Router

- Channel Width: Must Be Exactly The Same As Primary Router

- Wireless SSID Broadcast: Enabled

- Network Configuration: Bridged

Then, click Save without Apply, and we get:

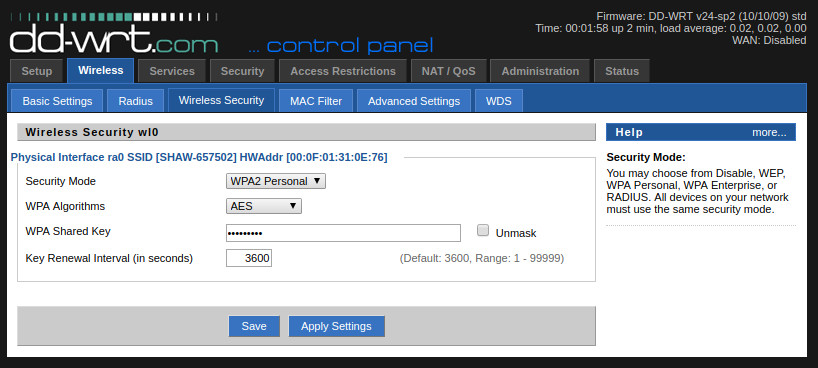

2. Wireless -> Wireless Security

- Physical Interface section

- Security Mode: Must Be WPA2 Personal, and The Primary Router Must Be Exactly The Same.

- WPA Algorithms: Must Be AES, and The Primary Router Must Be Exactly The Same.

- WPA Shared Key: Must Be Exactly The Same As Primary Router

- Key Renewal Interval (in seconds): Leave as it is, normally, 3600.

Then, click Save without Apply, and we get:

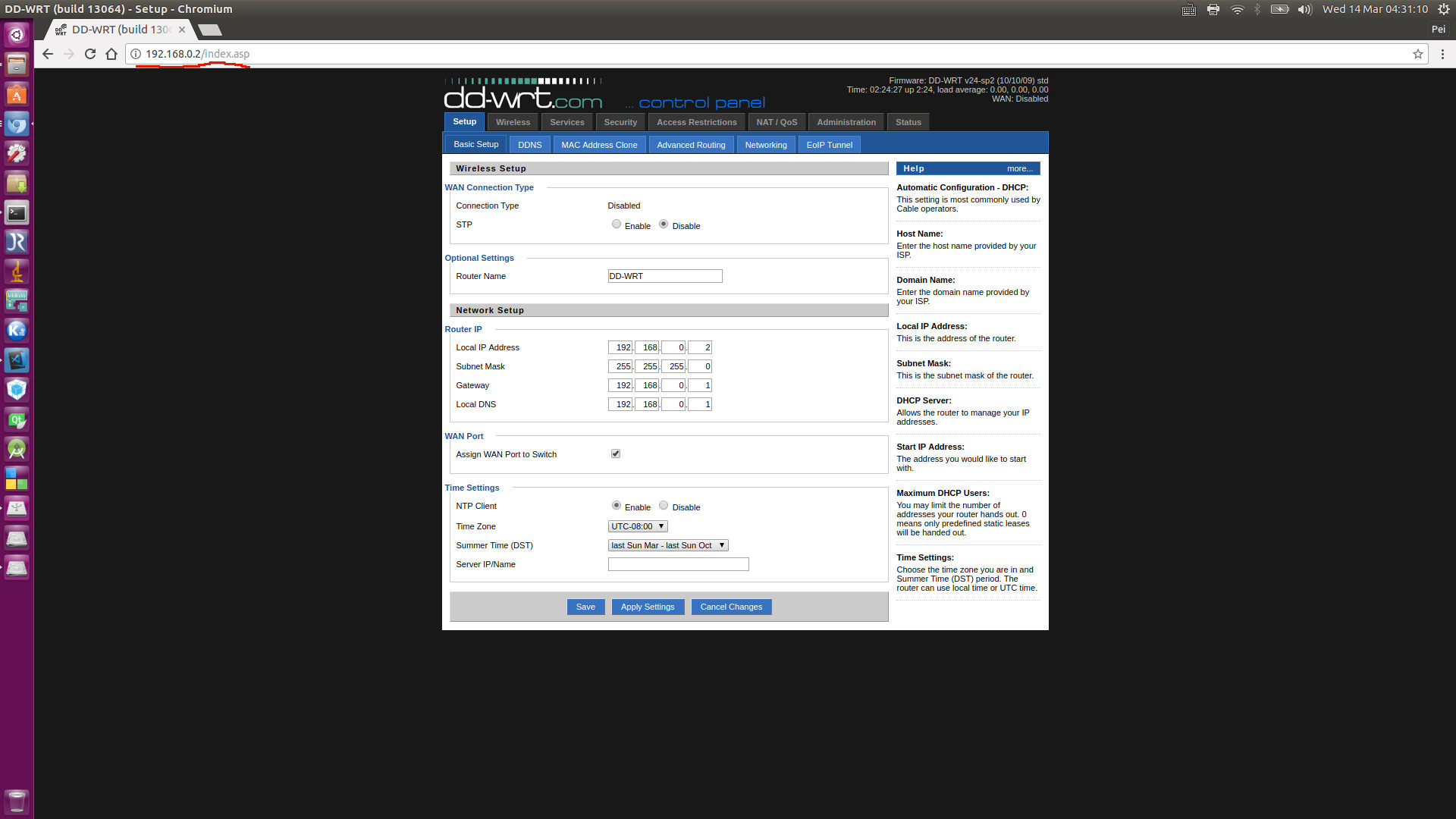

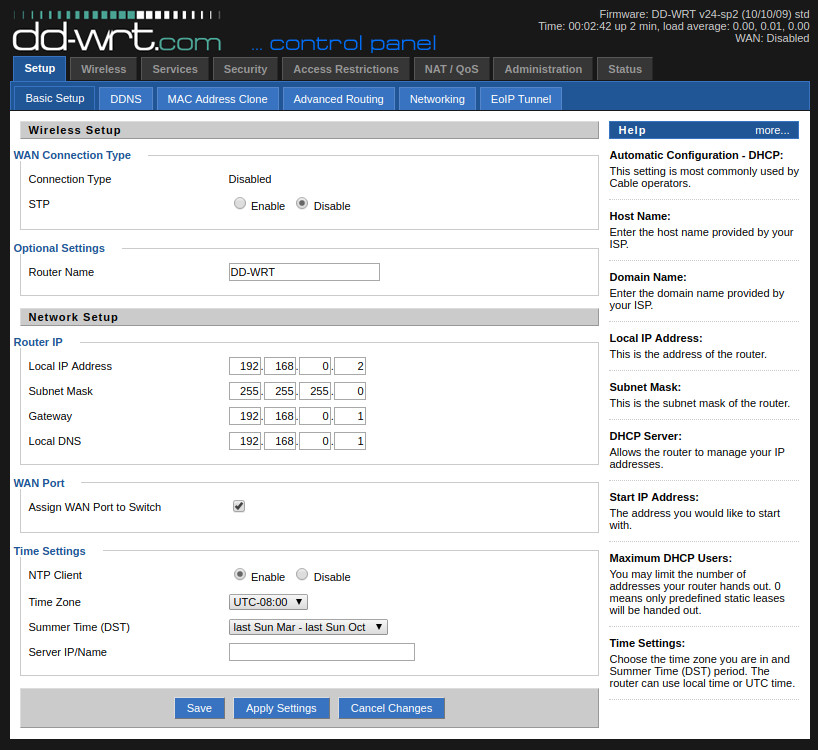

3. Setup -> Basic Setup

- WAN Connection Type Section

- Connection Type: Disabled

- STP: Disabled

- Optional Settings

- Router Name: DD-WRT, just let it be

- Router IP

- IP Address: 192.168.0.2 (in my case, my Primary Router IP is 192.168.0.1, and 192.168.0.2 has NOT been used yet.)

- Subnet Mask: 255.255.255.0

- Gateway: 192.168.0.1 (Must Be Primary Router IP)

- Local DNS: 192.168.0.1 (Must Be Primary Router IP)

- WAN Port

- Assign WAN Port to Switch: tick

- Time Settings

- Time Zone: set correspondingly

Then, click Save without Apply, and we get:

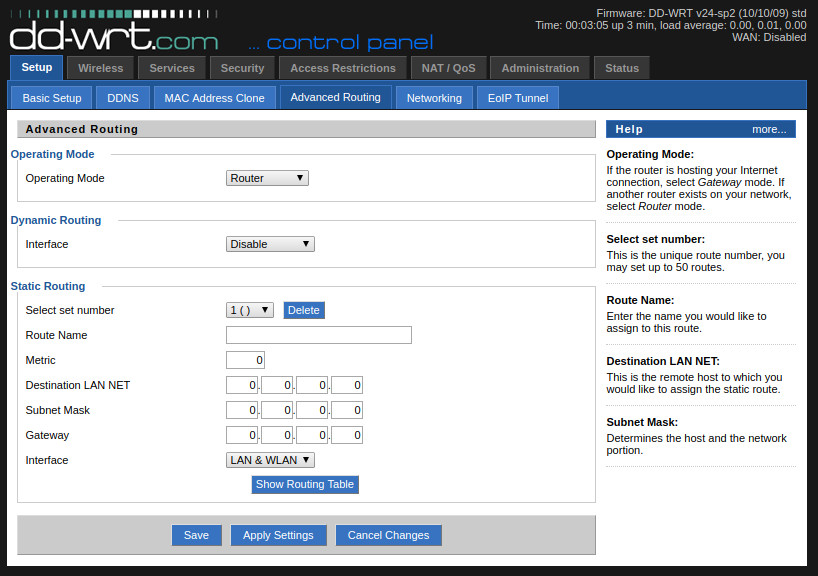
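A Repeater Bridge puts both routers on the same subnet. The Router IP above must therefore lie inside the primary router’s subnet without clashing with the primary router itself. Here is a small sketch using Python’s standard `ipaddress` module, with `check_bridge_ip` as a hypothetical helper name. It cannot tell whether the address is already in use or falls inside the primary router’s DHCP pool. That still has to be checked on the primary router; pinging the address before assigning it gives a quick hint.

```python
import ipaddress


def check_bridge_ip(bridge_ip, gateway_ip, netmask="255.255.255.0"):
    """Return a list of problems with a Repeater Bridge 'Router IP' (empty if none found)."""
    network = ipaddress.ip_network(f"{gateway_ip}/{netmask}", strict=False)
    bridge = ipaddress.ip_address(bridge_ip)
    problems = []
    if bridge not in network:
        problems.append(f"{bridge} is outside the primary subnet {network}")
    if bridge == ipaddress.ip_address(gateway_ip):
        problems.append(f"{bridge} is the primary router's own address")
    if bridge in (network.network_address, network.broadcast_address):
        problems.append(f"{bridge} is the network or broadcast address of {network}")
    return problems


if __name__ == "__main__":
    # Values from this post: Router IP 192.168.0.2, gateway 192.168.0.1.
    print(check_bridge_ip("192.168.0.2", "192.168.0.1") or "OK")
```

For example, keeping the dd-wrt factory default 192.168.1.1 would be reported as outside the primary subnet 192.168.0.0/24.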

4. Setup -> Advanced Routing

- Operating Mode Section

- Operating Mode: Router

Then, click Save without Apply, and we get:

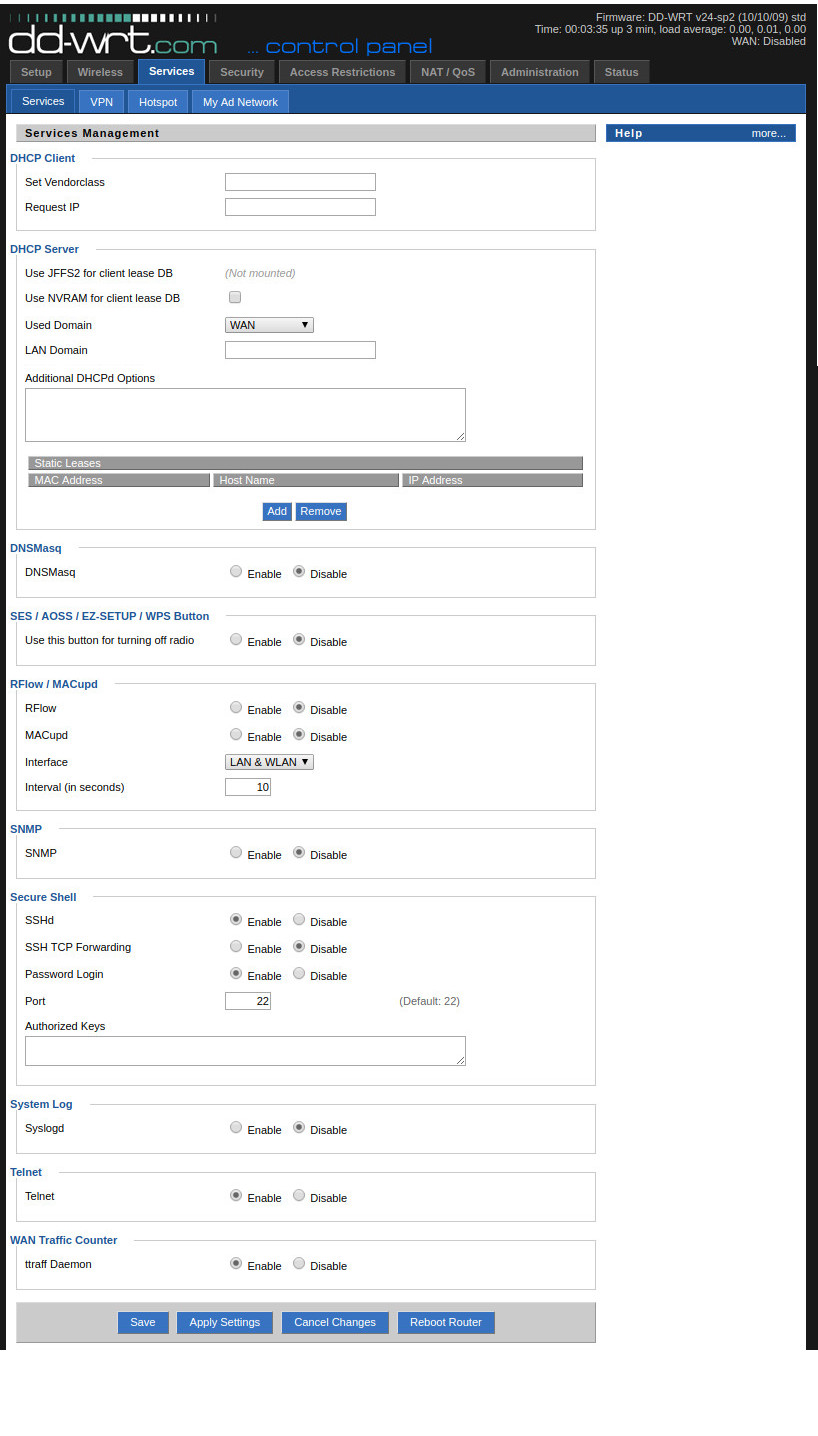

5. Services -> Services

- DNSMasq Section

- DNSMasq: Disable

- Secure Shell Section

- SSHd: Enable

- Telnet Section

- Telnet: Enable

Then, click Save without Apply, and we get:

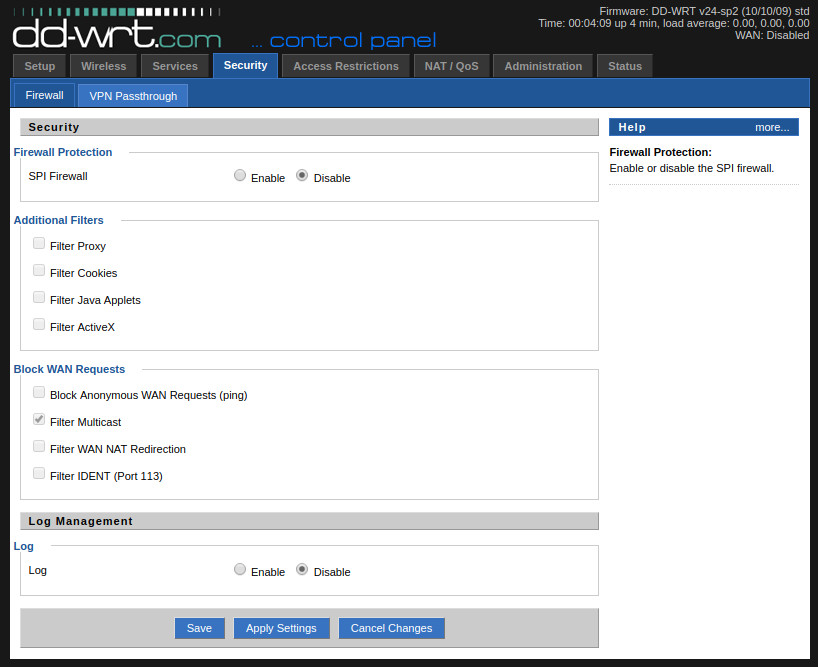

6. Security -> Firewall

Note: Proceed in sequence.

- Block WAN Requests

- Block Anonymous WAN Requests (Ping): untick

- Filter Multicast: tick

- Filter WAN NAT Redirection: untick

- Filter IDENT (Port 113): untick

- Firewall Protection

- SPI Firewall: Disable

Then, click Save without Apply, and we get:

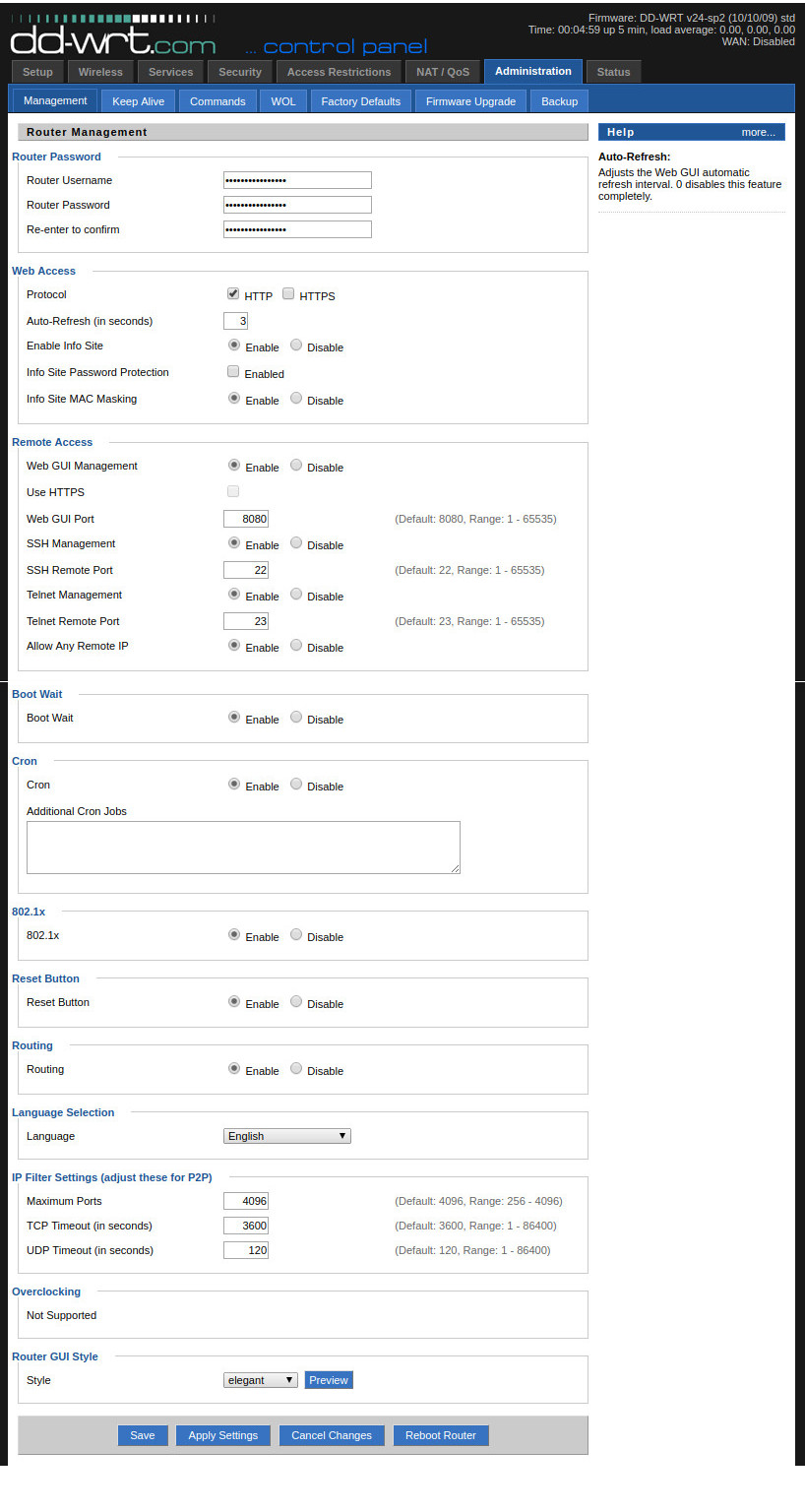

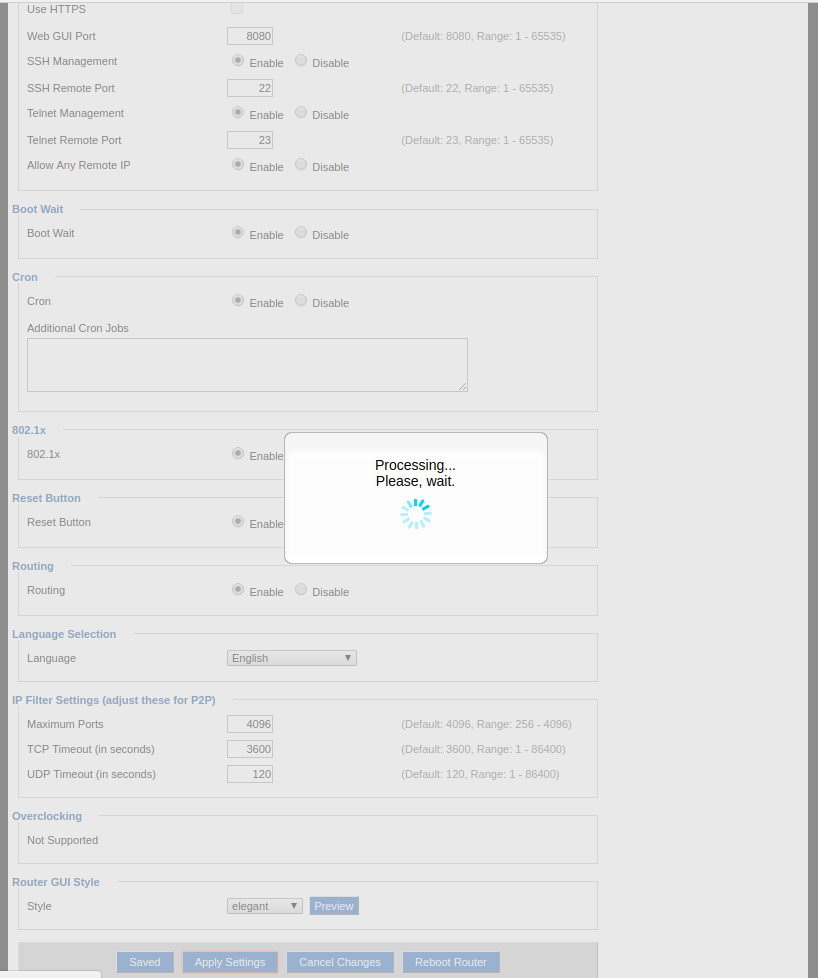

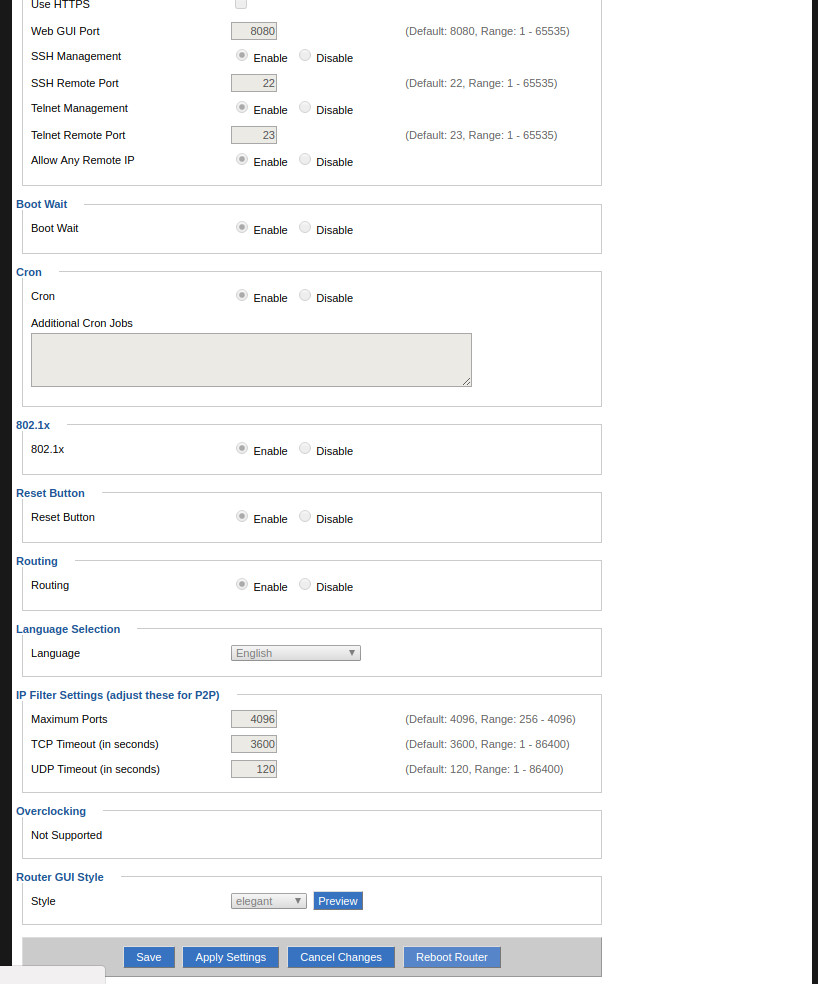

7. Administration Management

- Remote Access Section

- Web GUI Management: Enable

- SSH Management: Enable

- Telnet Remote Port: Enable

- Allow Any Remote IP: Enable

Then, click Save without Apply, and we get:

Now, it’s time to click Apply Settings.

And finally, click Reboot Router

PART E: Double-check Repeater Bridge

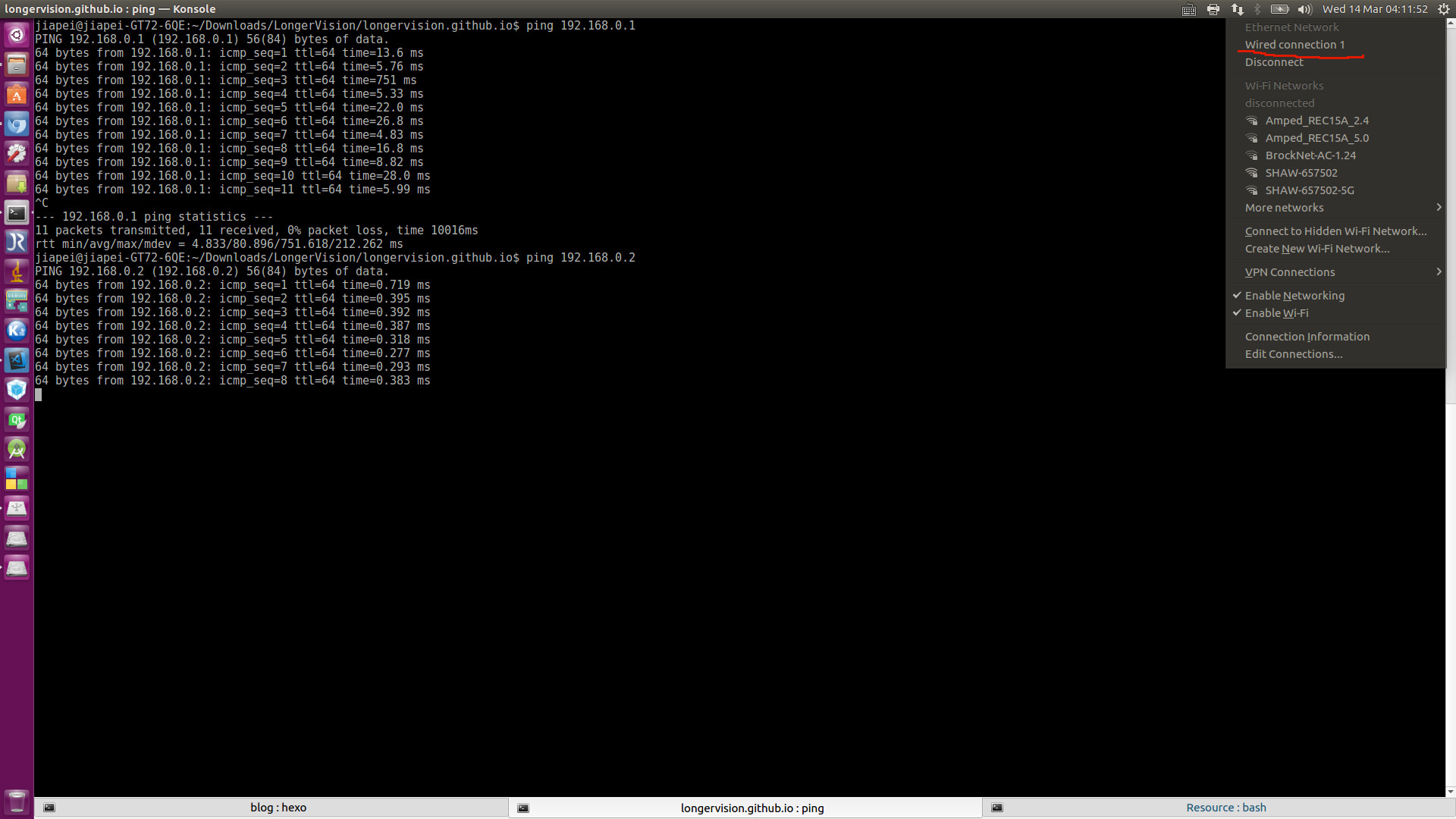

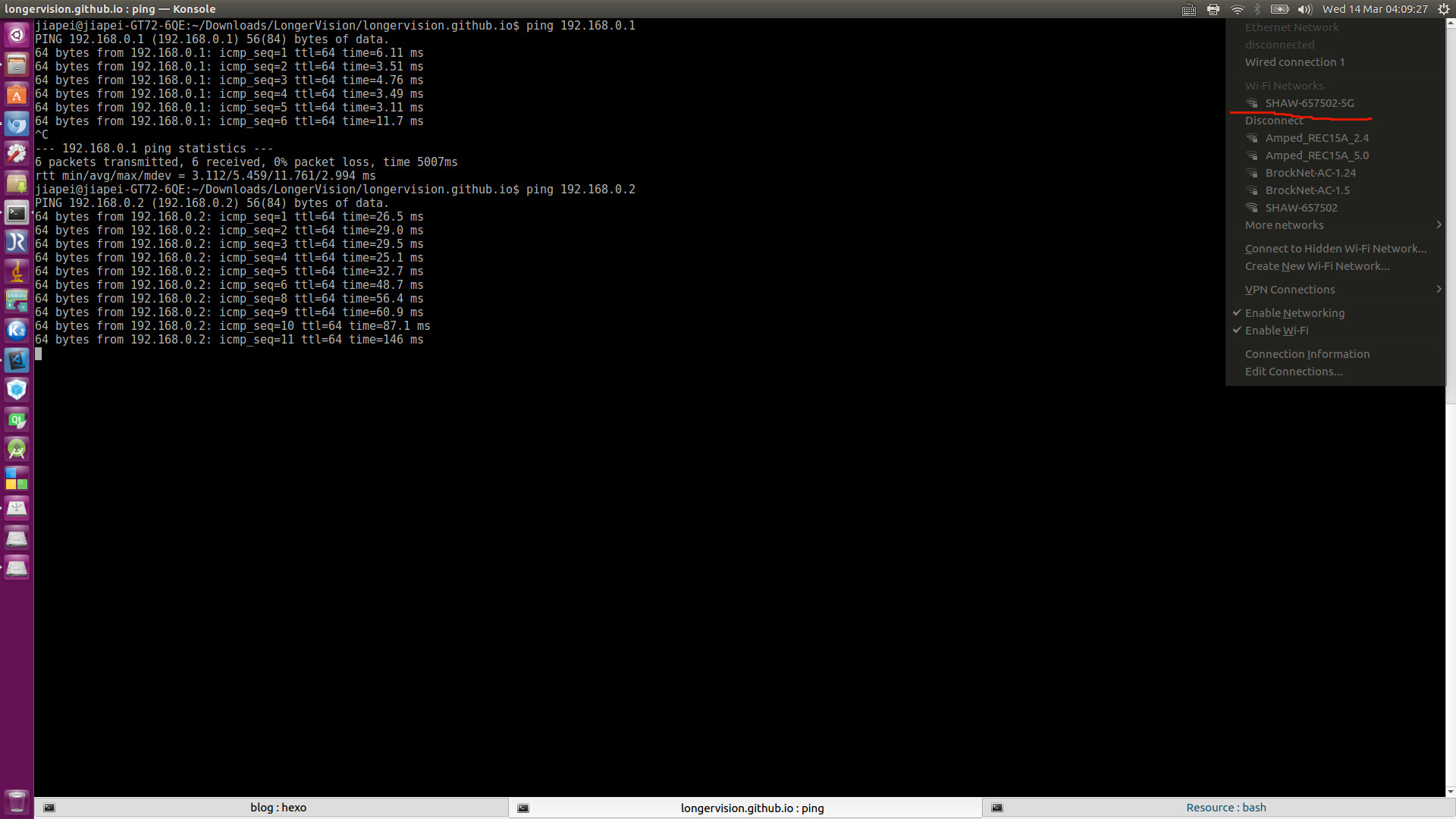

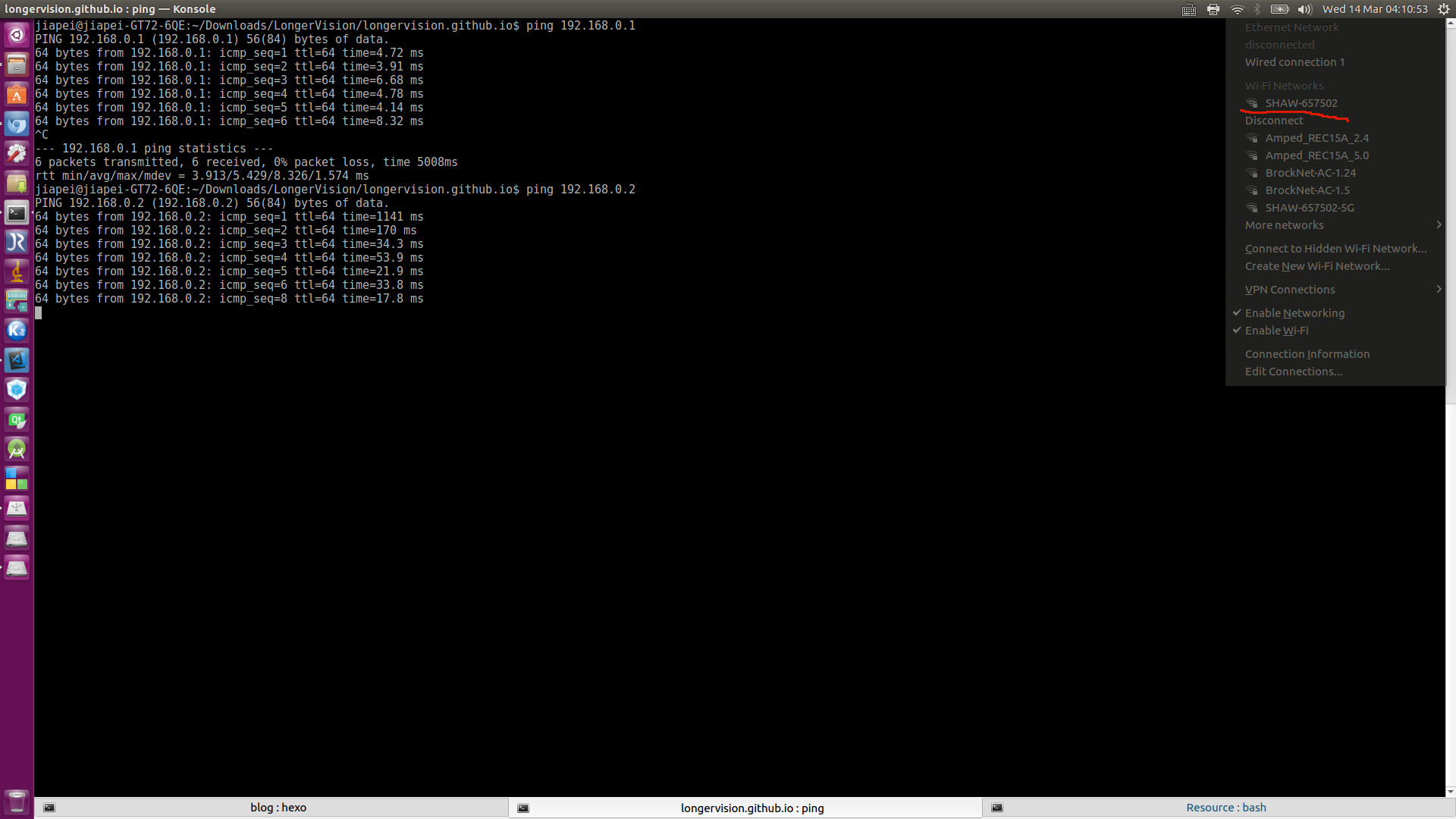

1. Ping

Let’s see the ping results directly:

1) PC Wired Connecting to DD-WRT (Router 2)

2) PC Wirelessly Connecting to Router 1 - 5G

3) PC Wirelessly Connecting to Router 1 - 2.4G

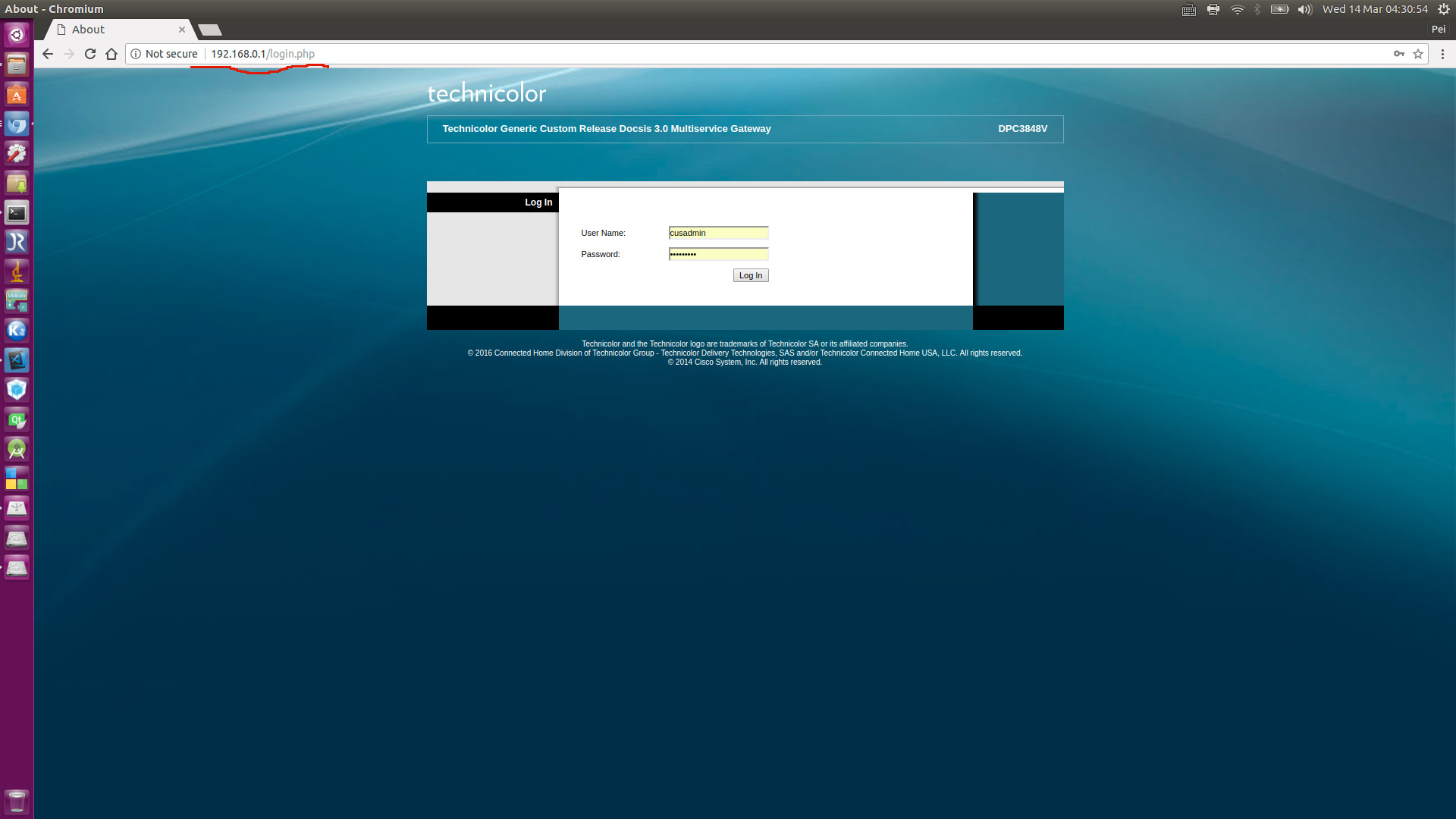

2. Visit http://192.168.0.1 and http://192.168.0.2

Most of the time, in order to get the fastest Internet speed, I use Primary Router 1’s 5G network. Therefore, for my final demonstration, my PC is wirelessly connected to the 5G network. And, I can successfully visit http://192.168.0.1 and http://192.168.0.2.

1) http://192.168.0.1

2) http://192.168.0.2