I’m currently in Shenzhen, China. For a business project, I need to test out Video.js these days.

Getting Started

Code

1 | <head> |

Today seems to be the FIRST big day of 2019? So many important packages (including operating systems) have released NEW updates. Alright, let’s take a look at what I’ve done today.

`uname -r`

`nvidia-smi`

`nvcc --version`

`cat /usr/local/cuda/include/cudnn.h | grep CUDNN_MAJOR -A 2`
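The `grep` on `cudnn.h` above can also be done from Python, which is handy when the version has to be checked inside a script. This is only a minimal sketch: the function name `cudnn_version` and the sample header text are made up for illustration, and the real values depend on the cuDNN release installed on your machine.

```python
import re

def cudnn_version(header_text):
    """Return (major, minor, patch) parsed from the text of cudnn.h."""
    fields = {}
    for name in ("MAJOR", "MINOR", "PATCHLEVEL"):
        # Match lines such as "#define CUDNN_MAJOR 7"
        match = re.search(r"^\s*#define\s+CUDNN_%s\s+(\d+)" % name,
                          header_text, re.MULTILINE)
        if match is None:
            raise ValueError("CUDNN_%s not found" % name)
        fields[name] = int(match.group(1))
    return fields["MAJOR"], fields["MINOR"], fields["PATCHLEVEL"]

# Hypothetical header excerpt, NOT the output from my machine.
sample = """#define CUDNN_MAJOR 7
#define CUDNN_MINOR 4
#define CUDNN_PATCHLEVEL 2
#define CUDNN_VERSION (CUDNN_MAJOR * 1000 + CUDNN_MINOR * 100 + CUDNN_PATCHLEVEL)
"""
print(cudnn_version(sample))
```

On a real system you would pass in the contents of `/usr/local/cuda/include/cudnn.h` instead of `sample`.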

`NVIDIA-SMI has failed because it couldn't communicate with the NVIDIA driver. Make sure that the latest NVIDIA driver is installed and running.`

The solution is inside BIOS. Please refer to How do I disable UEFI Secure Boot?.

Yup… The solution is just to switch Secure Boot from Enabled to Disabled in the BIOS.
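To confirm the Secure Boot state after rebooting, something like the following sketch may help. It assumes the `mokutil` tool is installed and that `mokutil --sb-state` prints a line such as `SecureBoot enabled` or `SecureBoot disabled`. The helper names are my own.

```python
import shutil
import subprocess

def parse_sb_state(output):
    """Map the text printed by `mokutil --sb-state` to True, False or None."""
    text = output.lower()
    if "secureboot enabled" in text:
        return True
    if "secureboot disabled" in text:
        return False
    return None  # unknown wording, or Secure Boot not supported

def secure_boot_enabled():
    """Return the Secure Boot state, or None when it cannot be determined."""
    if shutil.which("mokutil") is None:
        return None  # assumption: mokutil is the tool used for the query
    result = subprocess.run(["mokutil", "--sb-state"],
                            stdout=subprocess.PIPE,
                            stderr=subprocess.STDOUT,
                            universal_newlines=True)
    return parse_sb_state(result.stdout)

print(parse_sb_state("SecureBoot disabled"))
```

If `secure_boot_enabled()` still returns `True` after the BIOS change, the NVIDIA kernel module is likely still being rejected at load time.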

It seems Gazebo is NOW a part of Ignition Robotics??? Please take a look at my Ignition Robotics Bitbucket issue, “What’s the realationship between ign-gazebo and gazebo?”. And, today, let’s build Ignition Robotics from source.

NOTE: In order to build the LATEST version, please make sure you always prefer branch gz11 over branch default.

For instance ign-cmake:

1 | git clone https://bitbucket.org/ignitionrobotics/ign-cmake/src/gz11/ |

Two things to emphasize before building.

1 | #add_subdirectory(unifiedvideoinertialtracker) |

Now, let’s start building Ignition Robotics Development Libraries.

1 | //#include <filesystem> // line 35 |

1 | -- BUILD WARNINGS |

1 | 21:31:15: Starting .../IgnitionRobotics/Ogre2Demo... |

In my last blog, I talked about TorchSeg, an open-source PyTorch project developed by my master’s lab, namely the State-Level Key Laboratory of Multispectral Signal Processing at Huazhong University of Science and Technology.

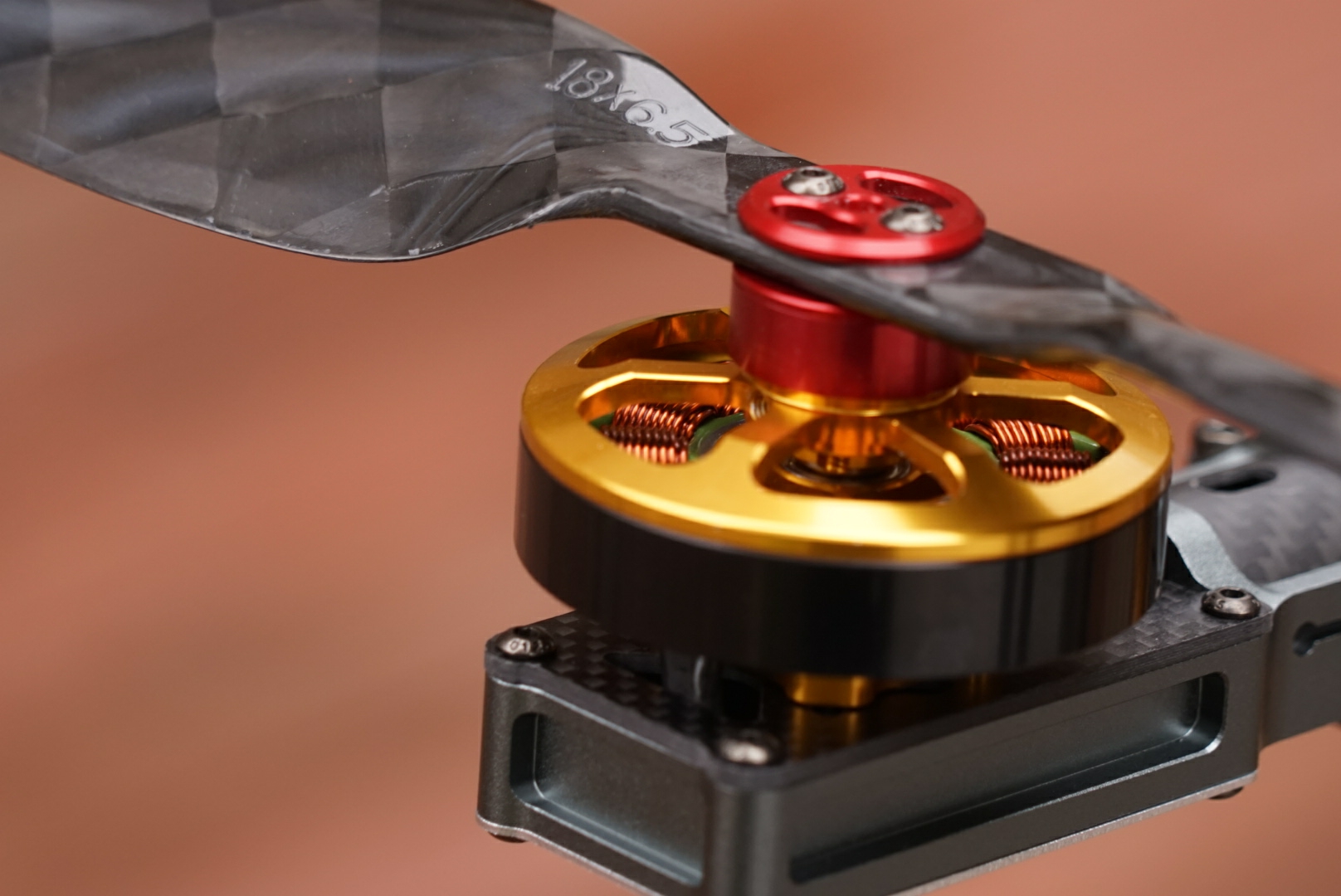

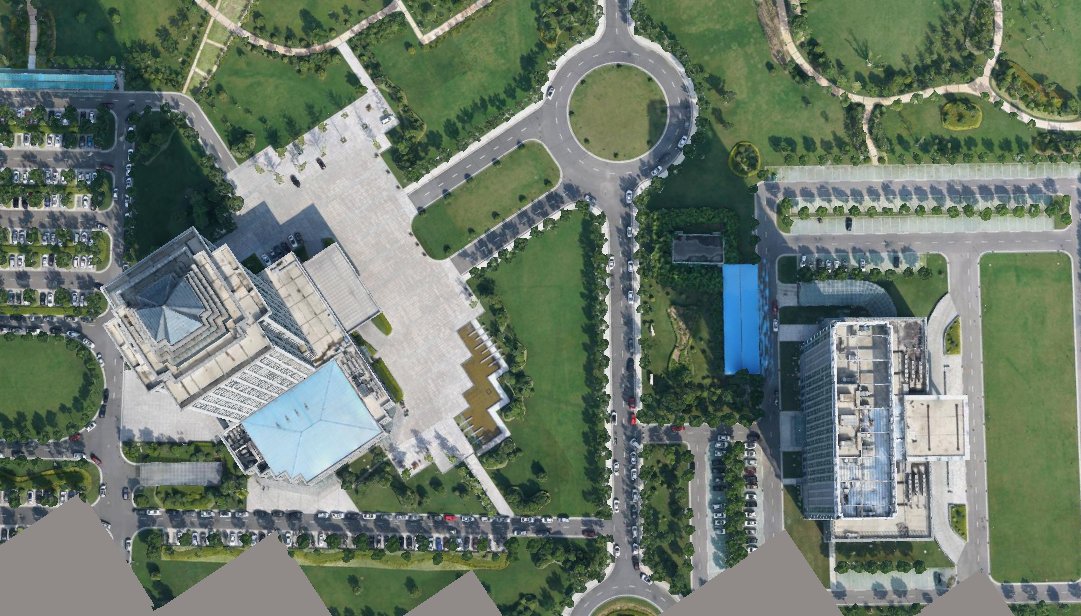

Today, I’m going to introduce professional drones developed by a start-up company, ZR3D, which was spun out of my bachelor’s department, namely the School of Remote Sensing and Information Engineering at Wuhan University. By the way, the State Key Laboratory of Information Engineering in Surveying, Mapping and Remote Sensing at Wuhan University specializes in tilt photogrammetry.

OK, now it’s time to show some of ZR3D’s products.

ZR3D drones can capture outdoor video stably. Below are some pictures of an OEM drone manufactured and assembled by ZR3D.

| ZR3D | South Survey | Longer Vision |
|---|---|---|

| Side View | Half Side View | Top View |
|---|---|---|

Indoor surveying & mapping is done by a cluster of servers, namely, on a small cloud. Currently, we are still dockerizing our own SDK.

Three videos briefly explain the three MOST important steps of indoor surveying & mapping, as shown:

| Point Cloud | Meshed | Textured |
|---|---|---|

Popular open-source drone firmware and websites that I’ve been testing are briefly listed below:

I was happy to learn that my master’s supervisor’s lab, namely the State-Level Key Laboratory of Multispectral Signal Processing at Huazhong University of Science and Technology, released TorchSeg just yesterday. I can’t help testing it out.

According to the README.md of TorchSeg, several packages need to be prepared FIRST:

1 | ➜ ~ pip show torch |

Download all the pretrained PyTorch models referenced in the .py files of PyTorch Vision Models. Let’s briefly summarize the models as follows:

After TorchSeg is checked out, we need to modify all the config.py files and ensure that every C.pretrained_model variable points to the RIGHT location with the RIGHT file name. In my case, I downloaded all PyTorch models into the same directory as TorchSeg; therefore, all C.pretrained_model variables are set as:

`C.pretrained_model = "./resnet18-5c106cde.pth"`

etc.
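Since a wrong C.pretrained_model path only shows up once training starts, a quick existence check up front may save some time. The sketch below is my own helper and not part of TorchSeg. The second file name is just an example of another torchvision checkpoint, and the temporary directory stands in for the TorchSeg folder.

```python
import tempfile
from pathlib import Path

def missing_checkpoints(paths, base_dir="."):
    """Return the checkpoint paths that do not exist relative to base_dir."""
    base = Path(base_dir)
    return [p for p in paths if not (base / p).is_file()]

# Demo in a throwaway directory: only the first checkpoint is "downloaded".
with tempfile.TemporaryDirectory() as tmp:
    (Path(tmp) / "resnet18-5c106cde.pth").touch()
    demo_missing = missing_checkpoints(
        ["resnet18-5c106cde.pth", "resnet50-19c8e357.pth"], base_dir=tmp)

print(demo_missing)
```

In practice, the list would hold every value assigned to C.pretrained_model across the config.py files.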

We also need to modify all variables C.dataset_path and make sure we are using the RIGHT dataset. In fact, ONLY two datasets are directly adopted in the originally checked-out code of TorchSeg.

Currently, it seems there are still some tricks to configuring these datasets. Please refer to my GitHub issue.

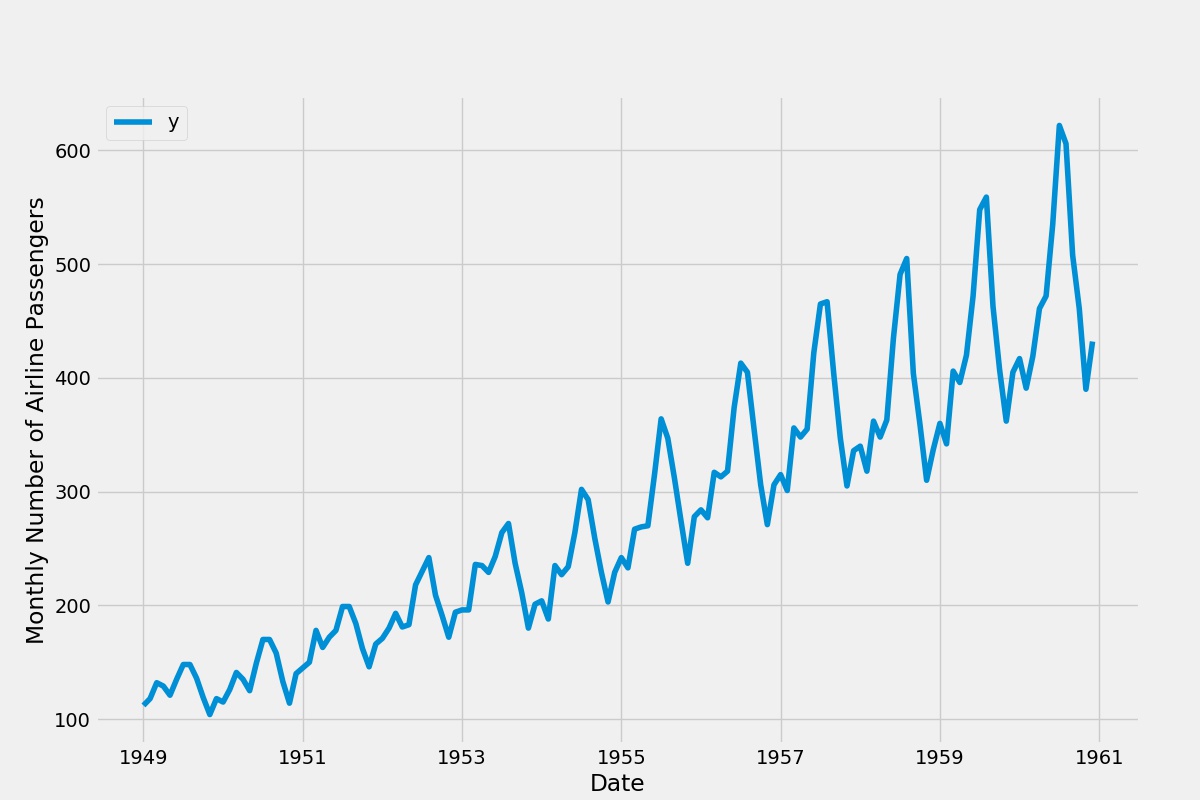

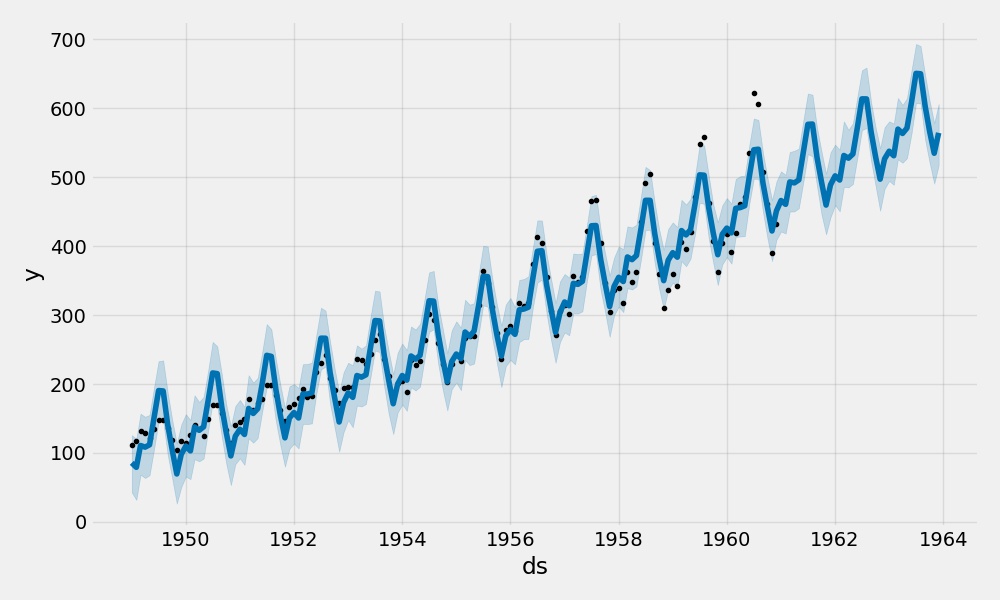

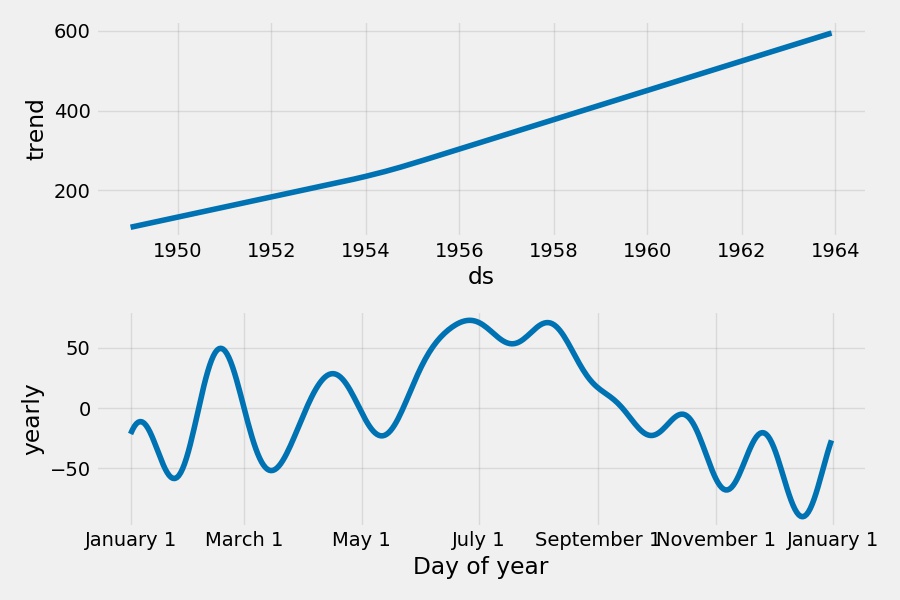

Today, we are going to test out Facebook Prophet by following this DigitalOcean Tutorial.

We FIRST make sure that 2 Python packages, Prophet and PyStan, have been successfully installed.

1 | ➜ ~ pip show prophet |

We just need to download the CSV file to some directory:

`curl -O https://assets.digitalocean.com/articles/eng_python/prophet/AirPassengers.csv`

I made trivial modifications to the code from this DigitalOcean Tutorial, as follows:

1 | import pandas as pd |
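One detail worth spelling out: Prophet expects its input to have a date column named `ds` and a value column named `y`. The sketch below shows that renaming step with only the standard library. The column names `Month` and `AirPassengers` are my assumption about the CSV header, and the two sample rows are just for illustration.

```python
import csv
import io

def to_prophet_rows(csv_text, date_col="Month", value_col="AirPassengers"):
    """Convert CSV text into rows keyed by 'ds' and 'y', as Prophet expects."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [{"ds": row[date_col], "y": float(row[value_col])} for row in reader]

# Tiny sample in the assumed layout of AirPassengers.csv.
sample = "Month,AirPassengers\n1949-01,112\n1949-02,118\n"
rows = to_prophet_rows(sample)
print(rows)
```

With pandas available, `pd.DataFrame(rows)` gives a frame in the shape Prophet expects.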

This error message appeared while I was trying to import matplotlib.pyplot as plt today. I’ve got NO idea what happened to my Python. But it seems this issue has been met and solved in some public posts. For instance:

In my case, I need to manually modify 3 files under folder ~/.local/lib/python3.6/site-packages/matplotlib.

1 | if not USE_FONTCONFIG and sys.platform != 'win32': |

to

1 | if not USE_FONTCONFIG and sys.platform != 'win32': |

Change all `mkdir(parents=True, exist_ok=True)` to `mkdir(parents=True)`.
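Note that dropping `exist_ok=True` changes the behaviour: `mkdir(parents=True)` raises an error when the directory already exists. If that matters, a small wrapper can keep the original semantics without the `exist_ok` argument. This is a sketch of my own, not code from matplotlib, and the name `ensure_dir` is hypothetical.

```python
import tempfile
from pathlib import Path

def ensure_dir(path):
    """Create path and its parents, tolerating an already existing directory."""
    path = Path(path)
    try:
        path.mkdir(parents=True)
    except FileExistsError:
        # Re-raise if something other than a directory is in the way.
        if not path.is_dir():
            raise

with tempfile.TemporaryDirectory() as tmp:
    target = Path(tmp) / "a" / "b"
    ensure_dir(target)
    ensure_dir(target)  # the second call must not raise
    created = target.is_dir()

print(created)
```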

I haven’t yet successfully tested three packages, all related to PyTorch: PyTorch itself, FlowNet2-Pytorch and vid2vid. Looking forward to assistance…

After successfully installing the current PyTorch version, 1.1, I still failed to import torch. Please refer to the following ERROR.

1 | ➜ ~ python |

In order to have PyTorch successfully imported, I had to remove the manually installed PyTorch v1.1 and install it with pip instead:

1 | pip3 install https://download.pytorch.org/whl/cu100/torch-1.0.0-cp36-cp36m-linux_x86_64.whl |

This is PyTorch v1.0, which seems NOT to come with caffe2 and, of course, should NOT be compatible with the installed caffe2 built with PyTorch v1.1. Can anybody help to solve this issue? Please also refer to my GitHub issue.
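The wheel file name in the pip command already tells us what is being installed: version 1.0.0, built for CPython 3.6 (`cp36`) on 64-bit Linux. The `cu100` part of the URL presumably denotes a CUDA 10.0 build. Below is a minimal sketch that splits such a file name following the wheel naming convention (PEP 427). The helper names are my own.

```python
import sys

def parse_wheel_filename(filename):
    """Split a wheel file name into its PEP 427 components."""
    if not filename.endswith(".whl"):
        raise ValueError("not a wheel: %r" % filename)
    parts = filename[:-len(".whl")].split("-")
    if len(parts) == 6:    # an optional build tag is present
        name, version, _build, python, abi, platform = parts
    elif len(parts) == 5:
        name, version, python, abi, platform = parts
    else:
        raise ValueError("unexpected wheel name: %r" % filename)
    return {"name": name, "version": version, "python": python,
            "abi": abi, "platform": platform}

def matches_running_python(python_tag):
    """True if a CPython tag such as 'cp36' matches this interpreter."""
    return python_tag == "cp%d%d" % sys.version_info[:2]

wheel = parse_wheel_filename("torch-1.0.0-cp36-cp36m-linux_x86_64.whl")
print(wheel)
print(matches_running_python(wheel["python"]))
```

The second print tells you whether the wheel matches the Python interpreter you are running.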

Remove anything and everything related to your previously installed PyTorch. In my case, the file /usr/local/lib/libc10.so had to be removed. To find out which files are possibly related to the package concerned, we can use the command ldd.

1 | ➜ lib ldd libcaffe2.so |
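To spot leftovers quickly, the `ldd` output can be filtered for libraries that resolve into a suspicious directory such as `/usr/local/lib`. The sketch below parses the usual `name => path (address)` lines. The sample output is made up for illustration and is NOT what my machine printed.

```python
import re

# Matches lines like: "\tlibc10.so => /usr/local/lib/libc10.so (0x00007f...)"
LDD_LINE = re.compile(r"^\s*(\S+)\s+=>\s+(\S+)\s+\(0x[0-9a-fA-F]+\)\s*$")

def resolved_libraries(ldd_output):
    """Map each library name to the path that ldd resolved it to."""
    libs = {}
    for line in ldd_output.splitlines():
        match = LDD_LINE.match(line)
        if match:
            libs[match.group(1)] = match.group(2)
    return libs

# Hypothetical ldd output.
sample = (
    "\tlinux-vdso.so.1 (0x00007ffd4a5f2000)\n"
    "\tlibc10.so => /usr/local/lib/libc10.so (0x00007f1c2a000000)\n"
    "\tlibm.so.6 => /lib/x86_64-linux-gnu/libm.so.6 (0x00007f1c29c00000)\n"
    "\tlibfoo.so => not found\n"
)
libs = resolved_libraries(sample)
suspects = sorted(p for p in libs.values() if p.startswith("/usr/local/lib/"))
print(suspects)
```

Any path in `suspects` is a candidate left over from the manual installation.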

It’s not hard to install FlowNet2-Pytorch with a single command:

1 | ➜ flownet2-pytorch git:(master) ✗ ./install.sh |

After installation, there will be 3 packages installed under folder ~/.local/lib/python3.6/site-packages:

1 | ➜ site-packages ls -lsd correlation* |

That is to say: you should NEVER import flownet2, nor correlation, nor channelnorm, nor resample2d, but

Here comes the ERROR:

1 | ➜ ~ python |

I’ve already posted an issue on GitHub. Has anybody solved this problem?

import torch FIRST.

1 | ➜ ~ python |
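My understanding is that the order matters because the compiled extensions link against shared libraries that are only loaded once torch has been imported. A small helper can make the order explicit. This is only a sketch: the extension name `correlation_cuda` in the comment is an assumption, and the demo uses standard-library modules so that it runs anywhere.

```python
import importlib

def import_in_order(module_names):
    """Import modules one by one, in the given order, and return them by name."""
    modules = {}
    for name in module_names:
        modules[name] = importlib.import_module(name)
    return modules

# Intended use (assumed module names):
#   import_in_order(["torch", "correlation_cuda"])
demo = import_in_order(["json", "math"])
print(list(demo))
```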

Where can we find and download checkpoints? Please refer to Inference on FlowNet2-Pytorch:

1 | python main.py --inference --model FlowNet2 --save_flow --inference_dataset MpiSintelClean --inference_dataset_root /path/to/mpi-sintel/clean/dataset --resume /path/to/checkpoints |

Training on FlowNet2-Pytorch gives the following ERROR:

`python main.py --batch_size 8 --model FlowNet2 --loss=L1Loss --optimizer=Adam --optimizer_lr=1e-4 --training_dataset MpiSintelFinal --training_dataset_root /path/to/mpi-sintel/training/final/mountain_1 --validation_dataset MpiSintelClean --validation_dataset_root /path/to/mpi-sintel/training/clean/mountain_1`

Therefore, I posted a further issue on Github.

There are 4 scripts under folder scripts to run before testing vid2vid.

1 | ➜ vid2vid git:(master) ✗ ll scripts/*.py |

However, ONLY 3 of the scripts run successfully; scripts/download_flownet2.py fails, as follows:

1 | ➜ vid2vid git:(master) python scripts/download_flownet2.py |

If we run the Testing command from the vid2vid homepage, it gives the following ERROR:

1 | ➜ vid2vid git:(master) python test.py --name label2city_2048 --label_nc 35 --loadSize 2048 --n_scales_spatial 3 --use_instance --fg --use_single_G |

This is still annoying me. A detailed GitHub issue has been posted today. Please give me a hand. Thanks…

With the most inspiring speech by Arnold Schwarzenegger, 2019 arrived… I’ve got something in my mind as well…

Everybody has a dream/goal. NEVER EVER blow it out… Here comes the preface of my PhD thesis… It’s been 10 years already… This is NOT ONLY my attitude towards science, BUT ALSO towards those so-called professors(叫兽) and specialists(砖家).

So, today, I’m NOT going to repeat any cliches, BUT I’m happily encouraged by Arnold Schwarzenegger’s speech. We will definitely have a fruitful 2019. Let’s have some pizza.

Merry Christmas everybody. Let’s take a look at the Christmas Lights around Lafarge Lake in Coquitlam FIRST.

| Lafarge Lake Christmas Lights 01 | Lafarge Lake Christmas Lights 02 |
|---|---|
| Lafarge Lake Christmas Lights 03 | Lafarge Lake Christmas Lights 04 |
| Lafarge Lake Christmas Lights 05 | Lafarge Lake Christmas Lights 06 |

Now, it’s time to test PyDicom.

I copied the code from Medical Image Analysis with Deep Learning, with trivial modifications, as follows:

1 | import cv2 |

I had an MRI scan in 2015 at SIAT for research purposes. The scan resolution is NOT very high. Anyway, a bunch of DICOM images can be viewed as follows:
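When a scan folder mixes DICOM files with other files, it can help to filter before handing anything to PyDicom. A standard DICOM file starts with a 128-byte preamble followed by the four bytes `DICM`, so a cheap check could look like the sketch below. The helper and file names are hypothetical, and some older files lack the preamble, so treat a negative result with care.

```python
import os
import tempfile

def looks_like_dicom(path):
    """Return True if the file has the 'DICM' marker after a 128-byte preamble."""
    with open(path, "rb") as handle:
        handle.seek(128)
        return handle.read(4) == b"DICM"

# Demo with two throwaway files instead of a real scan.
with tempfile.TemporaryDirectory() as tmp:
    good = os.path.join(tmp, "scan.dcm")
    bad = os.path.join(tmp, "notes.txt")
    with open(good, "wb") as handle:
        handle.write(b"\x00" * 128 + b"DICM")
    with open(bad, "wb") as handle:
        handle.write(b"plain text")
    results = (looks_like_dicom(good), looks_like_dicom(bad))

print(results)
```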